[[Category:Combinators]]

== General ==

Although combinatory logic has precursors, it was Moses Schönfinkel who first explored combinatory logic as such. Later the work was continued by Haskell B. Curry. Combinatory logic was developed as a theory for the foundation of [[mathematics]] [Bun:NatICL], and it has relevance in [[linguistics]] too.

Its goals were to understand paradoxes better and to establish fundamental mathematical concepts on simpler and cleaner principles than the existing mathematical frameworks, in particular to understand the concept of ''substitution'' better. Its “lack of (bound) variables” relates combinatory logic to the [[pointfree]] style of programming. (For contrast, see a very different approach which also enables full elimination of variables: [[recursive function theory]].)

General materials:

* Jonathan P. Seldin: [http://people.uleth.ca/~jonathan.seldin/CAT.pdf Curry’s anticipation of the types used in programming languages] (it is also an introduction to illative combinatory logic)
* Jonathan P. Seldin: [http://people.uleth.ca/~jonathan.seldin/CCL.pdf The Logic of Curry and Church] (it is also an introduction to illative combinatory logic)
* Henk Barendregt: [http://www.math.ucla.edu/~asl/bsl/0302/0302-003.ps The Impact of the Lambda-Calculus in Logic and Computer Science] (The Bulletin of Symbolic Logic, Volume 3, Number 2, June 1997). Besides its theoretical relevance, the article provides implementations of recursive datatypes in CL.
* [http://users.bigpond.net.au/d.keenan/Lambda/index.htm To Dissect a Mockingbird] and also [http://www.lns.cornell.edu/spr/2001-03/msg0031750.html Re: category theory <-> lambda calculus?], found on a [http://lambda-the-ultimate.org/classic/message4000.html Lambda the Ultimate] site. The links to the ''To Dissect a Mockingbird'' site given by these pages seem to be broken, but I found a new one (so the link given here is correct).

=== Portals and other large-scale resources ===

* [http://en.wikipedia.org/wiki/Combinatory_logic Wikipedia's]
* [http://planetmath.org/encyclopedia/CombinatoryLogic.html PlanetMath's]

== Applications ==

Of course, combinatory logic has significance in the foundations of [[mathematics]] and in functional programming and [[computer science]]. For example, see [[Chaitin's construction]].

Interestingly, it can also be important in some [[Libraries and tools/Linguistics|linguistic]] theories. See especially the theory of [[Libraries and tools/Linguistics/Applicative universal grammar|applicative universal grammar]], which uses many important ideas from combinatory logic.

[[Lojban]] is an artificial language (and, unlike the more a posteriori Esperanto, it is rather a priori in character). It is a human language, capable of expressing everything. Its grammar uses (among other things) constructs taken from mathematical logic, e.g. predicate-like structures. Although it does not make use of combinatory logic directly (indeed, from a category logic / functional programming point of view, it also uses rather imperative ideas), it may give hints and analogies on how combinatory logic can be useful in [[Libraries and tools/Linguistics|linguistics]].

== Implementing CL ==

* Talks about it at [http://comments.gmane.org/gmane.comp.lang.haskell.cafe/11408 haskell-cafe] <math>\subset</math> [http://www.mail-archive.com/haskell-cafe@haskell.org/msg12705.html haskell-cafe]
* Lots of interpreters at [http://homepages.cwi.nl/~tromp/cl/cl.html John's Lambda Calculus and Combinatory Logic Playground].
* [http://home.nvg.org/~oerjan/esoteric/ Unlambda resources] concerning [http://www.madore.org/~david/ David Madore]'s combinatory logic programming language [http://www.madore.org/~david/programs/unlambda/ Unlambda]
** an [http://home.nvg.org/~oerjan/esoteric/Unlambda.hs implementation of Unlambda in Haskell]
** another [http://www.cse.unsw.edu.au/~dons/code/lambdabot/scripts/Unlambda.hs implementation of Unlambda in Haskell], for use by [[Lambdabot]]
** an [http://home.nvg.org/~oerjan/esoteric/interpreter.unl Unlambda metacircular interpreter]
* [http://physis.fx3.hu/ CL++], a lazy-evaluating combinatory logic interpreter with some computer algebra service: e.g. it can answer the question <math>\mathbf+\;\mathbf2\;\mathbf3;</math> with <math>\mathbf5</math> instead of a huge amount of parentheses and <math>\mathbf K</math>, <math>\mathbf S</math> combinators. Unfortunately I have not written it directly in English, so all documentation, source code and libraries are in Hungarian. I want to rewrite it using more advanced Haskell programming concepts (e.g. monads or [[attribute grammar]]s) and directly in English.

== Base ==

Some thoughts on base combinators and on the relatedness of their rules to other topics:

* Conal Elliott's [http://www.mail-archive.com/haskell-cafe@haskell.org/msg00549.html reply to thread ''zips and maps'']
* [http://en.wikipedia.org/wiki/Combinatory_logic#Logic Intuitionistic fragment of propositional logic]
* Records in functions: in set theory and database theory, we regard functions as consisting of more elementary parts, records: a function <math>f</math> can be regarded as the set of all its records. A record is a pair of a key and its value, and for functions we expect uniqueness (and sometimes stress this requirement by writing <math>x \mapsto y</math> instead of <math>\left\langle x,\;y\right\rangle</math>). Sometimes I think of <math>\mathbf S</math> as having a taste of record selection: <math>\mathbf S\;c\;f\;x</math> selects a record determined by key <math>x</math> in function <math>f</math> (as in a database), and returns the found record (i.e. the corresponding key and value) contained in the <math>c</math> container ([[continuation]]). Is this thought just a toy or can it be brought further? Does it explain why <math>\mathbf S</math> and <math>\mathbf K</math> can constitute a base?
* Also, bracket abstraction gives us a natural way to understand the seemingly rather unintuitive and artificial <math>\mathbf S</math> combinator better.
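To make the bracket-abstraction remark concrete, here is a minimal Haskell sketch of the textbook S-K-I abstraction algorithm, together with a small leftmost-outermost reducer for checking results. The names <hask>Term</hask>, <hask>abstract</hask>, <hask>step</hask> and <hask>normalize</hask> are illustrative and do not come from any library. The application rule is where <math>\mathbf S</math> appears: abstracting <math>x</math> from <math>M\;N</math> gives <math>\mathbf S\;([x]M)\;([x]N)</math>, so <math>\mathbf S</math> simply hands the argument to both parts of the application.

```haskell
-- Combinatory terms over named variables (illustrative representation)
data Term = Var String | S | K | I | App Term Term
  deriving (Eq, Show)

-- Does the variable x occur in the term?
occurs :: String -> Term -> Bool
occurs x (Var y)   = x == y
occurs x (App m n) = occurs x m || occurs x n
occurs _ _         = False

-- Bracket abstraction [x] t with the usual rules:
--   [x] t     = K t                 (x does not occur in t)
--   [x] x     = I
--   [x] (M N) = S ([x] M) ([x] N)
abstract :: String -> Term -> Term
abstract x t | not (occurs x t) = App K t
abstract _ (Var _)   = I   -- x occurs in a variable only if it is x itself
abstract x (App m n) = App (App S (abstract x m)) (abstract x n)
abstract _ t         = App K t   -- S, K, I: already handled by the first clause

-- One leftmost-outermost reduction step, if a redex exists
step :: Term -> Maybe Term
step (App I x)                 = Just x
step (App (App K x) _)         = Just x
step (App (App (App S f) g) x) = Just (App (App f x) (App g x))
step (App m n) = case step m of
  Just m' -> Just (App m' n)
  Nothing -> App m <$> step n
step _ = Nothing

-- Reduce until no redex is left (diverges on terms without a normal form)
normalize :: Term -> Term
normalize t = maybe t normalize (step t)
```

For example, abstracting <hask>x</hask> from <hask>f x</hask> yields <math>\mathbf S\;(\mathbf K\;f)\;\mathbf I</math>, and applying that to <hask>a</hask> normalizes back to <hask>f a</hask>.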

== Programming in CL ==

I think many thoughts from John Hughes' [http://www.math.chalmers.se/~rjmh/Papers/whyfp.html Why Functional Programming Matters] can be applied to programming in Combinatory Logic. And almost all concepts used in the Haskell world (catamorphisms etc.) help us a lot here too. Combinatory logic is a powerful and concise programming language. I wonder how functional logic programming could be done by using the concepts of Illative combinatory logic, too.

=== Datatypes ===

==== Continuation passing for polynomial datatypes ====

===== Direct product =====

Let us begin with a notion of the ordered pair and denote it by <math>\Diamond_2</math>.

We know this construct well when defining operations for booleans

: <math>\mathbf{true} \equiv \mathbf K</math>
: <math>\mathbf{false} \equiv \mathbf{K_*}</math>
: <math>\mathbf{not} \equiv \mathbf{\Diamond_2}\;\mathbf{false}\;\mathbf{true}</math>

and Church numerals. I think that, in general, when defining datatypes in a continuation-passing way (e.g. Maybe or direct sum), operations on the so-defined datatypes often turn out to be well definable by some <math>\mathbf{\Diamond_n}</math>.

We define it with

: <math>\mathbf{\Diamond_2} \equiv \lambda\;x\;y\;f\;.\;f\;x\;y</math>

in <math>\lambda</math>-calculus and

: <math>\mathbf{\Diamond_2} \equiv \mathbf{C_{(1)}}\;\mathbf{C_*}</math>

in combinatory logic.
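As a quick sanity check, the following Haskell sketch transcribes <math>\Diamond_2</math> in its <math>\lambda</math> form together with the Church booleans. The names <hask>notC</hask> (used to avoid clashing with the Prelude's <hask>not</hask>) and the read-back helper <hask>toBool</hask> are our own illustrative choices.

```haskell
-- Diamond_2 in its lambda form: \x y f -> f x y
diamond2 :: a -> b -> (a -> b -> r) -> r
diamond2 x y f = f x y

-- Church booleans: true = K, false = K_* (= K I)
true :: a -> a -> a
true x _ = x

false :: a -> a -> a
false _ y = y

-- not = Diamond_2 false true, so  notC p -> p false true
notC :: ((a -> a -> a) -> (a -> a -> a) -> r) -> r
notC = diamond2 false true

-- Hypothetical helper: read a Church boolean back as a Haskell Bool
toBool :: (Bool -> Bool -> Bool) -> Bool
toBool p = p True False
```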

* as the <math>\langle\dots\rangle</math> construct can be generalized to any natural number <math>n</math> (the concept of <math>n</math>-tuple, see Barendregt's <math>\lambda</math> Calculus)
* and in this generalized scheme <math>\mathbf I</math> corresponds to the 0 case, <math>\mathbf{C_*}</math> to the 1 case, and the ordered pair construct <math>\Diamond_2</math> to the 2 case, as though defining

: <math>\mathbf{\Diamond_0} \equiv \mathbf I</math>
: <math>\mathbf{\Diamond_1} \equiv \mathbf{C_*}</math>

so we can write the definition

: <math>\mathbf{\Diamond_2} \equiv \mathbf{C_{(1)}}\;\mathbf{C_*}</math>

or, equivalently,

: <math>\mathbf{\Diamond_2} \equiv \mathbf C \cdot \mathbf{C_*}</math>

in a more interesting way:

: <math>\mathbf{\Diamond_2} \equiv \mathbf C\cdot\mathbf{\Diamond_1}</math>
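The tuple scheme can be transcribed into Haskell to check that the point-free form <math>\mathbf C\cdot\mathbf{\Diamond_1}</math> really behaves like <math>\lambda\;x\;y\;f\;.\;f\;x\;y</math>. This is only a sketch. The names <hask>c</hask>, <hask>diamond0</hask>, <hask>diamond1</hask> and <hask>diamond2</hask> are our own. It checks the cases up to 2 and says nothing about whether the pattern generalizes.

```haskell
-- C: swap the next two arguments
c :: (a -> b -> r) -> b -> a -> r
c f x y = f y x

-- Diamond_0 = I
diamond0 :: a -> a
diamond0 = id

-- Diamond_1 = C_*:  diamond1 x f -> f x
diamond1 :: a -> (a -> r) -> r
diamond1 x f = f x

-- Diamond_2 = C . Diamond_1 (i.e. C_(1) C_*):  diamond2 x y f -> f x y
diamond2 :: a -> b -> (a -> b -> r) -> r
diamond2 = c . diamond1
```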

Is this generalizable? I do not know.

I know an analogy in the case of <math>\mathbf{left}</math>, <math>\mathbf{right}</math>, <math>\mathbf{just}</math>, <math>\mathbf{nothing}</math>.

===== Direct sum =====

The notion of ordered pair mentioned above really enables us to deal with direct products. What about its dual concept? How do we make direct sums in Combinatory Logic? And after we have implemented them, how can we see that they really are the dual concept of the direct product?

A nice argument described in David Madore's [http://www.madore.org/~david/programs/unlambda/ Unlambda] page gives us a solution in continuation-passing style.

We expect reductions like

: <math>\mathbf{left}\;x \to \lambda\;f\;g\;.\;f\;x</math>
: <math>\mathbf{right}\;x \to \lambda\;f\;g\;.\;g\;x</math>

so we define

: <math>\mathbf{left} \equiv \lambda\;x\;f\;g\;.\;f\;x</math>
: <math>\mathbf{right} \equiv \lambda\;x\;f\;g\;.\;g\;x</math>

Now we translate them from <math>\lambda</math>-calculus into combinatory logic:

: <math>\mathbf{left} \equiv \mathbf{K_{(2)}}\;\mathbf{C_*}</math>
: <math>\mathbf{right} \equiv \mathbf{K_{(1)}}\;\mathbf{C_*}</math>
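These two combinator forms can be checked in Haskell. The sketch assumes that Curry's <math>\mathbf{X_{(1)}}</math> stands for <math>\mathbf B\;\mathbf X</math> and <math>\mathbf{X_{(2)}}</math> for <math>\mathbf B\;(\mathbf B\;\mathbf X)</math>, which makes the reductions above come out right. The results are compared with the Prelude's <hask>either</hask>.

```haskell
-- K: keep the first argument, drop the second
k :: a -> b -> a
k x _ = x

-- B: composition, b f g x = f (g x)
b :: (y -> z) -> (x -> y) -> x -> z
b f g x = f (g x)

-- C_*: apply the second argument to the first
cStar :: a -> (a -> r) -> r
cStar x f = f x

-- left = K_(2) C_*, assumed to mean B (B K) C_*:  left x f g -> f x
left :: a -> (a -> r) -> g -> r
left = b (b k) cStar

-- right = K_(1) C_*, assumed to mean B K C_*:  right x f g -> g x
right :: a -> f -> (a -> r) -> r
right = b k cStar
```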

Of course, we can recognize Haskell's direct sum construct

<haskell>
data Either a b = Left a | Right b  -- as defined in the Prelude
</haskell>

implemented in an analogous way.

===== Maybe =====

Let us remember Haskell's <hask>maybe</hask>:

<haskell>
-- as defined in the Prelude
maybe :: a' -> (a -> a') -> Maybe a -> a'
maybe n _ Nothing  = n
maybe _ j (Just x) = j x
</haskell>

thinking of

* ''n'' as the nothing-continuation
* ''j'' as the just-continuation

In a continuation-passing style approach, if we want to implement something like the Maybe construct in <math>\lambda</math>-calculus, then we may expect the following reductions:

: <math>\mathbf{nothing} \equiv \lambda\;n\;j\;.\;n</math>
: <math>\mathbf{just}\;x\;\to\;\lambda\;n\;j\;.\;j\;x</math>

We know both of them well: one is just <math>\mathbf K</math>, and we remember the other from the direct sum:

: <math>\mathbf{nothing} \equiv \mathbf K</math>
: <math>\mathbf{just} \equiv \mathbf{right}</math>

thus their definitions are

: <math>\mathbf{nothing} \equiv \mathbf K</math>
: <math>\mathbf{just} \equiv \mathbf{K_{(1)}}\;\mathbf{C_*}</math>
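The same encoding can be tried out in Haskell. In this sketch, <hask>MaybeC</hask> and <hask>toMaybe</hask> are our own names. <hask>toMaybe</hask> plugs Haskell's <hask>Nothing</hask> and <hask>Just</hask> in as the two continuations, so an encoded value can be compared with an ordinary <hask>Maybe</hask>. With continuations <hask>n</hask> and <hask>j</hask>, <hask>just x n j</hask> behaves like <hask>maybe n j (Just x)</hask>. As above, <math>\mathbf{K_{(1)}}</math> is assumed to mean <math>\mathbf B\;\mathbf K</math>.

```haskell
-- A Maybe given by its continuations: nothing-case first, then just-case
type MaybeC a r = r -> (a -> r) -> r

k :: a -> b -> a
k x _ = x

b :: (y -> z) -> (x -> y) -> x -> z
b f g x = f (g x)

cStar :: a -> (a -> r) -> r
cStar x f = f x

-- nothing = K:  nothing n j -> n
nothing :: MaybeC a r
nothing = k

-- just = K_(1) C_*, assumed to mean B K C_*:  just x n j -> j x
just :: a -> MaybeC a r
just = b k cStar

-- Hypothetical helper: read the encoding back as a Haskell Maybe
toMaybe :: MaybeC a (Maybe a) -> Maybe a
toMaybe p = p Nothing Just
```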

where both <math>\mathbf{just}</math> and <math>\mathbf{right}</math> have a common definition.

===== Maybe2 =====

Haskell:

<haskell>
module Maybe2 (Maybe2, maybe2, nothing2, just2) where

data Maybe2 a b = Nothing2 | Just2 a b

maybe2 :: maybe2ab' -> (a -> b -> maybe2ab') -> Maybe2 a b -> maybe2ab'
maybe2 nothing2Cont _ Nothing2 = nothing2Cont
maybe2 _ just2Cont (Just2 a b) = just2Cont a b

nothing2 :: Maybe2 a b
nothing2 = Nothing2

just2 :: a -> b -> Maybe2 a b
just2 = Just2
</haskell>

Expected reductions:

:<math>\mathbf{nothing2}\;n\;j \ge n</math>
:<math>\mathbf{just2}\;a\;b\;n\;j \ge j\;a\;b</math>

Other argument orders are possible, too, but they lead to more complicated solutions than the following:

Combinators:

:<math>\mathbf{nothing2} \equiv \mathbf K</math>
:<math>\mathbf{just2} \equiv \mathbf{K_{\left(2\right)}} \mathbf{\Diamond_2}</math>
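Here is a small Haskell check of these combinators. It is independent of the <hask>Maybe2</hask> module above, and the names <hask>nothing2C</hask> and <hask>just2C</hask> were chosen to avoid clashing with that module. The sketch assumes that <math>\mathbf{K_{(2)}}</math> stands for <math>\mathbf B\;(\mathbf B\;\mathbf K)</math>.

```haskell
k :: a -> b -> a
k x _ = x

b :: (y -> z) -> (x -> y) -> x -> z
b f g x = f (g x)

diamond2 :: a -> e -> (a -> e -> r) -> r
diamond2 x y f = f x y

-- nothing2 = K:  nothing2 n j -> n
nothing2C :: n -> j -> n
nothing2C = k

-- just2 = K_(2) Diamond_2, assumed to mean B (B K) Diamond_2:
--   just2 a e n j -> j a e
just2C :: a -> e -> n -> (a -> e -> r) -> r
just2C = b (b k) diamond2
```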

Conjecture for generalisation:

:<math>\mathbf{nothing_n} \equiv \mathbf K</math>
:<math>\mathbf{just_n} \equiv \mathbf{K_{\left(n\right)}} \mathbf{\Diamond_n}</math>

with straightforward generalisations, e.g.

:<math>\mathbf{\Diamond_1} \equiv \mathbf{C_*}</math>
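The conjecture can at least be spot-checked for one more case. The Haskell sketch below builds a three-element tuple combinator <hask>diamond3</hask> by analogy with <math>\mathbf{\Diamond_2}</math>, and takes <math>\mathbf{K_{(3)}}</math> to be <math>\mathbf B\;(\mathbf B\;(\mathbf B\;\mathbf K))</math>. Both are assumptions. Passing the type checker and the tests for <math>n=3</math> is evidence for the conjecture, not a proof of it.

```haskell
k :: a -> b -> a
k x _ = x

b :: (y -> z) -> (x -> y) -> x -> z
b f g x = f (g x)

-- Assumed Diamond_3:  diamond3 x y z f -> f x y z
diamond3 :: a -> e -> g -> (a -> e -> g -> r) -> r
diamond3 x y z f = f x y z

-- nothing_3 = K
nothing3 :: n -> j -> n
nothing3 = k

-- Conjectured just_3 = K_(3) Diamond_3, taken as B (B (B K)) Diamond_3:
--   just3 x y z n j -> j x y z
just3 :: a -> e -> g -> n -> (a -> e -> g -> r) -> r
just3 = b (b (b k)) diamond3
```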

==== Catamorphisms for recursive datatypes ====

===== List =====

Let us define the concept of list by its catamorphism (see Haskell's <hask>foldr</hask> function):

A list (each concrete list) is a function taking two arguments

* a two-parameter function argument (cons-continuation)

and returns a value coming from a term consisting of applying cons-continuations and nil-continuations in the same shape as the corresponding list.

E.g., having defined

: <math>\mathbf{oneTwoThree} \equiv \mathbf{cons}\;\mathbf1\;\left( \mathbf{cons}\;\mathbf2\;\left(\mathbf{cons}\;\mathbf3\;\mathbf{nil}\right) \right)</math>

the expression

: <math>\mathbf{oneTwoThree}\;\mathbf+\;\mathbf0</math>

reduces to

: <math>\mathbf+\;\mathbf1\;\left(\mathbf+\;\mathbf2\; \left(\mathbf+\;\mathbf3\;\mathbf0\right)\right)</math>

But how do we define <math>\mathbf{cons}</math> and <math>\mathbf{nil}</math>?

In <math>\lambda</math>-calculus, we would like to see the following reductions:

: <math>\mathbf{nil}\;c\;n\;\to\;n</math>
: <math>\mathbf{cons}\;h\;t\; \to \;\lambda\;c\;n\;.\;c\;h\;\left(t\;c\;n\right)</math>

Let us think of the variables as <math>h</math> denoting head, <math>t</math> denoting tail, <math>c</math> denoting cons-continuation, and <math>n</math> denoting nil-continuation.

Thus, we could achieve this goal with the following definitions:

: <math>\mathbf{nil} \equiv \lambda\;c\;n\;.\;n</math>
: <math>\mathbf{cons} \equiv \lambda\;h\;t\;c\;n\;.\;c\;h\;\left(t\;c\;n\right)</math>

Using the formulating combinators described in Haskell B. Curry's Combinatory Logic I, we can translate these definitions into combinatory logic without any pain:

: <math>\mathbf{nil} \equiv \mathbf{K_*}</math>
: <math>\mathbf{cons} \equiv \mathbf B \left( \mathbf{\Phi}\;\mathbf B \right) \mathbf{C_*}</math>
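Here is a Haskell transcription. It is a sketch that assumes the usual meaning of Curry's <math>\mathbf\Phi</math>, namely <math>\mathbf\Phi\;f\;g\;h\;x \to f\;(g\;x)\;(h\;x)</math>. The type synonym <hask>List a r</hask> is our own name for a list that folds into a result of type <hask>r</hask>. Folding <hask>oneTwoThree</hask> with <hask>(+)</hask> and <hask>0</hask> gives the sum. Folding it with <hask>(:)</hask> and <hask>[]</hask> gives back an ordinary Haskell list.

```haskell
-- A list given by its catamorphism: cons-continuation, then nil-continuation
type List a r = (a -> r -> r) -> r -> r

-- B: composition
b :: (y -> z) -> (x -> y) -> x -> z
b f g x = f (g x)

-- C_*: apply the second argument to the first
cStar :: a -> (a -> r) -> r
cStar x f = f x

-- K_*: drop the first argument, keep the second
kStar :: a -> b -> b
kStar _ y = y

-- Curry's Phi (assumed): phi f g h x = f (g x) (h x)
phi :: (p -> q -> r) -> (x -> p) -> (x -> q) -> x -> r
phi f g h x = f (g x) (h x)

-- nil = K_*:  nil c n -> n
nil :: List a r
nil = kStar

-- cons = B (Phi B) C_*:  cons h t c n -> c h (t c n)
cons :: a -> List a r -> List a r
cons = b (phi b) cStar

oneTwoThree :: List Integer r
oneTwoThree = cons 1 (cons 2 (cons 3 nil))
```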

Of course we could use the two parameters in the opposite order, but I am not sure yet that it would provide an easier way.

A little practice: let us define concat.

In Haskell, we can do that by

<haskell>
concat :: [[a]] -> [a]
concat = foldr (++) []
</haskell>

which corresponds in combinatory logic to the reduction

: <math>\mathbf{concat}\;l \to l\;\mathbf{append}\;\mathbf{nil}</math>

Let us use the ordered pair (direct product) construct:

: <math>\mathbf{concat}\equiv \mathbf{\Diamond_2}\;\mathbf{append}\;\mathbf{nil}</math>

and, if I use that nasty <math>\mathbf{centred}</math> (see later),

: <math>\mathbf{concat} \equiv \mathbf{centred}\;\mathbf{append}</math>
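Here is a self-contained Haskell sketch of <math>\mathbf{concat} \equiv \mathbf{centred}\;\mathbf{append}</math>. It relies on the definitions that appear further down this page, <math>\mathbf{append} \equiv \mathbf{C_*}\;\mathbf{cons}</math> and <math>\mathbf{centred} \equiv \mathbf C\;\mathbf{\Diamond_2}\;\mathbf{nil}</math>, and it assumes Curry's <math>\mathbf\Phi\;f\;g\;h\;x \to f\;(g\;x)\;(h\;x)</math>. The name <hask>concatC</hask> avoids a clash with the Prelude's <hask>concat</hask>. The names <hask>toList</hask>, <hask>xs</hask> and <hask>ys</hask> are illustrative helpers.

```haskell
-- A list given by its catamorphism: cons-continuation, then nil-continuation
type List a r = (a -> r -> r) -> r -> r

b :: (y -> z) -> (x -> y) -> x -> z
b f g x = f (g x)

-- C: swap the next two arguments
c :: (a -> e -> r) -> e -> a -> r
c f x y = f y x

cStar :: a -> (a -> r) -> r
cStar x f = f x

-- Curry's Phi (assumed): phi f g h x = f (g x) (h x)
phi :: (p -> q -> r) -> (x -> p) -> (x -> q) -> x -> r
phi f g h x = f (g x) (h x)

diamond2 :: a -> e -> (a -> e -> r) -> r
diamond2 x y f = f x y

nil :: List a r
nil _ n = n

cons :: a -> List a r -> List a r
cons = b (phi b) cStar

-- append = C_* cons:  append l m -> l cons m
append :: List a (List a r) -> List a r -> List a r
append = cStar cons

-- centred = C Diamond_2 nil:  centred f l -> l f nil
centred :: e -> (e -> List a r -> t) -> t
centred = c diamond2 nil

-- concat = centred append:  concatC l -> l append nil
concatC :: List (List a (List a r)) (List a r) -> List a r
concatC = centred append

-- Illustrative helpers
toList :: List a [a] -> [a]
toList l = l (:) []

xs, ys :: List Integer r
xs = cons 1 (cons 2 nil)
ys = cons 3 nil
```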

=== Monads in Combinatory Logic? ===

==== Concrete monads ====

===== Maybe as a monad =====

====== return ======

Implementing the <hask>return</hask> monadic method for the <hask>Maybe</hask> monad is rather straightforward, both in Haskell and CL:

<haskell>
instance Monad Maybe where
    return = Just  -- in current base, return defaults to pure
    -- ...
</haskell>

in Haskell and

: <math>\mathbf{maybe\!\!-\!\!return} \equiv \mathbf{just}</math>

in combinatory logic.

====== map ======

Haskell:

<haskell>
instance Functor Maybe where
    fmap f = maybe Nothing (Just . f)
</haskell>

<math>\lambda</math>-calculus: expected reduction:

: <math>\mathbf{maybe\!\!-\!\!map}\;f\;p\;\to\;p\;\mathbf{nothing}\;\left(\mathbf{just_{(1)}}\;f\right)</math>

Definition:

: <math>\mathbf{maybe\!\!-\!\!map}\equiv \lambda\;f\;p\;.\;p\;\mathbf{nothing}\;\left(\mathbf{just_{(1)}}\;f\right)</math>

Combinatory logic: we expect the same reduction here too:

: <math>\mathbf{maybe\!\!-\!\!map}\;f\;p\;\to\;p\;\mathbf{nothing}\;\left(\mathbf{just_{(1)}}\;f\right)</math>

Let us get rid of one parameter:

: <math>\mathbf{maybe\!\!-\!\!map}\;f\;\to\;\mathbf{\Diamond_2}\;\mathbf{nothing}\;\left(\mathbf{just_{(1)}}\;f\right)</math>

Now we have the definition:

: <math>\mathbf{maybe\!\!-\!\!map}\equiv \mathbf{\Diamond_2}\;\mathbf{nothing}\;\cdot\;\mathbf{just_{(1)}}</math>
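Here is a Haskell sketch of this derivation. It writes <math>\mathbf{just_{(1)}}</math> as <hask>b just</hask> (assuming <math>\mathbf{X_{(1)}} = \mathbf B\;\mathbf X</math>) and the composition dot as <hask>b</hask>. The encoded values are read back with a hypothetical helper <hask>toMaybe</hask>, and <hask>MaybeC</hask> is our own name for the encoding.

```haskell
-- A Maybe given by its continuations: nothing-case first, then just-case
type MaybeC a r = r -> (a -> r) -> r

k :: a -> b -> a
k x _ = x

b :: (y -> z) -> (x -> y) -> x -> z
b f g x = f (g x)

cStar :: a -> (a -> r) -> r
cStar x f = f x

diamond2 :: a -> e -> (a -> e -> r) -> r
diamond2 x y f = f x y

nothing :: MaybeC a r
nothing = k

just :: a -> MaybeC a r
just = b k cStar

-- maybe-map = Diamond_2 nothing . just_(1):
--   maybeMap f p -> p nothing (just_(1) f)
maybeMap :: (a -> e) -> MaybeC a (MaybeC e r) -> MaybeC e r
maybeMap = b (diamond2 nothing) (b just)

toMaybe :: MaybeC a (Maybe a) -> Maybe a
toMaybe p = p Nothing Just
```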

====== bind ======

Haskell:

<haskell>
instance Monad Maybe where
    p >>= f = maybe Nothing f p
</haskell>

<math>\lambda</math>-calculus: we expect

: <math>\mathbf{maybe\!\!-\!\!=\!\!<\!\!<}\;f\;p\;\to\;p\;\mathbf{nothing}\;f</math>

achieved by the definition

: <math>\mathbf{maybe\!\!-\!\!=\!\!<\!\!<} \equiv \lambda\;f\;p\;.\;p\;\mathbf{nothing}\;f</math>

In combinatory logic, we take the above expected reduction

: <math>\mathbf{maybe\!\!-\!\!=\!\!<\!\!<}\;f\;p\;\to\;p\;\mathbf{nothing}\;f</math>

and get rid of the outermost parameter

: <math>\mathbf{maybe\!\!-\!\!=\!\!<\!\!<}\;f\;\to\;\mathbf{\Diamond_2}\;\mathbf{nothing}\;f</math>

yielding the definition

: <math>\mathbf{maybe\!\!-\!\!=\!\!<\!\!<} \equiv \mathbf{\Diamond_2}\;\mathbf{nothing}</math>

and of course

: <math>\mathbf{maybe\!\!-\!\!\!>\!\!>\!\!=} \equiv \mathbf C\;\mathbf{maybe\!\!-\!\!=\!\!<\!\!<}</math>

But the other way (starting with a better-chosen parameter order) works much better:

: <math>\mathbf{maybe\!\!-\!\!\!>\!\!>\!\!=}\;p\;f\;\to\;p\;\mathbf{nothing}\;f</math>
: <math>\mathbf{maybe\!\!-\!\!\!>\!\!>\!\!=}\;p\;\to\;p\;\mathbf{nothing}</math>

yielding the much simpler and more efficient definition:

: <math>\mathbf{maybe\!\!-\!\!\!>\!\!>\!\!=} \equiv \mathbf{C_*}\;\mathbf{nothing}</math>
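Both parameter orders can be transcribed into Haskell to see that they agree. This is a sketch. The names <hask>maybeBindFlipped</hask> (for <math>\mathbf{maybe\!\!-\!\!=\!\!<\!\!<}</math>), <hask>maybeBind</hask> (for <math>\mathbf{maybe\!\!-\!\!\!>\!\!>\!\!=}</math>) and <hask>maybeBindViaC</hask> are our own. The example function <hask>half</hask>, which fails on odd numbers, is purely illustrative.

```haskell
-- A Maybe given by its continuations: nothing-case first, then just-case
type MaybeC a r = r -> (a -> r) -> r

k :: a -> b -> a
k x _ = x

b :: (y -> z) -> (x -> y) -> x -> z
b f g x = f (g x)

c :: (a -> e -> r) -> e -> a -> r
c f x y = f y x

cStar :: a -> (a -> r) -> r
cStar x f = f x

diamond2 :: a -> e -> (a -> e -> r) -> r
diamond2 x y f = f x y

nothing :: MaybeC a r
nothing = k

just :: a -> MaybeC a r
just = b k cStar

-- maybe-=<< = Diamond_2 nothing:  maybeBindFlipped f p -> p nothing f
maybeBindFlipped :: (a -> MaybeC e r) -> MaybeC a (MaybeC e r) -> MaybeC e r
maybeBindFlipped = diamond2 nothing

-- maybe->>= = C maybe-=<<
maybeBindViaC :: MaybeC a (MaybeC e r) -> (a -> MaybeC e r) -> MaybeC e r
maybeBindViaC = c maybeBindFlipped

-- maybe->>= = C_* nothing:  maybeBind p f -> p nothing f
maybeBind :: MaybeC a (MaybeC e r) -> (a -> MaybeC e r) -> MaybeC e r
maybeBind = cStar nothing

-- Illustrative: halve even numbers, fail on odd ones
half :: Integer -> MaybeC Integer r
half n = if even n then just (n `div` 2) else nothing

toMaybe :: MaybeC a (Maybe a) -> Maybe a
toMaybe p = p Nothing Just
```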

We already know that <math>\mathbf{C_*}</math> can be seen as a member of the tuple scheme: <math>\mathbf{\Diamond_n}</math> for the <math>n=1</math> case. As the tuple construction is a frequent guest in constructions like this (we shall meet it in list operations and in other Maybe operations such as <math>\mathbf{maybe\!\!-\!\!join}</math>), let us express the above definition with <math>\mathbf{C_*}</math> written as <math>\mathbf{\Diamond_1}</math>:

: <math>\mathbf{maybe\!\!-\!\!\!>\!\!>\!\!=} \equiv \mathbf{\Diamond_1}\;\mathbf{nothing}</math>

hoping that this will enable some interesting generalization in the future.

But why have we not made a braver generalization, expressing monadic bind in terms of monadic join and map? Later, in the list monad, we shall see that it may be better to avoid this for the sake of [[deforestation]]. Here a possibly similar problem appears: the problem of a superfluous <math>\mathbf I</math>.

====== join ======

: <math>\mathbf{maybe\!\!-\!\!join} \equiv \mathbf{\Diamond_2}\;\mathbf{nothing}\;\mathbf I</math>

We should think of changing the architecture if we suspect that we could avoid <math>\mathbf I</math> and solve the problem with a simpler construct.
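Here is a Haskell transcription of <math>\mathbf{maybe\!\!-\!\!join}</math>. It is a sketch, and <hask>maybeJoin</hask>, <hask>MaybeC</hask> and <hask>toMaybe</hask> are our own names. The <hask>id</hask> argument is exactly the <math>\mathbf I</math> called superfluous above: it only passes the inner encoded value through unchanged.

```haskell
-- A Maybe given by its continuations: nothing-case first, then just-case
type MaybeC a r = r -> (a -> r) -> r

k :: a -> b -> a
k x _ = x

b :: (y -> z) -> (x -> y) -> x -> z
b f g x = f (g x)

cStar :: a -> (a -> r) -> r
cStar x f = f x

diamond2 :: a -> e -> (a -> e -> r) -> r
diamond2 x y f = f x y

nothing :: MaybeC a r
nothing = k

just :: a -> MaybeC a r
just = b k cStar

-- maybe-join = Diamond_2 nothing I:  maybeJoin p -> p nothing id
maybeJoin :: MaybeC (MaybeC a r) (MaybeC a r) -> MaybeC a r
maybeJoin = diamond2 nothing id

toMaybe :: MaybeC a (Maybe a) -> Maybe a
toMaybe p = p Nothing Just
```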

===== The list as a monad =====

Let us think of our list operations as implementing monadic methods of the list monad. We can express this by definitions too; e.g. we could name

: <math>\mathbf{list\!\!-\!\!join} \equiv \mathbf{concat}</math>

Now let us look at mapping, concatenating and binding a list.

Mapping and binding have a common property: yielding nil for nil.

I shall say these operations are ''centred'': their definitions would contain a <math>\mathbf C\;\mathbf{\Diamond_2}\;\mathbf{nil}</math> subexpression. Thus I shall give a name to this subexpression:

: <math>\mathbf{centred} \equiv \mathbf C\;\mathbf{\Diamond_2}\;\mathbf{nil}</math>

Now let us define map and bind for lists:

: <math>\mathbf{list\!\!-\!\!map} \equiv \mathbf{centred}_{(1)}\;\mathbf{cons}_{(1)}</math>
: <math>\mathbf{list\!\!-\!\!=\!\!<\!\!<} \equiv \mathbf{centred}_{(1)}\;\mathbf{append}_{(1)}</math>
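Here is a Haskell sketch of these two definitions. It transcribes <math>\mathbf{X_{(1)}}</math> as <hask>b x</hask>, assuming <math>\mathbf{X_{(1)}} = \mathbf B\;\mathbf X</math>. It needs <math>\mathbf{append}</math>, which is defined only further down this page (<math>\mathbf{append} \equiv \mathbf{C_*}\;\mathbf{cons}</math>), and it assumes Curry's <math>\mathbf\Phi\;f\;g\;h\;x \to f\;(g\;x)\;(h\;x)</math>. The names <hask>listMap</hask>, <hask>listBindFlipped</hask>, <hask>toList</hask> and <hask>xs</hask> are our own.

```haskell
-- A list given by its catamorphism: cons-continuation, then nil-continuation
type List a r = (a -> r -> r) -> r -> r

b :: (y -> z) -> (x -> y) -> x -> z
b f g x = f (g x)

c :: (a -> e -> r) -> e -> a -> r
c f x y = f y x

cStar :: a -> (a -> r) -> r
cStar x f = f x

phi :: (p -> q -> r) -> (x -> p) -> (x -> q) -> x -> r
phi f g h x = f (g x) (h x)

diamond2 :: a -> e -> (a -> e -> r) -> r
diamond2 x y f = f x y

nil :: List a r
nil _ n = n

cons :: a -> List a r -> List a r
cons = b (phi b) cStar

append :: List a (List a r) -> List a r -> List a r
append = cStar cons

-- centred = C Diamond_2 nil:  centred f l -> l f nil
centred :: e -> (e -> List a r -> t) -> t
centred = c diamond2 nil

-- list-map = centred_(1) cons_(1):  listMap f l -> l (cons . f) nil
listMap :: (a -> e) -> List a (List e r) -> List e r
listMap = b centred (b cons)

-- list-=<< = centred_(1) append_(1):  listBindFlipped f l -> l (append . f) nil
listBindFlipped :: (a -> List e (List e r)) -> List a (List e r) -> List e r
listBindFlipped = b centred (b append)

toList :: List a [a] -> [a]
toList l = l (:) []

xs :: List Integer r
xs = cons 1 (cons 2 nil)
```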

Now we see that it was worth defining a common <math>\mathbf{centred}</math>.

But to tell the truth, it may be a trap. <math>\mathbf{centred}</math> breaks a symmetry: we should always define the cons and nil parts of the foldr construct on the same level, always together. Modularization should point in this direction, and not run headlong into the T-junction of <math>\mathbf{centred}</math>.

Another remark: of course we can get the monadic bind for lists

: <math>\mathbf{list\!\!-\!\!\!>\!\!>\!\!=} \equiv \mathbf{C}\;\mathbf{list\!\!-\!\!=\!\!<\!\!<}</math>

But we used <math>\mathbf{append}</math> here. How do we define it? It is surprisingly simple. Let us think about how we would define it in Haskell with the <hask>foldr</hask> function if it were not already defined as <hask>++</hask> in the Prelude.

In defining

<haskell>
(++) list1 list2
</haskell>

we can follow the recursion pattern of <hask>foldr</hask>:

<haskell>
(++) [] list2 = list2
(++) (a : as) list2 = a : (++) as list2
</haskell>

thus

<haskell>
(++) list1 list2 = foldr (:) list2 list1
</haskell>

Let us see how its corresponding expression should reduce in combinatory logic:

: <math>\mathbf{append}\;l\;m \to l\;\mathbf{cons}\;m</math>

thus

: <math>\mathbf{append}\,l\,m = l\,\mathbf{cons}\,m</math>
: <math>\mathbf{append}\;l =\!\!_1\;l\;\mathbf{cons}</math>
: <math>\mathbf{append} \equiv \mathbf{C_*}\;\mathbf{cons}</math>
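Here is a Haskell check that <math>\mathbf{append} \equiv \mathbf{C_*}\;\mathbf{cons}</math> agrees with <hask>(++)</hask>. The sketch converts ordinary lists into the catamorphism encoding with a hypothetical helper, <hask>fromList = foldr cons nil</hask>, appends them, and reads the result back. The definition of <math>\mathbf{cons}</math> assumes Curry's <math>\mathbf\Phi\;f\;g\;h\;x \to f\;(g\;x)\;(h\;x)</math>.

```haskell
-- A list given by its catamorphism: cons-continuation, then nil-continuation
type List a r = (a -> r -> r) -> r -> r

b :: (y -> z) -> (x -> y) -> x -> z
b f g x = f (g x)

cStar :: a -> (a -> r) -> r
cStar x f = f x

phi :: (p -> q -> r) -> (x -> p) -> (x -> q) -> x -> r
phi f g h x = f (g x) (h x)

nil :: List a r
nil _ n = n

cons :: a -> List a r -> List a r
cons = b (phi b) cStar

-- append = C_* cons:  append l m -> l cons m
-- (mirrors  (++) list1 list2 = foldr (:) list2 list1)
append :: List a (List a r) -> List a r -> List a r
append = cStar cons

-- Hypothetical helpers converting to and from ordinary lists
fromList :: [a] -> List a r
fromList = foldr cons nil

toList :: List a [a] -> [a]
toList l = l (:) []
```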

Defining the other monadic operation, return, for lists is easy:

<haskell>
instance Monad [] where
    return = (: [])
</haskell>

in Haskell. We know that

<haskell>
(: [])
</haskell>

translates to

<haskell>
return = flip (:) []
</haskell>

so we can see how to do it in combinatory logic:

: <math>\mathbf{list\!\!-\!\!return} \equiv \mathbf C\;\mathbf{cons}\;\mathbf{nil}</math>
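Here is a Haskell check of <math>\mathbf{list\!\!-\!\!return} \equiv \mathbf C\;\mathbf{cons}\;\mathbf{nil}</math> against <hask>(: [])</hask>. It is a sketch: <hask>listReturn</hask> and <hask>toList</hask> are our own names, and the encoding of <hask>cons</hask> assumes Curry's <math>\mathbf\Phi\;f\;g\;h\;x \to f\;(g\;x)\;(h\;x)</math>.

```haskell
-- A list given by its catamorphism: cons-continuation, then nil-continuation
type List a r = (a -> r -> r) -> r -> r

b :: (y -> z) -> (x -> y) -> x -> z
b f g x = f (g x)

c :: (a -> e -> r) -> e -> a -> r
c f x y = f y x

cStar :: a -> (a -> r) -> r
cStar x f = f x

phi :: (p -> q -> r) -> (x -> p) -> (x -> q) -> x -> r
phi f g h x = f (g x) (h x)

nil :: List a r
nil _ n = n

cons :: a -> List a r -> List a r
cons = b (phi b) cStar

-- list-return = C cons nil:  listReturn x -> cons x nil
listReturn :: a -> List a r
listReturn = c cons nil

toList :: List a [a] -> [a]
toList l = l (:) []
```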

==== How to AOP with monads in Combinatory Logic? ====

We have defined the list monad in CL. Of course, we can also make a monadic Maybe, a binary tree, or an Error monad with direct sum constructs...

But [[separation of concerns]] by monads is more than having a bunch of special monads. It requires other possibilities too: e.g. being able to use monads generically, in a way that can become any concrete monad.

Of course, my simple CL interpreter knows nothing about type classes or overloading. But there is a rather restricted and static possibility provided by the concept of ''definition'' itself:

: <math>\mathbf{work} \equiv \mathbf{A\!\!-\!\!\!>\!\!>\!\!=}\;\mathbf{subwork\!\!-\!\!1}\;\mathbf{parametrized\!\!-\!\!subwork\!\!-\!\!2}</math>

and if later we want to change the binding mode named A, e.g. from a failure-handling Maybe-like one to a more general, nondeterminism-handling list-like one, we can do that simply by replacing the definition

: <math>\mathbf{A\!\!-\!\!\!>\!\!>\!\!=} \equiv \mathbf{maybe\!\!-\!\!\!>\!\!>\!\!=}</math>

with the definition

: <math>\mathbf{A\!\!-\!\!\!>\!\!>\!\!=} \equiv \mathbf{list\!\!-\!\!\!>\!\!>\!\!=}</math>

||
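A rough Haskell analogue of swapping the binding mode A. Haskell would normally use the `Monad` class here; to mirror the static, definition-based approach instead, this sketch passes the binding mode as an ordinary argument. `maybeBind`, `listBind` and `work` are hypothetical names for this illustration only.

```haskell
-- Failure-handling (Maybe-like) binding mode
maybeBind :: Maybe a -> (a -> Maybe b) -> Maybe b
maybeBind Nothing  _ = Nothing
maybeBind (Just x) k = k x

-- Indeterminism-handling (list-like) binding mode
listBind :: [a] -> (a -> [b]) -> [b]
listBind xs k = concatMap k xs

-- work defined as: A->>= subwork-1 parametrized-subwork-2,
-- with the binding mode A supplied from outside
work :: (m a -> (a -> m b) -> m b) -> m a -> (a -> m b) -> m b
work bindA subwork1 subwork2 = bindA subwork1 subwork2
```

For example `work maybeBind (Just 3) (\x -> Just (x * 2))` gives `Just 6`, while `work listBind [1, 2] (\x -> [x, x * 10])` gives `[1,10,2,20]`: the body of `work` stays the same, only the binding mode changes.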

== Self-replication, quines, reflective programming ==

=== Background ===

David Madore's [http://www.madore.org/~david/computers/quine.html Quines (self-replicating programs)] and [http://www.ipl.t.u-tokyo.ac.jp/~scm/ Shin-Cheng Mu's many writings], including a [http://www.ipl.t.u-tokyo.ac.jp/~scm/quine.hs Haskell quine], give us wonderful insights into mathematical logic, programming and self-reference.
[http://en.wikipedia.org/wiki/Quine Wikipedia's quine page] and John Bethencourt's [http://www.upl.cs.wisc.edu/~bethenco/quines/ quine quine].
See also the writings of Raymond Smullyan, Hofstadter ([http://www.cogs.indiana.edu/people/homepages/hofstadter.html also his current research project on a self-watching cognitive architecture]), Manfred Eigen and Ruthild Winkler: ''Laws of the Game / How the Principles of Nature Govern Chance'', [http://homepage.univie.ac.at/Karl.Sigmund/ Karl Sigmund]'s ''Games of Life'', and reflective programming (see [http://www2.parc.com/csl/groups/sda/projects/reflection96/ Reflection '96] and P. Maes & D. Nardi: ''Meta-Level Architectures and Reflection''). See also [http://www.cs.auckland.ac.nz/CDMTCS/chaitin/ G.J. Chaitin], especially his [http://www.cs.auckland.ac.nz/CDMTCS/chaitin/italy.html Understandable Papers on Incompleteness], and in particular [http://www.cs.auckland.ac.nz/CDMTCS/chaitin/unknowable/index.html The Unknowable] (the book ''is'' available on this page; just scroll down below the big colored photos).
The book begins with the limits of mathematics (Gödel's undecidability, Turing's uncomputability, Chaitin's randomness); ''but'' (or exactly ''because of that''?) it ends with writing on the future and beauty of science.
I must read ''Autopoiesis'' and ''The Tree of Knowledge'' by Maturana and Varela carefully to say whether their topics are related to this one. See
* [http://www.shambhala.com/html/catalog/items/isbn/0-87773-642-1.cfm?selectedText=WEB_TOC table of contents in English]
* annotations
** [http://www.ibiblio.org/hhalpin/homepage/notes/auto.html on Autopoiesis (in English)]
** [http://www.kia.hu/konyvtar/szemle/438.htm on The Tree of Knowledge (in Hungarian)]. To summarize this annotation by citing its main thought: intelligence is not a mere map of the outer world, but a continuous world-creating, and there are as many worlds as there are minds.


=== Self-replication ===

Quines: the idea of self-replication can be conveyed by the concept of a program which is able to print its own listing.
But pure <math>\lambda</math>-calculus and combinatory logic do not know any notion of ''printing''! We would like to capture the ''essence'' of self-replication without resorting to the imperative world.

==== Representation, quotation -- the DNA ====

Let us introduce the concept of representing combinatory logic terms. How could we do that? For example, by binary trees. The leaves should represent the base combinators, and the branches mean application.

And how to represent combinatory logic terms -- in combinatory logic itself? The first thought could be that it is not a problem: each combinatory logic term could be represented by itself.

Sometimes this idea works. The huge power of higher-order functions lies exactly in being able to treat data as programs and vice versa. Sometimes this enables us to do things which could be done in other languages only by carefully designing a representation, a specific language.

But sometimes representing CL terms by themselves is not enough. Let us imagine a tutoring program! Let the topic be combinatory logic, and the language of implementation -- combinatory logic, too. How should the tutoring program ask the pupil questions like:
: Tell me if the following two expressions have the same normal form:
: <math>\mathbf K\;\mathbf{24}\;\mathbf{48}</math>
: <math>\mathbf{24}</math>

The problem is that our program is simply ''unable'' to distinguish between CL terms which have the same normal form (in fact, equivalence of terms cannot be decided in general either). If we represent CL terms by themselves, we simply ''lose'' a lot of information, including any possibility to make distinctions between equivalent terms.

We see that this is related to making a distinction between target language and metalanguage (see Imre Ruzsa, or Haskell B. Curry).

In this example, the distinction is:
* We deal with combinatory logic expressions because our program has to teach them: the program relates to them just as a vocabulary program relates to English.
* But we also deal with combinatory logic expressions because our program is implemented in them, just as VIM relates to C++.

We said CL terms are eventually trees. Let us represent them with trees then -- but now let us think of trees not as term trees, but as datatypes which we must construct by hand, in a similar way as we defined Maybes, direct sums, direct products and lists.
; <math>\mathbf K</math>
: <math>\mathbf{leaf}\;\mathbf{true}</math>
; <math>\mathbf S</math>
: <math>\mathbf{leaf}\;\mathbf{false}</math>
; <math>\left(a\;b\right)</math>
: <math>\mathbf{branch}\;\alpha\;\beta</math>
where <math>\alpha</math> denotes the representation of <math>a</math> and <math>\beta</math> that of <math>b</math>.

Let us make a distinction between term trees and datatype trees. A Haskell example:
* many Haskell expressions can be regarded as term trees,
* but only special Haskell expressions can be seen as datatype trees: those which are constructed from <hask>Branch</hask> and <hask>Leaf</hask> in an appropriate way.

Similarly,
* ''all'' CL expressions can be regarded as term trees,
* but CL expressions which can be regarded as datatype trees must obey a huge number of constraints: they may consist only of <math>\mathbf{leaf}</math>, <math>\mathbf{branch}</math>, <math>\mathbf{true}</math>, <math>\mathbf{false}</math> subexpressions, combined in an appropriate way.

(In fact, all CL expressions can be regarded as datatype trees too: CL is a total thing, and we can use each CL expression in the same way as a datatype tree: we can apply it to leaf- and branch-continuation arguments. ''Something'' will always happen. At worst it will diverge -- but lazy trees can diverge too, and they are inarguably datatype trees. But now let us ignore all these facts, and let us define the notion of quotation in the restrictive way: let the definition require the term to be structured ''in a predefined way''.)

We use datatype trees for representing other expressions. Let us call CL expressions which can represent (another CL expression) ''quotations''.
Quotations are exactly the datatype trees, but
* the word ''quotation'' refers to their function,
* the word ''datatype tree'' refers to their implementation, structure.

This means a datatype tree
* is not only a tree regarded as a term tree,
* but, on a higher level, is itself a recursive datatype implemented in CL: it consists appropriately of <math>\mathbf{leaf}</math>, <math>\mathbf{branch}</math> and <math>\mathbf{true}</math>, <math>\mathbf{false}</math> subexpressions, so that we can reason about it in CL itself.

How do quotations relate to all CL expressions?
* In one direction, informally, we could say that quotations make up a very proper subset of all CL expressions (attention: the cardinality is the same!). Not all CL expressions are datatype trees.
* But in the other direction, all CL expressions can be quoted! For each CL expression there is a (unique) CL expression which quotes it!
We can define a quote function on the set of all CL expressions.
But it is conceptually an ''outside'' function, not a CL combinator itself!
(That is why I do not typeset it in boldface. Is it an example of what Curry called epitheory?)
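Since quote is an outside function, it can be sketched in the implementing language. This is a hypothetical Haskell illustration: the datatypes `CL` and `Quoted` are assumptions, and `leaf`, `branch`, `true`, `false` are treated as opaque, already-defined constants rather than their actual CL definitions. `unquote` returns `Nothing` for term trees that are not datatype trees.

```haskell
-- Object-level CL terms: base combinators and application only.
data CL = K | S | App CL CL
  deriving (Eq, Show)

-- Terms built from leaf, branch, true and false, treated here as
-- opaque already-defined constants (an assumption of this sketch).
data Quoted = QLeaf | QBranch | QTrue | QFalse | QApp Quoted Quoted
  deriving (Eq, Show)

-- The "outside" quote function:
--   K -> leaf true,  S -> leaf false,  (a b) -> branch alpha beta
quote :: CL -> Quoted
quote K         = QApp QLeaf QTrue
quote S         = QApp QLeaf QFalse
quote (App a b) = QApp (QApp QBranch (quote a)) (quote b)

-- Not every term tree over these constants is a datatype tree:
-- unquote succeeds exactly on the results of quote.
unquote :: Quoted -> Maybe CL
unquote (QApp QLeaf QTrue)        = Just K
unquote (QApp QLeaf QFalse)       = Just S
unquote (QApp (QApp QBranch a) b) = App <$> unquote a <*> unquote b
unquote _                         = Nothing
```

Structural equality of quotations (`quote a == quote b`) then plays the role of ''same terms'': two terms with the same normal form but different shapes get different quotations.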


After having solved the representation (quoting) problem, we can do many things. We can define meta-concepts, e.g.
; <math>\equiv</math> (the notion of ''same terms'')
: by bool tree equality
; <math>=</math> (equivalence made by ''reduction'')
: by building a metacircular interpreter
We can write our tutor program too. But let us discuss cleaner and more theoretical questions.

==== Concept of self-replication generalized -- pure functional quines ====

How can the concept of a quine be transferred to combinatory logic?
In the definition below, let us think of
* <math>A</math>'s as ''actions'', programs
* and <math>Q</math>'s as ''quotations'', representations

{|
| A quine is a CL term <math>A</math>
| this means quines are pure CL concepts, with no imperative compromises
|-
| for whose normal form <math>A_0</math>
| this means quines are ''run''
|-
| there exists an equivalent CL term <math>Q</math> where
| datatypes in CL are almost never defined in their normal form (not even ordered pairs are!). They save us from losing information, but they almost never do that literally. I ran into this as a problem of finding nice rewritings when I wanted to implement CL with computer algebra services
|-
| <math>Q</math> is a quotation,
| which manifests itself in the fact that <math>Q</math> is a datatype tree (not only a term tree) with boolean leaves,
|-
| <math>Q</math> quotes <math>A</math>
| and <math>Q</math> is exactly the representation of <math>A</math>
|}

So a quine is a program which is run, then rewritten as a quotation, and so we get the representation of the original program.

Of course the first three requirements can be contracted into two.
Thus, a quine is a CL term which is equivalent to its own representation (if we mean representation as treated here).


=== A metacircular interpreter ===
We have seen that we can represent CL expressions in CL itself, which enables us to do some meta things (see the intro of this section, especially reflective programming, e.g. [http://www2.parc.com/csl/groups/sda/projects/reflection96/ Reflection '96]).
The first idea could be: to implement CL in itself!

==== Implementing lazy evaluation ====
The most important subtask to achieve this goal is to implement the algorithm of lazy evaluation.
I confess I simply lack almost any knowledge of algorithms for implementing lazy evaluation. In my Haskell programs, when they must implement lazy evaluation, I use the following hand-made algorithm.


Functions with an increasing number of arguments pass the term tree to each other while analyzing it deeper and deeper. The functions are <hask>eval</hask>, <hask>apply</hask>, <hask>curry</hask> and <hask>lazy</hask>, but I renamed <hask>curry</hask>, because there is also a Prelude function (and a whole concept behind it) with the same name. So I chose Schönfinkel's name for the third function in this scheme -- it can be justified by the fact that [http://www.csse.monash.edu.au/~lloyd/tildeProgLang/Curried/ Curry himself attributed the idea of currying to Moses Schönfinkel] (but the idea is [http://www.andrew.cmu.edu/user/cebrown/notes/vonHeijenoort.html#Schonfinkel anticipated by Frege too]).
----
<haskell>
module Reduce where

import Term
import Tree
import BaseSym

-- eval: no pending arguments; only an application can be a redex
eval :: Term -> Term
eval (Branch function argument) = apply function argument
eval atom = atom

-- apply: one pending argument
apply :: Term -> Term -> Term
apply (Branch f a) b = schonfinkel f a b
apply atom argument = strictApply atom argument

-- schonfinkel: two pending arguments; K x y reduces to x,
-- a compound head is passed on to lazy
schonfinkel :: Term -> Term -> Term -> Term
schonfinkel (Leaf K) f x = eval f
schonfinkel (Branch f a) b c = lazy f a b c
schonfinkel s a b = strictSchonfinkel s a b

-- lazy: three pending arguments; S c f x reduces to c x (f x),
-- any other head is first reduced with two arguments, then applied to the third
lazy :: Term -> Term -> Term -> Term -> Term
lazy (Leaf S) c f x = schonfinkel c x (Branch f x)
lazy k_or_compound x y z = schonfinkel k_or_compound x y `apply` z

-- No redex at the head: rebuild the application, evaluating the argument
strictApply :: Term -> Term -> Term
strictApply f a = f `Branch` eval a

strictSchonfinkel :: Term -> Term -> Term -> Term
strictSchonfinkel f a b = strictApply f a `strictApply` b
</haskell>
----
<haskell>
module Term where

import BaseSym
import Tree

type Term = Tree BaseSym
type TermV = Tree (Either BaseSym Var)
</haskell>
----
<haskell>
module Tree where

data Tree a = Leaf a | Branch (Tree a) (Tree a)
  deriving (Eq, Show)
</haskell>
----
<haskell>
module BaseSym where

data BaseSym = K | S
  deriving (Eq, Show)
type Var = String
</haskell>
----
It seems hard to me to implement this in CL.
Almost all of these functions are mutually recursive definitions, and it looks hard to me to formulate the fixpoint.
Of course I could find another algorithm. The main problem is that reducing CL trees is not so simple: the <math>\mathbf S</math> rule requires lookahead of 2 levels. Maybe once I will find another one with monads, arrows, or [[attribute grammar]]s...
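One standard way to get a single fixpoint for a group of mutually recursive definitions is tupling: take the fixpoint of one function on a pair of functions (in CL the pair would be the ordered pair construct). This is a generic technique, not the page's own solution; the sketch uses Haskell's `Data.Function.fix` on a toy pair `isEven`/`isOdd` standing in for `eval`, `apply`, `schonfinkel` and `lazy`.

```haskell
import Data.Function (fix)

-- isEven and isOdd refer to each other. Instead of two recursive
-- definitions we take ONE fixpoint of a function on a pair of functions.
-- The lazy pattern ~(ev, od) lets fix tie the knot without forcing the pair.
evenOdd :: (Integer -> Bool, Integer -> Bool)
evenOdd = fix step
  where
    step ~(ev, od) =
      ( \n -> n == 0 || od (n - 1)   -- isEven
      , \n -> n /= 0 && ev (n - 1)   -- isOdd
      )

-- Both are meant for n >= 0 only (negative n does not terminate).
isEven, isOdd :: Integer -> Bool
isEven = fst evenOdd
isOdd  = snd evenOdd
```

With four functions the pair becomes a 4-tuple, which in CL could be built from the same tuple scheme used for ordered pairs.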


== A lightweight CL implementation ==

I mean:
* instead of writing a CL interpreter (or compiler) with a huge number of command-line arguments and colon-escaped prompt commands,
* why not write a lightweight library? (Lightweight in the sense in which Parsec is lightweight in comparison to parser generators, or QuickCheck is lightweight.)
This latter framework can contain the former one, e.g. by implementing
<haskell>
interpreter :: IO ()
compiler :: Term -> Haskell
</haskell>
where <hask>Haskell</hask> can be a string, an appropriately designed abstract datatype, a rose tree or a Template Haskell term representation of Haskell expressions, thus any representation used in Haskell metaprogramming (in the picture below, does it correspond to <math>Term_{-\infty}</math>?).

So the latter can contain the former, but what are the new advantages?
<haskell>
abstract :: Var -> TermV -> Maybe Term
abstractV :: Var -> TermV -> TermV
rewrite :: Term -> Reader Definitions (Tree (Either NonVar Definiendum))
</haskell>
where <hask>type Definitions = Map Definiendum Term</hask>.
So we can get computer algebra and other useful services in a modular way.
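The signatures above come without definitions. Here is a hedged sketch of `abstractV` and `abstract` using the textbook S-K bracket abstraction rules; the page's own algorithm may differ (for example, it may use more combinators to get shorter results). The datatypes follow the Tree, BaseSym and Term modules above, repeated so that the block is self-contained; `occurs` and `closed` are hypothetical helpers.

```haskell
-- Datatypes as in the BaseSym, Tree and Term modules
data BaseSym = K | S deriving (Eq, Show)
type Var = String
data Tree a = Leaf a | Branch (Tree a) (Tree a) deriving (Eq, Show)
type Term = Tree BaseSym
type TermV = Tree (Either BaseSym Var)

-- Does variable x occur in the term?
occurs :: Var -> TermV -> Bool
occurs x (Leaf (Right y)) = x == y
occurs _ (Leaf (Left _))  = False
occurs x (Branch f a)     = occurs x f || occurs x a

-- Bracket abstraction [x]t:
--   [x]t     = K t              if x does not occur in t
--   [x]x     = S K K
--   [x](f a) = S ([x]f) ([x]a)
abstractV :: Var -> TermV -> TermV
abstractV x t
  | not (occurs x t) = Branch k t
  | otherwise = case t of
      Leaf _     -> Branch (Branch s k) k   -- here t is the variable x itself
      Branch f a -> Branch (Branch s (abstractV x f)) (abstractV x a)
  where
    k = Leaf (Left K)
    s = Leaf (Left S)

-- Succeeds only if no free variables are left
closed :: TermV -> Maybe Term
closed (Leaf (Left c))  = Just (Leaf c)
closed (Leaf (Right _)) = Nothing
closed (Branch f a)     = Branch <$> closed f <*> closed a

abstract :: Var -> TermV -> Maybe Term
abstract x = closed . abstractV x
```

So `abstract "x"` applied to the variable `x` yields `S K K`, while abstracting over a term that still contains another free variable yields `Nothing`.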

||

| + | |||

| + | Of course also an interpreter can yield useful services, but as the user interface grows, it develops to have a command language, which is in most cases imperative having less gluing possibilities than being able to use Haskell itself by this lightweight approach. |

||

| + | |||

| + | In fact, it the was the quines (mentioned above) that forced me to think of a lightweight CL library instead of an interpreter. Writing a CL quine (in the way I can do) requires a lot of <math>\mathrm{quote}\!/\!_0</math>: ''quoting CL terms in CL itself''. But quoting CL cannot be done in CL (only <math>\mathrm{quote}\!/\!_1</math>: quoting-the-quotation-of-CL-further can be done in CL), so my CL quine plans needed a lot of work quoting CL terms by hand. A lightweight CL library could do this job by using the power of Haskell (quoting CL terms can be done in Haskell, or more generally said: in the ultimate implementating language of this CL-project). |

||

| + | |||

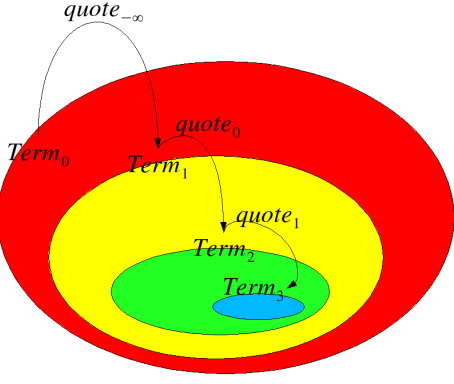

| + | [[Image:quot-strat.png|The quotation stratification of subsequent series of meta- and object languages]] |

||

| + | |||

| + | The quotation stratification of subsequent series of meta- and object languages: |

||

| + | :<math>\mathrm{Term}_0\supset\mathrm{Term}_1\supset\mathrm{Term}_2\supset\dots</math> |

||

| + | ;<math>\mathrm{Term}_0</math>: |

||

| + | :the ultimate implementing language of thus CL project (here Haskell) |

||

| + | ;<math>\mathrm{Term}_1</math>: |

||

| + | :combinatory logic, its terms being represented by ''Haskell'' binary tree abstract datatype. |

||

| + | ;<math>\mathrm{Term}_2</math>: |

||

| + | :combinatory logic, its terms being represented by ''combinatory logic'' binary tree abstract datatype. We have already seen that we can define lists in combinatory logic by catamorhisms, so we can define binary trees too. And we can represent the base combinators by booleans which can be defined too in CL. So we can ''quote'' each CL term in CL itself. |

||

| + | ;<math>\mathrm{Term}_2</math>: |

||

| + | :... |

||

| + | |||

| + | Quoting: |

||

| + | {| cellspacing=7 cellpadding=7 |

||

| + | | <math>\mathrm{quote}/_0\in\mathrm{Term}_0</math> |

||

| + | | <math>\mathrm{quote}/_0:\mathrm{Term}_1\to\mathrm{Term}_2</math> |

||

| + | |- |

||

| + | | <math>\mathrm{quote}/_1\in\mathrm{Term}_1</math> |

||

| + | | <math>\mathrm{quote}/_1:\mathrm{Term}_2\to\mathrm{Term}_3</math> |

||

| + | |} |

||

| + | Invoking: |

||

| + | {| cellspacing=7 cellpadding=7 |

||

| + | | <math>\mathrm{invoke}/_1\in\mathrm{Term}_1</math> |

||

| + | | <math>\mathrm{invoke}/_1:\mathrm{Term}_2\to\mathrm{Term}_1</math> |

||

| + | |} |

||

| + | <haskell> |

||

| + | -- Yet we do not require type safety in quotation stratification of CL terms: |

||

| + | type Term1 = Term |

||

| + | type Term2 = Term |

||

| + | type Term3 = Term |

||

| + | -- ... |

||

| + | quote'0 :: Term1 -> Term2 |

||

| + | quote'1_0 :: Term2 -> Term3 |

||

| + | -- ... |

||

| + | -- At or beyond the depth of quote'1 |

||

| + | -- these functions can be implemented in CL too |

||

| + | -- more generally: everyhing can be made at a higher level too, |

||

| + | -- but not vice versa. So everyhing has a grad |

||

| + | -- We index things by this grad |

||

| + | -- Ultimate implementing language (here:Haskell) is designed by grad 0 |

||

| + | -- Unavailable grad is designed by minus infinity |

||

| + | -- (see the analogy of grad of polinomials) |

||

| + | quote'1 :: Term1 |

||

| + | quote'2_1 :: Term1 |

||

| + | quote'2 :: Term2 |

||

| + | </haskell> |

||

== Illative combinatory logic ==

* Jonathan P. Seldin: [http://people.uleth.ca/~jonathan.seldin/CAT.pdf Curry’s anticipation of the types used in programming languages]
* Jonathan P. Seldin: [http://people.uleth.ca/~jonathan.seldin/CCL.pdf The Logic of Curry and Church] (it is also an introduction to illative combinatory logic)

* Henk Barendregt, Martin Bunder, Wil Dekkers: [http://citeseer.ist.psu.edu/246934.html Systems of Illative Combinatory Logic complete for first-order propositional and predicate calculus].

I think combinator <math>\mathbf G</math> can be thought of as something analogous to [[Dependent type]]:
it seems to me that the dependent type construct
<math>\forall x : S \Rightarrow T</math>

| Line 205: | Line 622: | ||

<math>\mathbf G\;S\;(\lambda x . T)</math>
in Illative Combinatory Logic. I think e.g. the following should correspond to each other:
: <math>\mathbf{realNullvector} :\;\;\;\forall n: \mathbf{Nat} \Rightarrow \mathbf{RealVector}\;n</math>
: <math>\mathbf G\;\,\mathbf{Nat}\;\,\mathbf{RealVector}\;\,\mathbf{realNullvector}</math>
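Haskell has no full dependent types, but the shape of <math>\forall n: \mathbf{Nat} \Rightarrow \mathbf{RealVector}\;n</math> can be approximated with GADTs and a singleton type for natural numbers. This is only an informal analogue of <math>\mathbf G\;\mathbf{Nat}\;\mathbf{RealVector}\;\mathbf{realNullvector}</math>; the names `SNat`, `Vec` and `toListV` are assumptions of this sketch.

```haskell
{-# LANGUAGE DataKinds, GADTs, KindSignatures #-}

data Nat = Z | Succ Nat

-- Singleton: the runtime value of type SNat n mirrors the type-level n
data SNat (n :: Nat) where
  SZ :: SNat 'Z
  SS :: SNat n -> SNat ('Succ n)

-- Real vectors indexed by their length
data Vec (n :: Nat) where
  VNil  :: Vec 'Z
  VCons :: Double -> Vec n -> Vec ('Succ n)

-- For every n : Nat, a real vector of length n (the null vector)
realNullvector :: SNat n -> Vec n
realNullvector SZ     = VNil
realNullvector (SS n) = VCons 0 (realNullvector n)

toListV :: Vec n -> [Double]
toListV VNil         = []
toListV (VCons x xs) = x : toListV xs
```

The result type `Vec n` depends on the argument, which is the point of the analogy: a function whose codomain varies with the element of <math>\mathbf{Nat}</math> it is given.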


My dream is making something in Illative Combinatory Logic. Maybe it could be a theoretical base for a functional logic language?

== References ==

* [Bun:NatICL] Martin W. Bunder: The naturalness of Illative Combinatory Logic as a Basis for Mathematics, see in ''Dedicated to H.B.Curry on the occasion of his 80th birthday''.
[[Category:Theoretical foundations]]

Latest revision as of 00:32, 15 July 2011

General

Although combinatory logic has precursors, it was Moses Schönfinkel who first explored combinatory logic as such. Later the work was continued by Haskell B. Curry. Combinatory logic was developed as a theory for the foundation of mathematics [Bun:NatICL], and it has relevance in linguistics too.

Its goal was to understand paradoxes better, and to establish fundamental mathematical concepts on simpler and cleaner principles than the existing mathematical frameworks, especially to understand better the concept of substitution. Its “lack of (bound) variables” relates combinatory logic to the pointfree style of programming. (For contrast, see a very different approach which also enables full elimination of variables: recursive function theory)

General materials:

- Jonathan P. Seldin: Curry’s anticipation of the types used in programming languages (it is also an introduction to illative combinatory logic)

- Jonathan P. Seldin: The Logic of Curry and Church (it is also an introduction to illative combinatory logic)

- Henk Barendregt: The Impact of the Lambda-Calculus in Logic and Computer Science (The Bulletin of Symbolic Logic Volume 3, Number 2, June 1997). Besides theoretical relevance, the article provides implementations of recursive datatypes in CL

- To Dissect a Mockingbird and also Re: category theory <-> lambda calculus?, found on a Lambda the Ultimate site. The links to the To Dissect a Mockingbird site given by these pages seem to be broken, but I found a new one (so the link given here is correct).

Portals and other large-scale resources

Applications

Of course combinatory logic has significance in the foundations of mathematics, or in functional programming, computer science. For example, see Chaitin's construction.

It is interesting that it can also be important in some linguistic theories. See especially the theory of applicative universal grammar, which uses many important thoughts from combinatory logic.

Lojban is an artificial language (and, unlike the more a posteriori Esperanto, it is rather a priori in taste). It is a human language, capable of expressing everything. Its grammar uses (among other things) ideas taken from mathematical logic, e.g. predicate-like structures. Although it does not make use of combinatory logic directly (indeed, from a category logic / functional programming point of view, it also uses rather imperative ideas), it may give hints and analogies of how combinatory logic can be useful in linguistics.

Implementing CL

- Talks about it at haskell-cafe haskell-cafe

- Lot of interpreters at John's Lambda Calculus and Combinatory Logic Playground.

- Unlambda resources concerning David Madore's combinatory logic programming language Unlambda

- an implementation of Unlambda in Haskell

- another implementation of Unlambda in Haskell, for use by Lambdabot

- an Unlambda metacircular interpreter

- CL++, a lazy-evaluating combinatory logic interpreter with some computer algebra service: e.g. it can reply the question with instead of a huge amount of parentheses and , combinators. Unfortunately I have not written it directly in English, so all documentation, source code and libraries are in Hungarian. I want to rewrite it using more advanced Haskell programming concepts (e.g. monads or attribute grammars) and directly in English.

Base

Some thoughts on base combinators and on the relatedness of their rules to other topics

- Conal Elliott's reply to thread zips and maps

- Intuitionistic fragment of propositional logic

- Records in function: in set theory and database theory, we regard functions as consisting of more elementary parts, records: a function can be regarded as the set of all its records. A record is a pair of a key and its value, and for functions we expect unicity (and sometimes stress this requirement by writing instead of ). Sometimes I think of as having a taste of record selection: selects a record determined by key in function (as in a database), and returns the found record (i.e. corresponding key and value) contained in the container (continuation). Is this thought just a toy or can it be brought further? Does it explain why and can constitute a base?

- Also bracket abstraction gives us a natural way to understand the seemingly rather unintuitive and artificial combinator better

Programming in CL

I think many thoughts from John Hughes' Why Functional Programming Matters can be applied to programming in Combinatory Logic. And almost all concepts used in the Haskell world (catamorphisms etc.) help us a lot here too. Combinatory logic is a powerful and concise programming language. I wonder how functional logic programming could be done by using the concepts of Illative combinatory logic, too.

Datatypes

Continuation passing for polynomial datatypes

Direct product

Let us begin with a notion of the ordered pair and denote it by . We know this construct well when defining operations for booleans

and Church numbers. I think, in general, when defining datatypes in a continuation-passing way (e.g. Maybe or direct sum), operations on so-defined datatypes often turn out to be well-definable by some .

We define it with

in -calculus and

in combinatory logic.
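A hedged check in Haskell, assuming the pair reduces as <math>\langle x, y\rangle\;f \to f\;x\;y</math>: with B as `(.)`, C as `flip`, I as `id` and K as `const`, the expression B C (C I) behaves as the pair constructor. This is one possible CL expression for the pair, derived by hand here; it is not necessarily the formula intended above. The projections come from applying the pair to K and to K I.

```haskell
-- Base combinators as Haskell functions
b :: (y -> z) -> (x -> y) -> x -> z
b = (.)

c :: (x -> y -> z) -> y -> x -> z
c = flip

i :: x -> x
i = id

k :: x -> y -> x
k = const

-- pair x y f = f x y, written point-free as B C (C I):
-- B C (C I) x y f = C (C I x) y f = C I x f y = I f x y = f x y
pair :: x -> y -> (x -> y -> r) -> r
pair = b c (c i)
```

For example `pair 1 2 (+)` is `3`, `pair 'a' True k` selects `'a'`, and `pair 'a' True (k i)` selects `True`.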

A nice generalization scheme:

- as the construct can be generalized to any natural number (the concept of -tuple, see Barendregt's Calculus)

- and in this generalized scheme corresponds to the 0 case, to the 1 case, and the ordered pair construct to the 2 case, as though defining

so we can write definition

or the same

in a more interesting way:

Is this generalizable? I do not know. I know an analogy in the case of , , , .

Direct sum

The notion of ordered pair mentioned above really enables us to deal with direct products. What about its dual concept? How to make direct sums in Combinatory Logic? And after we have implemented it, how can we see that it is really a dual concept of direct product?

A nice argument described on David Madore's Unlambda page gives us a continuation-passing-style solution. We expect reductions like

so we define

now we translate it from -calculus into combinatory logic:

Of course, we can recognize Haskell's direct sum construct

Either (Left, Right)

implemented in an analogous way.
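A hedged Haskell sketch, assuming the expected reductions are <math>\mathrm{left}\;x\;l\;r \to l\;x</math> and <math>\mathrm{right}\;y\;l\;r \to r\;y</math>, i.e. the continuation-passing form of Haskell's `either`. The point-free forms `left'` and `right'` were derived by hand here and may differ from the definitions intended above.

```haskell
-- Continuation-passing injections into a direct sum
left :: a -> (a -> r) -> (b -> r) -> r
left x l _ = l x

right :: b -> (a -> r) -> (b -> r) -> r
right y _ r = r y

-- The same injections from B = (.), C = flip, I = id, K = const:
--   right = B K (C I),   left = B (B K) (C I)
right' :: b -> a -> (b -> r) -> r
right' = (.) const (flip id)

left' :: a -> (a -> r) -> b -> r
left' = (.) ((.) const) (flip id)

-- Passing Left and Right as continuations recovers Haskell's Either
toEither :: ((a -> Either a b) -> (b -> Either a b) -> Either a b) -> Either a b
toEither s = s Left Right
```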

Maybe

Let us remember Haskell's maybe:

maybe :: a' -> (a -> a') -> Maybe a -> a'

maybe n j Nothing = n

maybe n j (Just x) = j x

thinking of

- n as nothing-continuation

- j as just-continuation

In a continuation passing style approach, if we want to implement something like the Maybe construct in -calculus, then we may expect the following reductions:

we know both of them well, one is just , and we remember the other too from the direct sum:

thus their definition is

where both and have a common definition.
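A hedged sketch in Haskell, assuming the reductions <math>\mathrm{nothing}\;n\;j \to n</math> and <math>\mathrm{just}\;x\;n\;j \to j\;x</math>: then nothing is K, and just has the same shape as the right injection of the direct sum, which B K (C I) implements. The names below are illustrative, and `toMaybe` exists only for inspection.

```haskell
-- nothing n j = n, i.e. the K combinator
nothing :: n -> j -> n
nothing = const

-- just x n j = j x, i.e. B K (C I)
just :: x -> n -> (x -> r) -> r
just = (.) const (flip id)

-- Convert to Haskell's Maybe by passing Nothing and Just as continuations
toMaybe :: (Maybe x -> (x -> Maybe x) -> Maybe x) -> Maybe x
toMaybe m = m Nothing Just
```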

Maybe2

Haskell:

module Maybe2 (Maybe2, maybe2, nothing2, just2) where

data Maybe2 a b = Nothing2 | Just2 a b

maybe2 :: maybe2ab' -> (a -> b -> maybe2ab') -> Maybe2 a b -> maybe2ab'

maybe2 nothing2Cont _ Nothing2 = nothing2Cont

maybe2 _ just2Cont (Just2 a b) = just2Cont a b

nothing2 :: Maybe2 a b

nothing2 = Nothing2

just2 :: a -> b -> Maybe2 a b

just2 = Just2

Expected reductions:

Other argument orders are possible, too, but they lead to more complicated solutions than the following:

Combinators:

Conjecture for generalisation:

with straightforward generalisations, e.g.

Catamorphisms for recursive datatypes

List

Let us define the concept of list by its catamorphism (see Haskell's foldr function):

a list (each concrete list) is a function taking two arguments

- a two-parameter function argument (cons-continuation)

- a zero-parameter function argument (nil-continuation)

and returns a value coming from a term consisting of applying cons-continuations and nil-continuations in the same shape as the corresponding list. E.g. in case of having defined

the expression

reduces to

But how to define and ? In -calculus, we should like to see the following reductions:

Let us think of the variables as denoting head, denoting tail, denoting cons-continuation, and denoting nil-continuation.

Thus, we could achieve this goal with the following definitions:

Using the formulating combinators described in Haskell B. Curry's Combinatory Logic I, we can translate these definitions into combinatory logic without any pain:

Of course we could use the two parameters in the opposite order, but I am not sure yet that it would provide an easier way.
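In Haskell the catamorphic encoding can be given a type with `RankNTypes`. This sketch assumes the reductions <math>\mathrm{cons}\;h\;t\;c\;n \to c\;h\;(t\;c\;n)</math> and <math>\mathrm{nil}\;c\;n \to n</math>, with the variables read as above (head, tail, cons-continuation, nil-continuation); `CList`, `fold`, `fromList` and `toList` are hypothetical names.

```haskell
{-# LANGUAGE RankNTypes #-}

-- A list IS its foldr: give it a cons-continuation and a nil-continuation.
newtype CList a = CList { fold :: forall r. (a -> r -> r) -> r -> r }

nil :: CList a
nil = CList (\_ n -> n)

cons :: a -> CList a -> CList a
cons h t = CList (\c n -> c h (fold t c n))

fromList :: [a] -> CList a
fromList = foldr cons nil

toList :: CList a -> [a]
toList l = fold l (:) []
```

Folding with string-building continuations shows that the result has the same shape as the list: `fold (fromList [1, 2]) (\h t -> "c " ++ show h ++ " (" ++ t ++ ")") "n"` gives `"c 1 (c 2 (n))"`.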

A little practice: let us define concat. In Haskell, we can do that by

concat = foldr (++) []

which corresponds in combinatory logic to reducing

Let us use the ordered pair (direct product) construct:

and if I use that nasty (see later)

Monads in Combinatory Logic?

Concrete monads

Maybe as a monad

return

Implementing the return monadic method for the Maybe monad is rather straightforward, both in Haskell and CL:

instance Monad Maybe where

return = Just

...

in Haskell and

in combinatory logic.

map

Haskell:

instance Functor Maybe where

fmap f = maybe Nothing (Just . f)

-calculus: Expected reductions:

Definition:

Combinatory logic: we expect the same reduction here too

let us get rid of one parameter:

now we have the definition:

bind

Haskell:

instance Monad Maybe where
    (>>=) p f = maybe Nothing f p

λ-calculus: we expect

achieved by the definition

In combinatory logic the above expected reduction

getting rid of the outermost parameter

yielding definition

and of course

But the other way (starting with a better chosen parameter order) is much better:

yielding the much simpler and more efficient definition:

We already know that  can be seen as a member of the scheme of tuples: the  case. As the tuple construction is a frequent guest in things like this (we shall meet it with lists and with other Maybe-operations like ), let us express the above definition with  denoted as :

hoping that this will enable some interesting generalizations in the future.

But why have we not made a braver generalization, expressing monadic bind from monadic join and map? Later, with the list monad, we shall see that it may be better to avoid this for the sake of deforestation. A perhaps similar problem will appear here: the problem of a superfluous .
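Under the same hypothetical `Opt` encoding, bind can be read directly off the Haskell definition via `maybe`: fold `p` with the encoded Nothing as nothing-continuation and with `f` itself as just-continuation. `halve` is an illustrative failing step, not part of the original text.

```haskell
{-# LANGUAGE RankNTypes #-}

newtype Opt a = Opt { runOpt :: forall r. r -> (a -> r) -> r }

nothing :: Opt a
nothing = Opt (\n _ -> n)

just :: a -> Opt a
just x = Opt (\_ j -> j x)

toMaybe :: Opt a -> Maybe a
toMaybe m = runOpt m Nothing Just

-- Direct reading of  maybe Nothing f p : fold p with the encoded
-- Nothing as nothing-continuation and f as just-continuation.
bindOpt :: Opt a -> (a -> Opt b) -> Opt b
bindOpt p f = runOpt p nothing f

-- Illustrative step that can fail: halve only even numbers.
halve :: Int -> Opt Int
halve x = if even x then just (x `div` 2) else nothing
```

Chaining works as expected: `just 8 `bindOpt` halve `bindOpt` halve` corresponds to `Just 2`, while starting from 6 fails at the second step.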

join

We should think of changing the architecture if we suspect that we could avoid the problem with a simpler construct.
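For comparison, here is a sketch of join under the same hypothetical `Opt` encoding. It folds the outer layer with the encoded Nothing and the identity. `bindViaJoin` (an illustrative name) recovers bind from join and map, the route the text chose not to take for bind.

```haskell
{-# LANGUAGE RankNTypes #-}

newtype Opt a = Opt { runOpt :: forall r. r -> (a -> r) -> r }

nothing :: Opt a
nothing = Opt (\n _ -> n)

just :: a -> Opt a
just x = Opt (\_ j -> j x)

toMaybe :: Opt a -> Maybe a
toMaybe m = runOpt m Nothing Just

mapOpt :: (a -> b) -> Opt a -> Opt b
mapOpt f m = Opt (\n j -> runOpt m n (j . f))

-- join folds the outer layer: an outer nothing gives nothing, and an
-- outer just hands back the inner value unchanged (id).
joinOpt :: Opt (Opt a) -> Opt a
joinOpt mm = runOpt mm nothing id

-- Bind recovered from join and map.
bindViaJoin :: Opt a -> (a -> Opt b) -> Opt b
bindViaJoin m f = joinOpt (mapOpt f m)
```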

The list as a monad

Let us think of our list-operations as implementing monadic methods of the list monad. We can express this by definitions too, e.g.

we could name

Now let us look at mapping a list, concatenating a list and binding a list. Mapping and binding have a common property: they yield nil for nil. I shall call these operations centred: their definitions would contain a subexpression. Thus I shall give a name to this subexpression:

Now let us define map and bind for lists:

Now we see it was worth defining a common . But to tell the truth, it may be a trap:  breaks a symmetry. We should always define the cons and nil parts of the foldr construct on the same level, always together. Modularization should point in this direction, and not run headlong into the T-junction of .
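A Haskell sketch of the centred idea, assuming the same hypothetical `List` encoding as before. `centred` is an illustrative name and only one possible reading of the named subexpression. It keeps the nil-continuation and rewrites only the cons-continuation, and both map and bind are built from it. It also exhibits the asymmetry mentioned above: `centred` fixes the nil part and lets only the cons part vary.

```haskell
{-# LANGUAGE RankNTypes #-}

newtype List a = List { runList :: forall r. (a -> r -> r) -> r -> r }

nil :: List a
nil = List (\_ n -> n)

cons :: a -> List a -> List a
cons x y = List (\c n -> c x (runList y c n))

toList :: List a -> [a]
toList xs = runList xs (:) []

-- Hypothetical helper: pass the nil-continuation n through unchanged
-- ("nil for nil") and let k rewrite only the cons-continuation c.
centred :: (forall r. (b -> r -> r) -> a -> r -> r) -> List a -> List b
centred k xs = List (\c n -> runList xs (k c) n)

-- map: the new cons-continuation first applies f to the head.
mapL :: (a -> b) -> List a -> List b
mapL f = centred (\c -> c . f)

-- bind: the new cons-continuation splices f x in front of the rest r.
bindL :: List a -> (a -> List b) -> List b
bindL xs f = centred (\c x r -> runList (f x) c r) xs
```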

Another remark: of course we can get the monadic bind for lists

But we used  here. How do we define it? It is surprisingly simple. Let us think how we would define it in Haskell with the foldr function, if it were not already defined as ++ in the Prelude:

In defining

(++) list1 list2

we can do it by foldr:

(++) [] list2 = list2

(++) (a : as) list2 = a : (++) as list2

thus

(++) list1 list2 = foldr (:) list2 list1

Let us see how we should reduce the corresponding expression in combinatory logic:

thus

Thus, we have defined monadic bind for lists. I shall call this the deforested bind for lists. Of course, we could define it another way too: by concat and map, which corresponds to defining monadic bind from monadic map and monadic join. But I think that way would force my CL interpreter to manage temporary lists, so I chose the deforested definition instead.
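The two ways of getting bind can be compared in the hypothetical Haskell encoding. `append` is a literal reading of `foldr (:) list2 list1`: fold the first list with `cons`, starting from the second list instead of `nil`. `bindViaJoin` goes through an intermediate list of lists built by `mapL`, while `bindDeforested` folds once and appends each `f x` directly. All names are illustrative.

```haskell
{-# LANGUAGE RankNTypes #-}

newtype List a = List { runList :: forall r. (a -> r -> r) -> r -> r }

nil :: List a
nil = List (\_ n -> n)

cons :: a -> List a -> List a
cons x y = List (\c n -> c x (runList y c n))

toList :: List a -> [a]
toList xs = runList xs (:) []

-- (++) list1 list2 = foldr (:) list2 list1, read in the encoding:
-- fold the first list with cons, starting from the second list.
append :: List a -> List a -> List a
append xs ys = runList xs cons ys

mapL :: (a -> b) -> List a -> List b
mapL f xs = runList xs (cons . f) nil

concatL :: List (List a) -> List a
concatL xss = runList xss append nil

-- Bind from join (concat) and map: builds a list of lists first.
bindViaJoin :: List a -> (a -> List b) -> List b
bindViaJoin xs f = concatL (mapL f xs)

-- Deforested bind: a single fold that appends each f x to the rest.
bindDeforested :: List a -> (a -> List b) -> List b
bindDeforested xs f = runList xs (\x r -> append (f x) r) nil
```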

Defining the other monadic operation, return, for lists is easy:

instance Monad [] where
    return = (: [])

in Haskell. We know that

(: [])

translates to

return = flip (:) []

so we can see how to do it in combinatory logic:
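In the same hypothetical Haskell encoding, `flip (:) []` becomes `flip cons nil`. The singleton can also be written directly as the list that applies the cons-continuation exactly once.

```haskell
{-# LANGUAGE RankNTypes #-}

newtype List a = List { runList :: forall r. (a -> r -> r) -> r -> r }

nil :: List a
nil = List (\_ n -> n)

cons :: a -> List a -> List a
cons x y = List (\c n -> c x (runList y c n))

toList :: List a -> [a]
toList xs = runList xs (:) []

-- Mirrors  return = flip (:) []  with the encoded cons and nil.
returnL :: a -> List a
returnL = flip cons nil

-- The same singleton written directly:  return x c n  ~>  c x n
returnL' :: a -> List a
returnL' x = List (\c n -> c x n)
```

Since `flip` is Curry's combinator C, this suggests a CL definition of return of the form C cons nil, with cons and nil as defined for lists earlier.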

How to do AOP with monads in Combinatory Logic?

We have defined the list monad in CL. Of course we can also make a monadic Maybe, binary tree, or Error monad with direct sum constructs...

But separation of concerns by monads is more than having a bunch of special monads. It requires other possibilities too, e.g. being able to use monads generically, in a way that can later become any concrete monad.

Of course my simple CL interpreter knows nothing about type classes or overloading. But there is a rather restricted and static possibility provided by the concept of definition itself:

and if later we want to change the binding mode named A, e.g. from a failure-handling Maybe-like one to a more general nondeterminism-handling list-like one, we can do that simply by replacing the definition

with the definition
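Here is a Haskell analogue of this static switching, as a sketch. The program is written only against the hypothetical names `failA`, `returnA` and `bindA` (plus the type synonym `A`), so the binding mode is fixed by these definitions alone. Haskell would normally use a type class here. The point of the sketch is that plain definitions already give this restricted kind of genericity.

```haskell
-- Hypothetical "binding mode" A: only these four definitions
-- mention the concrete monad (Maybe here).
type A = Maybe

failA :: A a
failA = Nothing

returnA :: a -> A a
returnA = Just

bindA :: A a -> (a -> A b) -> A b
bindA m f = maybe Nothing f m

-- Program code written only against failA, returnA and bindA.
safeDiv :: Int -> Int -> A Int
safeDiv _ 0 = failA
safeDiv x y = returnA (x `div` y)

divTwice :: Int -> Int -> Int -> A Int
divTwice x y z = safeDiv x y `bindA` \q -> safeDiv q z
```

Switching to the list-like, nondeterministic mode would mean replacing only the four definitions by `type A = []`, `failA = []`, `returnA = (: [])` and `bindA m f = concatMap f m`. `safeDiv` and `divTwice` stay unchanged.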

Self-replication, quines, reflective programming

Background

David Madore's Quines (self-replicating programs) and Shin-Cheng Mu's many writings, including a Haskell quine, give us wonderful insights into mathematical logic, programming and self-reference. See also Wikipedia's quine page and John Bethencourt's quine quine; the writings of Raymond Smullyan and of Hofstadter (also his current research project on a self-watching cognitive architecture); Manfred Eigen and Ruthild Winkler: Laws of the Game / How the Principles of Nature Govern Chance; Karl Sigmund's Games of Life; and reflective programming (see Reflection '96 and P. Maes & D. Nardi: Meta-Level Architectures and Reflection). See also G. J. Chaitin, especially his Understandable Papers on Incompleteness, in particular The Unknowable (the book is available on that page; just scroll down below the big colored photos). The book begins with the limits of mathematics (Gödel's undecidability, Turing's uncomputability, Chaitin's randomness), but (or is that exactly why?) it ends with writing on the future and beauty of science.

I must read Maturana and Varela's Autopoiesis and The Tree of Knowledge carefully before I can say whether their topics are related to this one. See

- table of contents in English

- annotations

- on Autopoiesis (in English)

- on The Tree of Knowledge (in Hungarian). To summarize this annotation by citing its main thought: intelligence is not a mere map of the outer world, but a continuous creation of worlds, and there are as many worlds as there are minds.

Self-replication

Quines: the idea of self-replication can be conveyed by the concept of a program which is able to print its own listing. But pure λ-calculus and combinatory logic do not know any notion of printing! We should like to capture the essence of self-replication without resorting to the imperative world.

Representation, quotation -- the DNA

Let us introduce the concept of representing combinatory logic terms. How could we do that? For example, by binary trees. The leaves should represent the base combinators, and the branches mean application.

And how should we represent combinatory logic terms -- in combinatory logic itself? The first thought could be that it is not a problem: each combinatory logic term could be represented by itself.

Sometimes this idea works. The huge power of higher-order functions lies exactly in being able to treat data as programs and vice versa. Sometimes this enables us to do things which could be done in other languages only by carefully designing a representation, a specific language.

But sometimes, representing CL terms by themselves is not enough. Let us imagine a tutoring program! Let the topic be combinatory logic, the language of implementation -- combinatory logic, too. How should the tutoring program ask the pupil questions like:

- Tell me if the following two expressions have the same normal form:

The problem is that our program is simply unable to distinguish between CL terms which have the same normal form (in fact, equivalence cannot be decided in general either). If we represent CL terms by themselves, we simply lose a lot of information, including any possibility to make distinctions between equivalent terms.

We see that this relates to the distinction between target language (object language) and metalanguage (see Imre Ruzsa, or Haskell B. Curry).

In this example, the distinction is:

- We deal with combinatory logic expressions because our program has to teach them: it is related to them just as a vocabulary program is related to English.

- But we also deal with them as programming-language expressions, because our program is implemented in them, just as VIM is related to C.

We said CL terms are ultimately trees. Let us represent them with trees, then -- but now let us think of trees not as term trees, but as datatypes which we must construct by hand, in the same way as we defined Maybes, direct sums, direct products and lists.

where let denote the representation of and that of
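Following the same pattern as for Maybe and lists, here is a hedged Haskell sketch of such a datatype tree, assuming S and K are the only base combinators. A represented term is its own fold: it takes a value for each kind of leaf and a combining function for application branches. `Rep`, `repS`, `repK`, `repApp`, `render` and `leaves` are hypothetical names.

```haskell
{-# LANGUAGE RankNTypes #-}

-- A CL term tree as its own catamorphism: given a value for an S leaf,
-- a value for a K leaf and a combining function for an application
-- branch, the tree folds itself.
newtype Rep = Rep { foldRep :: forall r. r -> r -> (r -> r -> r) -> r }

repS, repK :: Rep
repS = Rep (\s _ _ -> s)
repK = Rep (\_ k _ -> k)

-- Application branch: fold both subtrees, then combine the results.
repApp :: Rep -> Rep -> Rep
repApp f a = Rep (\s k app -> app (foldRep f s k app) (foldRep a s k app))

-- Two example consumers of the representation.
render :: Rep -> String
render t = foldRep t "S" "K" (\f a -> "(" ++ f ++ " " ++ a ++ ")")

leaves :: Rep -> Int
leaves t = foldRep t 1 1 (+)
```

For example, `render (repApp (repApp repS repK) repK)` gives `"((S K) K)"`. The representations of S K K and S K S render differently, although both terms act as the identity function. That is exactly the information a tutoring program needs to keep.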

Let us make a distinction between term trees and datatype trees. A Haskell example:

- many Haskell expressions can be regarded as term trees

- but only special Haskell expressions can be seen as datatype trees: those which are constructed from Branch and Leaf in an appropriate way

Similarly,

- all CL expressions can be regarded as term trees.

- but CL expressions which can be regarded as datatype trees must obey a great many constraints: they may consist only of , , ,  subexpressions, combined in an appropriate way.
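On the Haskell side, the distinction can be sketched with an ordinary datatype whose constructors play the roles of Leaf and Branch (`Term`, `S`, `K`, `App`, `step` and `normalize` are hypothetical names). Derived structural equality tells S K K and S K S apart, while a small normal-order reducer shows that both act as the identity. The reducer takes a step budget because CL reduction need not terminate.

```haskell
-- CL terms over the base combinators S and K, as an ordinary datatype:
-- leaves are combinators, branches (App) are applications.
data Term = S | K | App Term Term
  deriving (Eq, Show)

-- One leftmost-outermost reduction step, or Nothing if t is normal.
step :: Term -> Maybe Term
step (App (App K x) _) = Just x                                 -- K x y   ~> x
step (App (App (App S x) y) z) = Just (App (App x z) (App y z)) -- S x y z ~> x z (y z)
step (App f a) = case step f of
  Just f' -> Just (App f' a)
  Nothing -> App f <$> step a
step _ = Nothing

-- Reduce to normal form within a step budget; Nothing if it runs out.
normalize :: Int -> Term -> Maybe Term
normalize fuel t = case step t of
  Nothing -> Just t
  Just t'
    | fuel > 0  -> normalize (fuel - 1) t'
    | otherwise -> Nothing
```

For instance, `normalize 100 (App (App (App S K) K) K)` gives `Just K`, and so does the same expression with S K S in place of S K K, even though `App (App S K) K == App (App S K) S` is `False`.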