Difference between revisions of "Typeclassopedia"

''By [[User:Byorgey|Brent Yorgey]], byorgey@gmail.com''

''Originally published 12 March 2009 in [http://www.haskell.org/wikiupload/8/85/TMR-Issue13.pdf issue 13] of [http://themonadreader.wordpress.com/ the Monad.Reader]. Ported to the Haskell wiki in November 2011 by [[User:Geheimdienst|Geheimdienst]].''

* I finally figured out how to use [[Parsec]] with do-notation, and someone told me I should use something called <code>Applicative</code> instead. Um, what?
* Someone in the [[IRC channel|#haskell]] IRC channel used <code>(***)</code>, and when I asked Lambdabot to tell me its type, it printed out scary gobbledygook that didn’t even fit on one line! Then someone used <code>fmap fmap fmap</code> and my brain exploded.
* When I asked how to do something I thought was really complicated, people started typing things like <code>zip.ap fmap.(id &&& wtf)</code> and the scary thing is that they worked! Anyway, I think those people must actually be robots because there’s no way anyone could come up with that in two seconds off the top of their head.

This document can only be a starting point, since good intuition comes from hard work, [http://byorgey.wordpress.com/2009/01/12/abstraction-intuition-and-the-monad-tutorial-fallacy/ not from learning the right metaphor]. Anyone who reads and understands all of it will still have an arduous journey ahead—but sometimes a good starting point makes a big difference. |

This document can only be a starting point, since good intuition comes from hard work, [http://byorgey.wordpress.com/2009/01/12/abstraction-intuition-and-the-monad-tutorial-fallacy/ not from learning the right metaphor]. Anyone who reads and understands all of it will still have an arduous journey ahead—but sometimes a good starting point makes a big difference. |

||

| − | It should be noted that this is not a Haskell tutorial; it is assumed that the reader is already familiar with the basics of Haskell, including the standard |

+ | It should be noted that this is not a Haskell tutorial; it is assumed that the reader is already familiar with the basics of Haskell, including the standard [{{HackageDocs|base|Prelude}} <code>Prelude</code>], the type system, data types, and type classes. |

| − | The type classes we will be discussing and their interrelationships: |

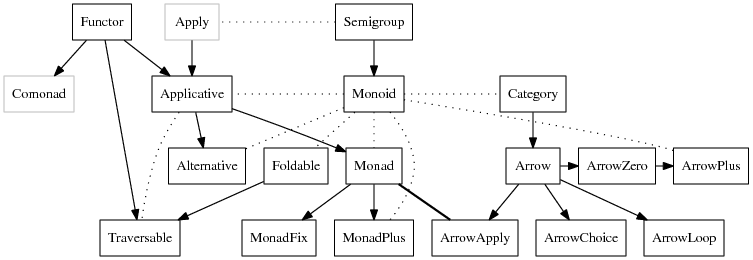

+ | The type classes we will be discussing and their interrelationships ([[:File:Dependencies.txt|source code for this graph can be found here]]): |

[[Image:Typeclassopedia-diagram.png]]

{{note|<code>Apply</code> can be found in the [http://hackage.haskell.org/package/semigroupoids <code>semigroupoids</code> package], and <code>Comonad</code> in the [http://hackage.haskell.org/package/comonad <code>comonad</code> package].}}

* <span style="border-bottom: 2px solid black">Solid arrows</span> point from the general to the specific; that is, if there is an arrow from <code>Foo</code> to <code>Bar</code> it means that every <code>Bar</code> is (or should be, or can be made into) a <code>Foo</code>.
* <span style="border-bottom: 2px dotted black">Dotted lines</span> indicate some other sort of relationship.
* <code>Monad</code> and <code>ArrowApply</code> are equivalent.
* <code>Apply</code> and <code>Comonad</code> are greyed out since they are not actually (yet?) in the standard Haskell libraries {{noteref}}.

One more note before we begin. The original spelling of “type class” is with two words, as evidenced by, for example, the [http://www.haskell.org/onlinereport/haskell2010/ Haskell 2010 Language Report], early papers on type classes like [http://citeseer.ist.psu.edu/viewdoc/summary?doi=10.1.1.103.5639 Type classes in Haskell] and [http://research.microsoft.com/en-us/um/people/simonpj/papers/type-class-design-space/ Type classes: exploring the design space], and [http://citeseer.ist.psu.edu/viewdoc/summary?doi=10.1.1.168.4008 Hudak et al.’s history of Haskell]. However, as often happens with two-word phrases that see a lot of use, it has started to show up as one word (“typeclass”) or, rarely, hyphenated (“type-class”). When wearing my prescriptivist hat, I prefer “type class”, but realize (after changing into my descriptivist hat) that there’s probably not much I can do about it.

[[Instances of List and Maybe]] illustrates these type classes with simple examples using List and Maybe. We now begin with the simplest type class of all: <code>Functor</code>.

=Functor=

The <code>Functor</code> class ([{{HackageDocs|base|Prelude}}#t:Functor haddock]) is the most basic and ubiquitous type class in the Haskell libraries. A simple intuition is that a <code>Functor</code> represents a “container” of some sort, along with the ability to apply a function uniformly to every element in the container. For example, a list is a container of elements, and we can apply a function to every element of a list, using <code>map</code>. As another example, a binary tree is also a container of elements, and it’s not hard to come up with a way to recursively apply a function to every element in a tree.
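
The binary-tree case can be sketched in a few lines. The <code>Tree</code> type, its constructors, and the helpers <code>treeElems</code> and <code>sampleTree</code> are hypothetical names invented for this illustration, not part of any library; the point is only that <code>fmap</code> visits every element while leaving the shape of the tree untouched.

```haskell
-- A hypothetical binary tree type, used only for illustration.
data Tree a = Leaf | Node (Tree a) a (Tree a)
  deriving (Show, Eq)

instance Functor Tree where
  -- Apply g to every stored element; Leaf/Node structure is unchanged.
  fmap _ Leaf         = Leaf
  fmap g (Node l x r) = Node (fmap g l) (g x) (fmap g r)

-- Hypothetical helper: collect the elements in left-to-right order.
treeElems :: Tree a -> [a]
treeElems Leaf         = []
treeElems (Node l x r) = treeElems l ++ [x] ++ treeElems r

-- A small example tree holding 1, 2 and 3.
sampleTree :: Tree Int
sampleTree = Node (Node Leaf 1 Leaf) 2 (Node Leaf 3 Leaf)
```

Here <code>treeElems (fmap (* 10) sampleTree)</code> evaluates to <code>[10,20,30]</code>, and the mapped tree has exactly the same arrangement of <code>Node</code>s and <code>Leaf</code>s as <code>sampleTree</code>.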

Another intuition is that a <code>Functor</code> represents some sort of “computational context”. This intuition is generally more useful, but is more difficult to explain, precisely because it is so general. Some examples later should help to clarify the <code>Functor</code>-as-context point of view.

<haskell>
class Functor f where
  fmap :: (a -> b) -> f a -> f b

  (<$) :: a -> f b -> f a
  (<$) = fmap . const
</haskell>

<code>Functor</code> is exported by the <code>Prelude</code>, so no special imports are needed to use it. Note that the <code>(<$)</code> operator is provided for convenience, with a default implementation in terms of <code>fmap</code>; it is included in the class just to give <code>Functor</code> instances the opportunity to provide a more efficient implementation than the default. To understand <code>Functor</code>, then, we really need to understand <code>fmap</code>.

First, the <code>f a</code> and <code>f b</code> in the type signature for <code>fmap</code> tell us that <code>f</code> isn’t a concrete type like <code>Int</code>; it is a sort of ''type function'' which takes another type as a parameter. More precisely, the ''kind'' of <code>f</code> must be <code>* -> *</code>. For example, <code>Maybe</code> is such a type with kind <code>* -> *</code>: <code>Maybe</code> is not a concrete type by itself (that is, there are no values of type <code>Maybe</code>), but requires another type as a parameter, like <code>Maybe Integer</code>. So it would not make sense to say <code>instance Functor Integer</code>, but it could make sense to say <code>instance Functor Maybe</code>.

Now look at the type of <code>fmap</code>: it takes any function from <code>a</code> to <code>b</code>, and a value of type <code>f a</code>, and outputs a value of type <code>f b</code>. From the container point of view, the intention is that <code>fmap</code> applies a function to each element of a container, without altering the structure of the container. From the context point of view, the intention is that <code>fmap</code> applies a function to a value without altering its context. Let’s look at a few specific examples.

Finally, we can understand <code>(<$)</code>: instead of applying a function to the values in a container/context, it simply replaces them with a given value. This is the same as applying a constant function, so <code>(<$)</code> can be implemented in terms of <code>fmap</code>.
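
A quick sketch of <code>(<$)</code> on a list and on <code>Maybe</code>, assuming only the <code>Prelude</code> (which exports <code>(<$)</code> in current versions of <code>base</code>); the binding names are made up for the example.

```haskell
-- (<$) keeps the structure but replaces every value with the given one.
listResult :: String
listResult = 'x' <$ "abc"                     -- "xxx"

-- The type of the replaced values need not match the new value's type.
maybeResult :: Maybe Int
maybeResult = 0 <$ Just 'q'                   -- Just 0

-- With no value inside, there is nothing to replace.
nothingResult :: Maybe Int
nothingResult = 0 <$ (Nothing :: Maybe Char)  -- Nothing

-- The class's default definition, (<$) = fmap . const, gives the same result.
viaFmap :: String
viaFmap = (fmap . const) 'x' "abc"            -- "xxx"
```

The length of the list and the <code>Just</code>/<code>Nothing</code> distinction survive unchanged; only the contents are swapped out.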

==Instances==

<haskell>
instance Functor [] where
  fmap :: (a -> b) -> [a] -> [b]
  fmap _ [] = []
  fmap g (x:xs) = g x : fmap g xs

instance Functor Maybe where
  fmap :: (a -> b) -> Maybe a -> Maybe b
  fmap _ Nothing = Nothing
  fmap g (Just a) = Just (g a)
</haskell>

As an aside, in idiomatic Haskell code you will often see the letter <code>f</code> used to stand for both an arbitrary <code>Functor</code> and an arbitrary function. In this document, <code>f</code> represents only <code>Functor</code>s, and <code>g</code> or <code>h</code> always represent functions, but you should be aware of the potential confusion. In practice, what <code>f</code> stands for should always be clear from the context, by noting whether it is part of a type or part of the code.

There are other <code>Functor</code> instances in the standard library as well:

* <code>Either e</code> is an instance of <code>Functor</code>; <code>Either e a</code> represents a container which can contain either a value of type <code>a</code>, or a value of type <code>e</code> (often representing some sort of error condition). It is similar to <code>Maybe</code> in that it represents possible failure, but it can carry some extra information about the failure as well.

</haskell>
</li>
<li>Give an example of a type of kind <code>* -> *</code> which cannot be made an instance of <code>Functor</code> (without using <code>undefined</code>).

</li>
<li>Is this statement true or false?

<haskell>
-- Evil Functor instance
instance Functor [] where
  fmap :: (a -> b) -> [a] -> [b]
  fmap _ [] = []
  fmap g (x:xs) = g x : g x : fmap g xs
</haskell>

Unlike some other type classes we will encounter, a given type has at most one valid instance of <code>Functor</code>. This [http://article.gmane.org/gmane.comp.lang.haskell.libraries/15384 can be proven] via the [http://homepages.inf.ed.ac.uk/wadler/topics/parametricity.html#free ''free theorem''] for the type of <code>fmap</code>. In fact, [http://byorgey.wordpress.com/2010/03/03/deriving-pleasure-from-ghc-6-12-1/ GHC can automatically derive] <code>Functor</code> instances for many data types.
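
As a small illustration of deriving, here is a sketch using GHC’s <code>DeriveFunctor</code> extension. The <code>Rose</code> and <code>Tagged</code> types are hypothetical, chosen only to show that the derived <code>fmap</code> recurses through nested structure (here, a list of subtrees) and maps over the ''last'' type parameter only.

```haskell
{-# LANGUAGE DeriveFunctor #-}

-- Hypothetical rose tree: a value plus a list of subtrees.
-- The derived fmap maps over the value and, via the list's fmap,
-- over every subtree.
data Rose a = Rose a [Rose a]
  deriving (Show, Eq, Functor)

-- Hypothetical tagged value: the derived fmap touches only the last
-- type parameter (a), never the tag (t).
data Tagged t a = Tagged t a
  deriving (Show, Eq, Functor)
```

With these definitions, <code>fmap (+ 1) (Rose 1 [Rose 2 []])</code> gives <code>Rose 2 [Rose 3 []]</code>, and <code>fmap show (Tagged "id" 5)</code> gives <code>Tagged "id" "5"</code>. Since a type has at most one lawful <code>Functor</code> instance, the derived one is the instance you would have written by hand.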

{{note|Actually, if <code>seq</code>/<code>undefined</code> are considered, it [http://stackoverflow.com/a/8323243/305559 is possible] to have an implementation which satisfies the first law but not the second. The rest of the comments in this section should be considered in a context where <code>seq</code> and <code>undefined</code> are excluded.}}

A [https://github.com/quchen/articles/blob/master/second_functor_law.md similar argument also shows] that any <code>Functor</code> instance satisfying the first law (<code>fmap id = id</code>) will automatically satisfy the second law as well. Practically, this means that only the first law needs to be checked (usually by a very straightforward induction) to ensure that a <code>Functor</code> instance is valid.{{noteref}}
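
Proving a law takes induction, but a quick test on sample values can catch mistakes early. The following sketch spot-checks both laws for the standard list and <code>Maybe</code> instances; <code>firstLaw</code>, <code>secondLaw</code>, and the sample lists are hypothetical helpers, and passing such a test is evidence, not a proof.

```haskell
-- Sample-based sanity checks for the two Functor laws (not a proof).

-- First law: fmap id = id
firstLaw :: (Functor f, Eq (f a)) => f a -> Bool
firstLaw x = fmap id x == x

-- Second law: fmap (g . h) = fmap g . fmap h
secondLaw :: (Functor f, Eq (f c)) => (b -> c) -> (a -> b) -> f a -> Bool
secondLaw g h x = fmap (g . h) x == (fmap g . fmap h) x

listSamples :: [[Int]]
listSamples = [[], [1], [1, 2, 3]]

maybeSamples :: [Maybe Int]
maybeSamples = [Nothing, Just 7]

-- True when both laws hold on every sample above.
lawsHoldOnSamples :: Bool
lawsHoldOnSamples =
  all firstLaw listSamples
    && all (secondLaw (+ 1) (* 2)) listSamples
    && all firstLaw maybeSamples
    && all (secondLaw show negate) maybeSamples
```

In practice a property-testing library such as QuickCheck is the usual way to generate many more samples; the hand-written lists here just keep the sketch dependency-free.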

{{Exercises|
# Although it is not possible for a <code>Functor</code> instance to satisfy the first <code>Functor</code> law but not the second (excluding <code>undefined</code>), the reverse is possible. Give an example of a (bogus) <code>Functor</code> instance which satisfies the second law but not the first.
# Which laws are violated by the evil <code>Functor</code> instance for list shown above: both laws, or the first law alone? Give specific counterexamples.
}}

Just like all other Haskell functions of “more than one parameter”, however, <code>fmap</code> is actually ''curried'': it does not really take two parameters, but takes a single parameter and returns a function. For emphasis, we can write <code>fmap</code>’s type with extra parentheses: <code>fmap :: (a -> b) -> (f a -> f b)</code>. Written in this form, it is apparent that <code>fmap</code> transforms a “normal” function (<code>g :: a -> b</code>) into one which operates over containers/contexts (<code>fmap g :: f a -> f b</code>). This transformation is often referred to as a ''lift''; <code>fmap</code> “lifts” a function from the “normal world” into the “<code>f</code> world”.
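
To see the lifting view in code, here is a sketch where partially applying <code>fmap</code> to an ordinary function yields a new function on containers; the names <code>incr</code>, <code>incrMaybe</code>, <code>incrList</code>, and <code>incrAny</code> are illustrative only.

```haskell
-- An ordinary function in the "normal world".
incr :: Int -> Int
incr = (+ 1)

-- fmap incr is the same function lifted into a particular functor...
incrMaybe :: Maybe Int -> Maybe Int
incrMaybe = fmap incr

incrList :: [Int] -> [Int]
incrList = fmap incr

-- ...or into any functor at all.
incrAny :: Functor f => f Int -> f Int
incrAny = fmap incr
```

Note that no container argument is ever mentioned: <code>fmap incr</code> is already a complete function, for example <code>incrMaybe (Just 4) == Just 5</code> and <code>incrList [1,2,3] == [2,3,4]</code>.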

==Utility functions==

There are a few more <code>Functor</code>-related functions which can be imported from the <code>Data.Functor</code> module.

* <code>(<$>)</code> is defined as a synonym for <code>fmap</code>. This enables a nice infix style that mirrors the <code>($)</code> operator for function application. For example, <code>f $ 3</code> applies the function <code>f</code> to 3, whereas <code>f <$> [1,2,3]</code> applies <code>f</code> to each member of the list.
* <code>($>) :: Functor f => f a -> b -> f b</code> is just <code>flip (<$)</code>, and can occasionally be useful. To keep them straight, you can remember that <code>(<$)</code> and <code>($>)</code> point towards the value that will be kept.
* <code>void :: Functor f => f a -> f ()</code> is a specialization of <code>(<$)</code>, that is, <code>void x = () <$ x</code>. This can be used in cases where a computation computes some value but the value should be ignored.
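
The utilities above can be tried side by side in a short sketch. It imports <code>($>)</code> and <code>void</code> from <code>Data.Functor</code> and relies on the <code>Prelude</code> for <code>(<$>)</code> and <code>(<$)</code>; the binding names are made up for illustration.

```haskell
import Data.Functor (($>), void)

-- (<$>) is fmap written infix, mirroring ($).
doubled :: [Int]
doubled = (* 2) <$> [1, 2, 3]          -- [2,4,6]

-- (<$) keeps the value on its left; ($>) keeps the value on its right.
left, right :: Maybe Char
left  = 'a' <$ Just True               -- Just 'a'
right = Just True $> 'b'               -- Just 'b'

-- void throws the results away but keeps the structure.
unitList :: [()]
unitList = void [10, 20, 30 :: Int]    -- [(),(),()]
```

In <code>IO</code>, <code>void</code> is handy for running an action for its effect while discarding a result you do not need.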

==Further reading==

=Applicative=

A somewhat newer addition to the pantheon of standard Haskell type classes, ''applicative functors'' represent an abstraction lying in between <code>Functor</code> and <code>Monad</code> in expressivity, first described by McBride and Paterson. The title of their classic paper, [http://www.soi.city.ac.uk/~ross/papers/Applicative.html Applicative Programming with Effects], gives a hint at the intended intuition behind the [{{HackageDocs|base|Control-Applicative}} <code>Applicative</code>] type class. It encapsulates certain sorts of “effectful” computations in a functionally pure way, and encourages an “applicative” programming style. Exactly what these things mean will be seen later.

==Definition==

Recall that <code>Functor</code> allows us to lift a “normal” function to a function on computational contexts. But <code>fmap</code> doesn’t allow us to apply a function which is itself in a context to a value in a context. <code>Applicative</code> gives us just such a tool, <code>(<*>)</code> (variously pronounced as "apply", "app", or "splat"). It also provides a method, <code>pure</code>, for embedding values in a default, “effect free” context. Here is the type class declaration for <code>Applicative</code>, as defined in <code>Control.Applicative</code>:

<haskell>
class Functor f => Applicative f where
  pure :: a -> f a
  infixl 4 <*>, *>, <*
  (<*>) :: f (a -> b) -> f a -> f b

  (*>) :: f a -> f b -> f b
  a1 *> a2 = (id <$ a1) <*> a2

  (<*) :: f a -> f b -> f a
  (<*) = liftA2 const
</haskell>

Note that every <code>Applicative</code> must also be a <code>Functor</code>. In fact, as we will see, <code>fmap</code> can be implemented using the <code>Applicative</code> methods, so every <code>Applicative</code> is a functor whether we like it or not; the <code>Functor</code> constraint forces us to be honest.

<code>(*>)</code> and <code>(<*)</code> come with default implementations, so instances need not define them; they are class methods only so that a particular instance of <code>Applicative</code> can provide more efficient implementations. For more on these operators, see the section on [[#Utility functions 2|Utility functions]] below.
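
A minimal sketch of the two core methods with <code>Maybe</code>, plus stand-alone copies of the default definitions from the class so they can be compared against the real operators. The names <code>thenDefault</code> and <code>beforeDefault</code> are hypothetical; <code>liftA2</code> is imported explicitly from <code>Control.Applicative</code> because older versions of the <code>Prelude</code> do not export it.

```haskell
import Control.Applicative (liftA2)

-- (<*>) applies a function that is itself inside a context.
applied :: Maybe Int
applied = Just (+ 3) <*> Just 4                  -- Just 7

-- If either side is Nothing, so is the result.
noFunction :: Maybe Int
noFunction = Nothing <*> Just (4 :: Int)         -- Nothing

-- pure puts a plain function into the default, effect-free context first.
viaPure :: Maybe Int
viaPure = pure (+ 3) <*> Just 4                  -- Just 7

-- Stand-alone copies of the class's default definitions (hypothetical
-- names), for comparison with the library's (*>) and (<*).
thenDefault :: Applicative f => f a -> f b -> f b
thenDefault a1 a2 = (id <$ a1) <*> a2

beforeDefault :: Applicative f => f a -> f b -> f a
beforeDefault = liftA2 const
```

For lists, <code>thenDefault [1,2] "ab"</code> and <code>[1,2] *> "ab"</code> both give <code>"abab"</code>: the first list contributes only its “effect” (two choices), never its values.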

{{note|Recall that <code>($)</code> is just function application: <code>f $ x {{=}} f x</code>.}}

{{note|See [{{HackageDocs|base|Control-Applicative}} haddock for Applicative] and [http://www.soi.city.ac.uk/~ross/papers/Applicative.html Applicative programming with effects]}}

Traditionally, there are four laws that <code>Applicative</code> instances should satisfy {{noteref}}. In some sense, they are all concerned with making sure that <code>pure</code> deserves its name:

* The identity law:<br /><haskell>pure id <*> v = v</haskell>
* Homomorphism:<br /><haskell>pure f <*> pure x = pure (f x)</haskell>Intuitively, applying a non-effectful function to a non-effectful argument in an effectful context is the same as just applying the function to the argument and then injecting the result into the context with <code>pure</code>.
* Interchange:<br /><haskell>u <*> pure y = pure ($ y) <*> u</haskell>Intuitively, this says that when evaluating the application of an effectful function to a pure argument, the order in which we evaluate the function and its argument doesn't matter.
* Composition:<br /><haskell>u <*> (v <*> w) = pure (.) <*> u <*> v <*> w</haskell>This one is the trickiest law to gain intuition for. In some sense it is expressing a sort of associativity property of <code>(<*>)</code>. The reader may wish to simply convince themselves that this law is type-correct.

Considered as left-to-right rewrite rules, the homomorphism, interchange, and composition laws actually constitute an algorithm for transforming any expression using <code>pure</code> and <code>(<*>)</code> into a canonical form with only a single use of <code>pure</code> at the very beginning and only left-nested occurrences of <code>(<*>)</code>. Composition allows reassociating <code>(<*>)</code>; interchange allows moving occurrences of <code>pure</code> leftwards; and homomorphism allows collapsing multiple adjacent occurrences of <code>pure</code> into one.
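
The four laws, and one step of the rewriting toward canonical form, can be spot-checked on concrete <code>Maybe</code> values. This is a sketch with hypothetical helper names and hand-picked samples; it checks instances of the laws, it does not prove them.

```haskell
-- Sample-based checks of the four Applicative laws for Maybe (not a proof).

identityLaw :: Maybe Int -> Bool
identityLaw v = (pure id <*> v) == v

homomorphismLaw :: (Int -> Int) -> Int -> Bool
homomorphismLaw f x = (pure f <*> pure x) == (pure (f x) :: Maybe Int)

interchangeLaw :: Maybe (Int -> Int) -> Int -> Bool
interchangeLaw u y = (u <*> pure y) == (pure ($ y) <*> u)

compositionLaw :: Maybe (Int -> Int) -> Maybe (Int -> Int) -> Maybe Int -> Bool
compositionLaw u v w = (u <*> (v <*> w)) == (pure (.) <*> u <*> v <*> w)

-- One rewrite toward canonical form: composition, then homomorphism twice,
-- turns pure f <*> (pure g <*> x) into pure (f . g) <*> x.
canonicalAgrees :: Maybe Int -> Bool
canonicalAgrees x = (pure f <*> (pure g <*> x)) == (pure (f . g) <*> x)
  where
    f = (+ 1)
    g = (* 2)

valueSamples :: [Maybe Int]
valueSamples = [Nothing, Just 3]

functionSamples :: [Maybe (Int -> Int)]
functionSamples = [Nothing, Just (+ 1), Just (* 2)]

allLawsHold :: Bool
allLawsHold =
  all identityLaw valueSamples
    && homomorphismLaw (* 5) 4
    && and [interchangeLaw u 10 | u <- functionSamples]
    && and [compositionLaw u v w | u <- functionSamples, v <- functionSamples, w <- valueSamples]
    && all canonicalAgrees valueSamples
```

Functions cannot be compared for equality, so each law is checked by applying both sides to sample arguments and comparing the resulting <code>Maybe Int</code> values.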

There is also a law specifying how <code>Applicative</code> should relate to <code>Functor</code>:

<haskell>
fmap g x = pure g <*> x
</haskell>

It says that mapping a pure function <code>g</code> over a context <code>x</code> is the same as first injecting <code>g</code> into a context with <code>pure</code>, and then applying it to <code>x</code> with <code>(<*>)</code>. In other words, we can decompose <code>fmap</code> into two more atomic operations: injection into a context, and application within a context. Since <code>(<$>)</code> is a synonym for <code>fmap</code>, the above law can also be expressed as:
<code>g <$> x = pure g <*> x</code>.
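
The law relating <code>Applicative</code> to <code>Functor</code> can likewise be spot-checked, and it also shows how <code>fmap</code> can be recovered from <code>pure</code> and <code>(<*>)</code> alone. The helper names here are hypothetical.

```haskell
-- fmap g x should agree with pure g <*> x; spot-check lists and Maybe.
fmapLawList :: (Int -> Int) -> [Int] -> Bool
fmapLawList g xs = (g <$> xs) == (pure g <*> xs)

fmapLawMaybe :: (Int -> Int) -> Maybe Int -> Bool
fmapLawMaybe g m = (g <$> m) == (pure g <*> m)

-- Hypothetical helper: fmap rebuilt from the Applicative methods alone.
fmapViaApplicative :: Applicative f => (a -> b) -> f a -> f b
fmapViaApplicative g x = pure g <*> x
```

For instance, <code>fmapViaApplicative show [1, 2]</code> gives <code>["1","2"]</code>, exactly what <code>fmap show [1, 2]</code> gives.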

{{Exercises|
# (Tricky) One might imagine a variant of the interchange law that says something about applying a pure function to an effectful argument. Using the above laws, prove that<haskell>pure f <*> x = pure (flip ($)) <*> x <*> pure f</haskell>
}}

==Instances==

instance Applicative ZipList where
  pure :: a -> ZipList a
  pure = undefined -- exercise

  (<*>) :: ZipList (a -> b) -> ZipList a -> ZipList b
  (ZipList gs) <*> (ZipList xs) = ZipList (zipWith ($) gs xs)
</haskell>

<haskell>
instance Applicative [] where
  pure :: a -> [a]
  pure x = [x]

  (<*>) :: [a -> b] -> [a] -> [b]
  gs <*> xs = [ g x | g <- gs, x <- xs ]
</haskell>
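
Comparing the two list-like instances on the same inputs makes the difference concrete. This sketch uses the <code>ZipList</code> newtype exported by <code>Control.Applicative</code>, and only its <code>(<*>)</code>, so as not to spoil the exercise; the binding names are illustrative.

```haskell
import Control.Applicative (ZipList (..))

-- The list instance applies every function to every argument,
-- with the function varying slowest.
allCombinations :: [Int]
allCombinations = [(+ 1), (* 2)] <*> [10, 20]                           -- [11,21,20,40]

-- ZipList pairs functions and arguments position by position instead.
pointwise :: [Int]
pointwise = getZipList (ZipList [(+ 1), (* 2)] <*> ZipList [10, 20])    -- [11,40]

-- The list instance's pure makes a singleton list.
singletonList :: [Int]
singletonList = pure 5                                                  -- [5]
```

The list instance models nondeterministic choice (all combinations), whereas <code>ZipList</code> models lockstep, element-wise application.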

x1 :: f a
x2 :: b
x3 :: f c
</haskell>

The double brackets are commonly known as “idiom brackets”, because they allow writing “idiomatic” function application, that is, function application that looks normal but has some special, non-standard meaning (determined by the particular instance of <code>Applicative</code> being used). Idiom brackets are not supported by GHC, but they are supported by the [http://personal.cis.strath.ac.uk/~conor/pub/she/ Strathclyde Haskell Enhancement], a preprocessor which (among many other things) translates idiom brackets into standard uses of <code>(<$>)</code> and <code>(<*>)</code>. This can result in much more readable code when making heavy use of <code>Applicative</code>.

In addition, as of GHC 8, the <code>ApplicativeDo</code> extension enables <code>g <$> x1 <*> x2 <*> ... <*> xn</code> to be written in a different style:

<haskell>
do v1 <- x1
   v2 <- x2
   ...
   vn <- xn
   pure (g v1 v2 ... vn)
</haskell>

See the Further Reading section below as well as the discussion of do-notation in the Monad section for more information.
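
A concrete instance of the <code>ApplicativeDo</code> style, as a sketch: <code>addThree</code> and <code>addThree'</code> are hypothetical names. Because no bound variable is used until the final <code>pure</code>, GHC can desugar the do-block with <code>(<$>)</code> and <code>(<*>)</code>, so an <code>Applicative</code> constraint is enough; without the extension the same block would demand <code>Monad f</code>.

```haskell
{-# LANGUAGE ApplicativeDo #-}

-- With ApplicativeDo, this do-block only needs Applicative f: the
-- statements are independent and the last one has the form pure E.
addThree :: Applicative f => f Int -> f Int -> f Int -> f Int
addThree x1 x2 x3 = do
  v1 <- x1
  v2 <- x2
  v3 <- x3
  pure (v1 + v2 + v3)

-- The same computation written directly with (<$>) and (<*>).
addThree' :: Applicative f => f Int -> f Int -> f Int -> f Int
addThree' x1 x2 x3 = (\v1 v2 v3 -> v1 + v2 + v3) <$> x1 <*> x2 <*> x3
```

Both definitions behave identically, e.g. <code>addThree (Just 1) (Just 2) (Just 3)</code> is <code>Just 6</code> and <code>addThree [1, 2] [10] [100]</code> is <code>[111,112]</code>.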

==Utility functions==

<code>Control.Applicative</code> provides several utility functions that work generically with any <code>Applicative</code> instance; the <code>when</code>, <code>unless</code>, and <code>guard</code> functions listed below are exported from <code>Control.Monad</code> instead.

||

| + | |||

| + | * <code>liftA :: Applicative f => (a -> b) -> f a -> f b</code>. This should be familiar; of course, it is the same as <code>fmap</code> (and hence also the same as <code>(<$>)</code>), but with a more restrictive type. This probably exists to provide a parallel to <code>liftA2</code> and <code>liftA3</code>, but there is no reason you should ever need to use it. |

||

| + | |||

| + | * <code>liftA2 :: Applicative f => (a -> b -> c) -> f a -> f b -> f c</code> lifts a 2-argument function to operate in the context of some <code>Applicative</code>. When <code>liftA2</code> is fully applied, as in <code>liftA2 f arg1 arg2</code>,it is typically better style to instead use <code>f <$> arg1 <*> arg2</code>. However, <code>liftA2</code> can be useful in situations where it is partially applied. For example, one could define a <code>Num</code> instance for <code>Maybe Integer</code> by defining <code>(+) = liftA2 (+)</code> and so on. |

||

| + | |||

| + | * There is a <code>liftA3</code> but no <code>liftAn</code> for larger <code>n</code>. |

||

| + | |||

| + | * <code>(*>) :: Applicative f => f a -> f b -> f b</code> sequences the effects of two <code>Applicative</code> computations, but discards the result of the first. For example, if <code>m1, m2 :: Maybe Int</code>, then <code>m1 *> m2</code> is <code>Nothing</code> whenever either <code>m1</code> or <code>m2</code> is <code>Nothing</code>; but if not, it will have the same value as <code>m2</code>. |

||

| + | |||

| + | * Likewise, <code>(<*) :: Applicative f => f a -> f b -> f a</code> sequences the effects of two computations, but keeps only the result of the first, discarding the result of the second. Just as with <code>(<$)</code> and <code>($>)</code>, to keep <code>(<*)</code> and <code>(*>)</code> straight, remember that they point towards the values that will be kept. |

||

| + | |||

| + | * <code>(<**>) :: Applicative f => f a -> f (a -> b) -> f b</code> is similar to <code>(<*>)</code>, but where the first computation produces value(s) which are provided as input to the function(s) produced by the second computation. Note this is not the same as <code>flip (<*>)</code>, because the effects are performed in the opposite order. This is possible to observe with any <code>Applicative</code> instance with non-commutative effects, such as the instance for lists: <code>(<**>) [1,2] [(+5),(*10)]</code> produces a different result than <code>(flip (<*>))</code> on the same arguments. |

||

| + | |||

| + | * <code>when :: Applicative f => Bool -> f () -> f ()</code> conditionally executes a computation, evaluating to its second argument if the test is <code>True</code>, and to <code>pure ()</code> if the test is <code>False</code>. |

||

| + | |||

| + | * <code>unless :: Applicative f => Bool -> f () -> f ()</code> is like <code>when</code>, but with the test negated. |

||

| + | |||

| + | * The <code>guard</code> function is for use with instances of <code>Alternative</code> (an extension of <code>Applicative</code> to incorporate the ideas of failure and choice), which is discussed in the [[#Failure_and_choice:_Alternative.2C_MonadPlus.2C_ArrowPlus|section on <code>Alternative</code> and friends]]. |
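The following sketch exercises several of these combinators on <code>Maybe</code>, lists, and <code>IO</code>. It can be loaded into GHCi, and the binding names (<code>addMaybe</code>, <code>keepRight</code>, <code>report</code> and so on) are made up for illustration.

<haskell>
import Control.Applicative (liftA2, (<**>))
import Control.Monad (unless, when)

-- liftA2, partially applied: addition lifted over Maybe.
-- The result is Nothing if either argument is Nothing.
addMaybe :: Maybe Integer -> Maybe Integer -> Maybe Integer
addMaybe = liftA2 (+)

-- (*>) and (<*) run both effects but keep only the result they point to.
keepRight, keepLeft, failed :: Maybe Int
keepRight = Just 1 *> Just 2    -- Just 2
keepLeft  = Just 1 <* Just 2    -- Just 1
failed    = Just 1 <* Nothing   -- Nothing: the discarded side's effect still counts

-- (<**>) versus flip (<*>): the same values, combined in a different
-- order, because the list effects are sequenced differently.
viaStarStar, viaFlip :: [Int]
viaStarStar = [1, 2] <**> [(+ 5), (* 10)]         -- [6,10,7,20]
viaFlip     = flip (<*>) [1, 2] [(+ 5), (* 10)]   -- [6,7,10,20]

-- when/unless: run an action only if a condition holds (or does not).
report :: Int -> IO ()
report n = do
  when (n < 0) (putStrLn "negative")
  unless (n < 0) (putStrLn "non-negative")
</haskell>

Calling <code>report 3</code> prints <code>non-negative</code>, and <code>report (-1)</code> prints <code>negative</code>.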

{{Exercises|
# Implement a function <haskell>sequenceAL :: Applicative f => [f a] -> f [a]</haskell>. There is a generalized version of this, <code>sequenceA</code>, which works for any <code>Traversable</code> (see the later section on Traversable), but implementing this version specialized to lists is a good exercise.
}}

==Alternative formulation==

An alternative, equivalent formulation of <code>Applicative</code> is given by

<haskell>
-- Hide the Prelude's (**), which is floating-point exponentiation,
-- so that the name can be reused here.
import Prelude hiding ((**))

class Functor f => Monoidal f where
  unit :: f ()
  (**) :: f a -> f b -> f (a,b)
</haskell>

{{note|In category-theory speak, we say <code>f</code> is a ''lax'' monoidal functor because there aren't necessarily functions in the other direction, like <code>f (a, b) -> (f a, f b)</code>.}}
Intuitively, this states that a <i>monoidal</i> functor{{noteref}} is one which has some sort of "default shape" (<code>unit</code>) and which supports some sort of "combining" operation (<code>(**)</code>). <code>pure</code> and <code>(<*>)</code> are equivalent in power to <code>unit</code> and <code>(**)</code> (see the Exercises below). More technically, the idea is that <code>f</code> preserves the "monoidal structure" given by the pairing constructor <code>(,)</code> and unit type <code>()</code>. This can be seen even more clearly if we rewrite the types of <code>unit</code> and <code>(**)</code> as
<haskell>
unit' :: () -> f ()
(**') :: (f a, f b) -> f (a, b)
</haskell>

Furthermore, to deserve the name "monoidal" (see the [[#Monoid|section on Monoids]]), instances of <code>Monoidal</code> ought to satisfy the following laws, which seem much more straightforward than the traditional <code>Applicative</code> laws:

{{note|In this and the following laws, <code>≅</code> refers to <i>isomorphism</i> rather than equality. In particular we consider <code>(x,()) ≅ x ≅ ((),x)</code> and <code>((x,y),z) ≅ (x,(y,z))</code>.}}
* Left identity{{noteref}}: <haskell>unit ** v ≅ v</haskell>
* Right identity: <haskell>u ** unit ≅ u</haskell>
* Associativity: <haskell>u ** (v ** w) ≅ (u ** v) ** w</haskell>

These turn out to be equivalent to the usual <code>Applicative</code> laws. In a category theory setting, one would also require a naturality law:

{{note|Here <code>g *** h {{=}} \(x,y) -> (g x, h y)</code>. See [[#Arrow|Arrows]].}}
* Naturality: <haskell>fmap (g *** h) (u ** v) = fmap g u ** fmap h v</haskell>

but in the context of Haskell, this is a free theorem: it follows from the polymorphic type of <code>(**)</code> alone.
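To make the laws concrete, here is a minimal sketch, assuming the <code>Monoidal</code> class exactly as declared above, with illustrative instances for <code>Maybe</code> and lists. The isomorphisms behind <code>≅</code> are spelled out as ordinary functions (<code>dropUnitL</code>, <code>dropUnitR</code> and <code>reassoc</code> are our own names), so each law becomes an equation that can be checked on particular values. Such spot checks illustrate the laws; they do not prove them.

<haskell>
{-# LANGUAGE FlexibleContexts #-}
-- Prelude's (**) is floating-point exponentiation; hide it to reuse the name.
import Prelude hiding ((**))

class Functor f => Monoidal f where
  unit :: f ()
  (**) :: f a -> f b -> f (a, b)

-- Illustrative instance for Maybe: the "default shape" is Just (),
-- and combining succeeds only if both sides are Just.
instance Monoidal Maybe where
  unit = Just ()
  Just a ** Just b = Just (a, b)
  _      ** _      = Nothing

-- Illustrative instance for lists: combining takes all pairs.
instance Monoidal [] where
  unit = [()]
  xs ** ys = [(x, y) | x <- xs, y <- ys]

-- The isomorphisms behind the laws, written as ordinary functions.
dropUnitL :: ((), a) -> a
dropUnitL = snd

dropUnitR :: (a, ()) -> a
dropUnitR = fst

reassoc :: (a, (b, c)) -> ((a, b), c)
reassoc (a, (b, c)) = ((a, b), c)

-- Each law becomes an equation once the isomorphism is applied with fmap.
leftIdentity :: (Monoidal f, Eq (f a)) => f a -> Bool
leftIdentity v = fmap dropUnitL (unit ** v) == v

rightIdentity :: (Monoidal f, Eq (f a)) => f a -> Bool
rightIdentity u = fmap dropUnitR (u ** unit) == u

associativity :: (Monoidal f, Eq (f ((a, b), c))) => f a -> f b -> f c -> Bool
associativity u v w = fmap reassoc (u ** (v ** w)) == (u ** v) ** w
</haskell>

For example, <code>associativity [1,2] "ab" [True,False]</code> compares two eight-element lists of nested pairs and returns <code>True</code>.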

Much of this section was taken from [http://blog.ezyang.com/2012/08/applicative-functors/ a blog post by Edward Z. Yang]; see his original post for more details.

{{Exercises|
# Implement <code>pure</code> and <code>(<*>)</code> in terms of <code>unit</code> and <code>(**)</code>, and vice versa.
# Are there any <code>Applicative</code> instances for which there are also functions <code>f () -> ()</code> and <code>f (a,b) -> (f a, f b)</code>, satisfying some "reasonable" laws?
# (Tricky) Prove that given your implementations from the first exercise, the usual <code>Applicative</code> laws and the <code>Monoidal</code> laws stated above are equivalent.
}}

==Further reading==

[http://www.soi.city.ac.uk/~ross/papers/Applicative.html McBride and Paterson’s original paper] is a treasure-trove of information and examples, as well as some perspectives on the connection between <code>Applicative</code> and category theory. Beginners will find it difficult to make it through the entire paper, but it is extremely well-motivated—even beginners will be able to glean something from reading as far as they are able.

{{note|Introduced by [http://conal.net/papers/simply-reactive/ an earlier paper] that has since been superseded by [http://conal.net/papers/push-pull-frp/ Push-pull functional reactive programming].}}
Conal Elliott has been one of the biggest proponents of <code>Applicative</code>. For example, the [http://conal.net/papers/functional-images/ Pan library for functional images] and the reactive library for functional reactive programming (FRP) {{noteref}} make key use of it; his blog also contains [http://conal.net/blog/tag/applicative-functor many examples of <code>Applicative</code> in action]. Building on the work of McBride and Paterson, Elliott also built the [[TypeCompose]] library, which embodies the observation (among others) that <code>Applicative</code> types are closed under composition; therefore, <code>Applicative</code> instances can often be automatically derived for complex types built out of simpler ones.

| Line 317: | Line 436: | ||

Although the [http://hackage.haskell.org/package/parsec Parsec parsing library] ([http://legacy.cs.uu.nl/daan/download/papers/parsec-paper.pdf paper]) was originally designed for use as a monad, in its most common use cases an <code>Applicative</code> instance can be used to great effect; [http://www.serpentine.com/blog/2008/02/06/the-basics-of-applicative-functors-put-to-practical-work/ Bryan O’Sullivan’s blog post] is a good starting point. If the extra power provided by <code>Monad</code> isn’t needed, it’s usually a good idea to use <code>Applicative</code> instead.

A couple of other nice examples of <code>Applicative</code> in action include the [http://web.archive.org/web/20090416111947/chrisdone.com/blog/html/2009-02-10-applicative-configfile-hsql.html ConfigFile and HSQL libraries] and the [http://groups.inf.ed.ac.uk/links/formlets/ formlets library].

Gershom Bazerman's [http://comonad.com/reader/2012/abstracting-with-applicatives/ post] contains many insights into applicatives.

The <code>ApplicativeDo</code> extension is described in [https://ghc.haskell.org/trac/ghc/wiki/ApplicativeDo this wiki page], and in more detail in [http://doi.org/10.1145/2976002.2976007 this Haskell Symposium paper].

=Monad=

| Line 324: | Line 447: | ||

* Haskell does, in fact, single out monads for special attention by making them the framework in which to construct I/O operations.
* Haskell also singles out monads for special attention by providing a special syntactic sugar for monadic expressions: the <code>do</code>-notation. (As of GHC 8.0, the <code>ApplicativeDo</code> extension allows <code>do</code>-notation to be used with <code>Applicative</code> as well, but the notation is still fundamentally related to monads.)
* <code>Monad</code> has been around longer than other abstract models of computation such as <code>Applicative</code> or <code>Arrow</code>.
* The more monad tutorials there are, the harder people think monads must be, and the more new monad tutorials are written by people who think they finally “get” monads (the [http://byorgey.wordpress.com/2009/01/12/abstraction-intuition-and-the-monad-tutorial-fallacy/ monad tutorial fallacy]).

| Line 333: | Line 456: | ||

==Definition==

As of GHC 7.10, [{{HackageDocs|base|Prelude}}#t:Monad <code>Monad</code>] is defined as:

<haskell>
class Applicative m => Monad m where
  return :: a -> m a
  (>>=)  :: m a -> (a -> m b) -> m b
  (>>)   :: m a -> m b -> m b
  m >> n = m >>= \_ -> n

  fail   :: String -> m a
</haskell>

(Prior to GHC 7.10, <code>Applicative</code> was not a superclass of <code>Monad</code>, for historical reasons.)

The <code>Monad</code> type class is exported by the <code>Prelude</code>, along with a few standard instances. However, many utility functions are found in [{{HackageDocs|base|Control-Monad}} <code>Control.Monad</code>].

Let’s examine the methods in the <code>Monad</code> class one by one. The type of <code>return</code> should look familiar; it’s the same as <code>pure</code>. Indeed, <code>return</code> ''is'' <code>pure</code>, but with an unfortunate name. (Unfortunate, since someone coming from an imperative programming background might think that <code>return</code> is like the C or Java keyword of the same name, when in fact the similarities are minimal.) For historical reasons, we still have both names, but they should always denote the same value (although this cannot be enforced). Likewise, <code>(>>)</code> should be the same as <code>(*>)</code> from <code>Applicative</code>. <code>return</code> and <code>(>>)</code> may eventually be removed from the <code>Monad</code> class; see the [https://ghc.haskell.org/trac/ghc/wiki/Proposal/MonadOfNoReturn Monad of No Return proposal].

We can see that <code>(>>)</code> is a specialized version of <code>(>>=)</code>, with a default implementation given. It is only included in the type class declaration so that specific instances of <code>Monad</code> can override the default implementation of <code>(>>)</code> with a more efficient one, if desired. Also, note that although <code>_ >> n = n</code> would be a type-correct implementation of <code>(>>)</code>, it would not correspond to the intended semantics: the intention is that <code>m >> n</code> ignores the ''result'' of <code>m</code>, but not its ''effects''.
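As a minimal sketch of the GHC 7.10 class arrangement, and of why <code>_ >> n = n</code> would be the wrong <code>(>>)</code>, consider a hypothetical <code>Logger</code> type that pairs a result with a list of log messages. It is a simplified stand-in for the <code>Writer</code> monad from the transformers/mtl packages; the names <code>Logger</code>, <code>tell</code>, <code>twoEntries</code> and <code>badThen</code> are made up here.

<haskell>
-- Hypothetical writer-style monad: a result paired with a log of messages.
newtype Logger a = Logger (a, [String])

runLogger :: Logger a -> (a, [String])
runLogger (Logger p) = p

-- Since GHC 7.10, a Monad instance also needs Functor and Applicative instances.
instance Functor Logger where
  fmap f (Logger (a, w)) = Logger (f a, w)

instance Applicative Logger where
  pure a = Logger (a, [])
  Logger (f, w1) <*> Logger (a, w2) = Logger (f a, w1 ++ w2)

instance Monad Logger where
  -- return defaults to pure, and (>>) has a default defined via (>>=),
  -- so only (>>=) needs to be written here.
  Logger (a, w1) >>= k =
    let Logger (b, w2) = k a
    in Logger (b, w1 ++ w2)

-- An action whose only interesting part is its effect: one log entry.
tell :: String -> Logger ()
tell msg = Logger ((), [msg])

-- (>>) discards the result of the first action, but keeps its effect.
twoEntries :: Logger Int
twoEntries = tell "first" >> tell "second" >> return 42

-- The type-correct but semantically wrong definition: it drops effects.
badThen :: Logger a -> Logger b -> Logger b
badThen _ n = n
</haskell>

Here <code>runLogger twoEntries</code> is <code>(42, ["first","second"])</code>, whereas <code>runLogger (badThen (tell "lost") (return 'x'))</code> is <code>('x', [])</code>: the log entry from the first action has silently disappeared.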

| Line 354: | Line 478: | ||

The <code>fail</code> function is an awful hack that has no place in the <code>Monad</code> class; more on this later.

The only really interesting thing to look at—and what makes <code>Monad</code> strictly more powerful than <code>Applicative</code>—is <code>(>>=)</code>, which is often called ''bind''.

We could spend a while talking about the intuition behind <code>(>>=)</code>—and we will. But first, let’s look at some examples.

| Line 380: | Line 499: | ||

<haskell>
instance Monad Maybe where
  -- The method signatures are shown for clarity; writing them inside an
  -- instance requires the InstanceSigs extension (enabled by GHC2021).
  return :: a -> Maybe a
  return = Just

  (>>=) :: Maybe a -> (a -> Maybe b) -> Maybe b
  (Just x) >>= g = g x
  Nothing  >>= _ = Nothing

| Line 427: | Line 549: | ||

Let’s look more closely at the type of <code>(>>=)</code>. The basic intuition is that it combines two computations into one larger computation. The first argument, <code>m a</code>, is the first computation. However, it would be boring if the second argument were just an <code>m b</code>; then there would be no way for the computations to interact with one another (actually, this is exactly the situation with <code>Applicative</code>). So, the second argument to <code>(>>=)</code> has type <code>a -> m b</code>: a function of this type, given a ''result'' of the first computation, can produce a second computation to be run. In other words, <code>x >>= k</code> is a computation which runs <code>x</code>, and then uses the result(s) of <code>x</code> to ''decide'' what computation to run second, using the output of the second computation as the result of the entire computation.

{{note|Actually, because Haskell allows general recursion, one can recursively construct ''infinite'' grammars, and hence <code>Applicative</code> (together with <code>Alternative</code>) is enough to parse any context-sensitive language with a finite alphabet. See [http://byorgey.wordpress.com/2012/01/05/parsing-context-sensitive-languages-with-applicative/ Parsing context-sensitive languages with Applicative].}}
Intuitively, it is this ability to use the output from previous computations to decide what computations to run next that makes <code>Monad</code> more powerful than <code>Applicative</code>. The structure of an <code>Applicative</code> computation is fixed, whereas the structure of a <code>Monad</code> computation can change based on intermediate results. This also means that parsers built using an <code>Applicative</code> interface can only parse context-free languages; in order to parse context-sensitive languages a <code>Monad</code> interface is needed.{{noteref}}
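A small sketch of this difference, using the standard list and <code>Maybe</code> instances (the names <code>dependent</code>, <code>fixedShape</code> and <code>chase</code> are made up for this example): with <code>(>>=)</code>, how many elements the result has, or whether a second lookup happens at all, depends on earlier results; with <code>(<$>)</code> and <code>(<*>)</code> the shape is fixed in advance.

<haskell>
-- Monad: each result n decides how many copies the next step produces,
-- so the length of the output depends on the values.
dependent :: [Int]
dependent = [1, 2, 3] >>= \n -> replicate n n       -- [1,2,2,3,3,3]

-- Applicative: always (length of first) * (length of second) results,
-- whatever the values are.
fixedShape :: [Int]
fixedShape = (+) <$> [1, 2, 3] <*> [10, 20]         -- [11,21,12,22,13,23]

-- Maybe: whether a second lookup happens, and which key it uses,
-- depends on the result of the first lookup.
chase :: [(String, String)] -> String -> Maybe String
chase table k = lookup k table >>= \k' -> lookup k' table
</haskell>

With <code>table = [("a","b"),("b","c")]</code>, <code>chase table "a"</code> is <code>Just "c"</code>, while <code>chase table "b"</code> is <code>Nothing</code> because the second lookup of <code>"c"</code> fails.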

To see the increased power of <code>Monad</code> from a different point of view, let’s see what happens if we try to implement <code>(>>=)</code> in terms of <code>fmap</code>, <code>pure</code>, and <code>(<*>)</code>. We are given a value <code>x</code> of type <code>m a</code>, and a function <code>k</code> of type <code>a -> m b</code>, so the only thing we can do is apply <code>k</code> to <code>x</code>. We can’t apply it directly, of course; we have to use <code>fmap</code> to lift it over the <code>m</code>. But what is the type of <code>fmap k</code>? Well, it’s <code>m a -> m (m b)</code>. So after we apply it to <code>x</code>, we are left with something of type <code>m (m b)</code>—but now we are stuck; what we really want is an <code>m b</code>, but there’s no way to get there from here. We can ''add'' <code>m</code>’s using <code>pure</code>, but we have no way to ''collapse'' multiple <code>m</code>’s into one.

{{note|1=You might hear some people claim that the definition in terms of <code>return</code>, <code>fmap</code>, and <code>join</code> is the “math definition” and the definition in terms of <code>return</code> and <code>(>>=)</code> is something specific to Haskell. In fact, both definitions were known in the mathematics community long before Haskell picked up monads.}}
This ability to collapse multiple <code>m</code>’s is exactly the ability provided by the function <code>join :: m (m a) -> m a</code>, and it should come as no surprise that an alternative definition of <code>Monad</code> can be given in terms of <code>join</code>:

| Line 440: | Line 563: | ||

</haskell>

In fact, the canonical definition of monads in category theory is in terms of <code>return</code>, <code>fmap</code>, and <code>join</code> (often called <math>\eta</math>, <math>T</math>, and <math>\mu</math> in the mathematical literature). Haskell uses an alternative formulation with <code>(>>=)</code> instead of <code>join</code> since it is more convenient to use{{noteref}}. However, sometimes it can be easier to think about <code>Monad</code> instances in terms of <code>join</code>, since it is a more “atomic” operation. (For example, <code>join</code> for the list monad is just <code>concat</code>.)
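A few concrete values of <code>join</code> (from <code>Control.Monad</code>) for some standard instances; the binding names below are our own, chosen for illustration.

<haskell>
import Control.Monad (join)

-- For lists, join is concat: it flattens one level of nesting.
flattened :: [Int]
flattened = join [[1, 2], [], [3]]       -- [1,2,3]

-- For Maybe, the result is a Just only if both layers are Just.
bothJust, innerNothing, outerNothing :: Maybe Int
bothJust     = join (Just (Just 3))      -- Just 3
innerNothing = join (Just Nothing)       -- Nothing
outerNothing = join Nothing              -- Nothing

-- For functions (the reader monad), join passes the same argument twice.
double :: Int -> Int
double = join (+)                        -- double 5 == 10
</haskell>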

{{Exercises| |

| Line 449: | Line 572: | ||

==Utility functions==

The [{{HackageDocs|base|Control-Monad}} <code>Control.Monad</code>] module provides a large number of convenient utility functions, all of which can be implemented in terms of the basic <code>Monad</code> operations (<code>return</code> and <code>(>>=)</code> in particular). We have already seen one of them, namely, <code>join</code>. We also mention some other noteworthy ones here; implementing these utility functions oneself is a good exercise. For a more detailed guide to these functions, with commentary and example code, see Henk-Jan van Tuyl’s [http://members.chello.nl/hjgtuyl/tourdemonad.html tour].

* <code>liftM :: Monad m => (a -> b) -> m a -> m b</code>. This should be familiar; of course, it is just <code>fmap</code>. The fact that we have both <code>fmap</code> and <code>liftM</code> is a consequence of the fact that the <code>Monad</code> type class did not require a <code>Functor</code> instance until GHC 7.10, even though mathematically speaking, every monad is a functor. If you are using GHC 7.10 or newer, you should avoid using <code>liftM</code> and just use <code>fmap</code> instead.

* <code>ap :: Monad m => m (a -> b) -> m a -> m b</code> should also be familiar: it is equivalent to <code>(<*>)</code>, justifying the claim that the <code>Monad</code> interface is strictly more powerful than <code>Applicative</code>. We can make any <code>Monad</code> into an instance of <code>Applicative</code> by setting <code>pure = return</code> and <code>(<*>) = ap</code>. (With GHC 7.10 or newer, the <code>Monad</code> instance must then define <code>return</code> itself; otherwise the default <code>return = pure</code> makes the two definitions refer to each other in an infinite loop.)

* <code>sequence :: Monad m => [m a] -> m [a]</code> takes a list of computations and combines them into one computation which collects a list of their results. It is again something of a historical accident that <code>sequence</code> has a <code>Monad</code> constraint, since it can be implemented using only <code>Applicative</code> (see the exercise at the end of the Utility Functions section for Applicative). Note that the actual type of <code>sequence</code> is more general, and works over any <code>Traversable</code> rather than just lists; see the [[#Traversable|section on <code>Traversable</code>]].

* <code>replicateM :: Monad m => Int -> m a -> m [a]</code> is simply a combination of [{{HackageDocs|base|Prelude}}#v:replicate <code>replicate</code>] and <code>sequence</code>.

* <code>mapM :: Monad m => (a -> m b) -> [a] -> m [b]</code> maps its first argument over the second, and <code>sequence</code>s the results. The <code>forM</code> function is just <code>mapM</code> with its arguments reversed; it is called <code>forM</code> since it models generalized <code>for</code> loops: the list <code>[a]</code> provides the loop indices, and the function <code>a -> m b</code> specifies the “body” of the loop for each index. Again, these functions actually work over any <code>Traversable</code>, not just lists, and they can also be defined in terms of <code>Applicative</code>, not <code>Monad</code>: the analogue of <code>mapM</code> for <code>Applicative</code> is called <code>traverse</code>.

* <code>(=<<) :: Monad m => (a -> m b) -> m a -> m b</code> is just <code>(>>=)</code> with its arguments reversed; sometimes this direction is more convenient since it corresponds more closely to function application.

* <code>(>=>) :: Monad m => (a -> m b) -> (b -> m c) -> a -> m c</code> is sort of like function composition, but with an extra <code>m</code> on the result type of each function, and the arguments swapped. We’ll have more to say about this operation later. There is also a flipped variant, <code>(<=<)</code>.

Many of these functions also have “underscored” variants, such as <code>sequence_</code> and <code>mapM_</code>; these variants throw away the results of the computations passed to them as arguments, using them only for their side effects.

Other monadic functions which are occasionally useful include <code>filterM</code>, <code>zipWithM</code>, <code>foldM</code>, and <code>forever</code>.
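The following sketch uses (rather than implements) several of these functions from <code>Control.Monad</code>, on <code>Maybe</code> and on lists. The helpers <code>safeRecip</code>, <code>safeSqrt</code>, <code>boundedSum</code> and the other binding names are hypothetical, made up for illustration.

<haskell>
import Control.Monad (filterM, foldM, forM, replicateM, (>=>))

-- Hypothetical helpers that may fail, used to show the utilities on Maybe.
safeRecip :: Double -> Maybe Double
safeRecip 0 = Nothing
safeRecip x = Just (1 / x)

safeSqrt :: Double -> Maybe Double
safeSqrt x
  | x < 0     = Nothing
  | otherwise = Just (sqrt x)

-- (>=>) composes two "effectful functions"; it fails if either step fails.
recipThenSqrt :: Double -> Maybe Double
recipThenSqrt = safeRecip >=> safeSqrt

-- mapM fails as a whole if any element fails.
allRecips :: [Double] -> Maybe [Double]
allRecips = mapM safeRecip

-- forM is mapM with its arguments flipped, so it reads like a loop.
squares :: Maybe [Int]
squares = forM [1, 2, 3] (\i -> Just (i * i))   -- Just [1,4,9]

-- foldM threads an accumulator through a monadic step: here a running
-- sum that is abandoned (Nothing) as soon as it exceeds the limit.
boundedSum :: Int -> [Int] -> Maybe Int
boundedSum limit = foldM step 0
  where
    step acc x = if acc + x > limit then Nothing else Just (acc + x)

-- replicateM in the list monad: all strings of length 2 over "ab".
pairsAB :: [String]
pairsAB = replicateM 2 "ab"                     -- ["aa","ab","ba","bb"]

-- filterM with a nondeterministic predicate: every sublist (the power set).
powerSet :: [a] -> [[a]]
powerSet = filterM (const [True, False])
</haskell>

For example, <code>recipThenSqrt 4</code> is <code>Just 0.5</code>, <code>boundedSum 5 [1,2,3]</code> is <code>Nothing</code>, and <code>powerSet [1,2]</code> is <code><nowiki>[[1,2],[1],[2],[]]</nowiki></code>.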

==Laws==

There are several laws that instances of <code>Monad</code> should satisfy. The standard presentation is:

<haskell>
return a >>= k = k a
m >>= return = m
m >>= (\x -> k x >>= h) = (m >>= k) >>= h

fmap f xs = xs >>= return . f = liftM f xs
</haskell>

The first and second laws express the fact that <code>return</code> behaves nicely: if we inject a value <code>a</code> into a monadic context with <code>return</code>, and then bind to <code>k</code>, it is the same as just applying <code>k</code> to <code>a</code> in the first place; if we bind a computation <code>m</code> to <code>return</code>, nothing changes. The third law essentially says that <code>(>>=)</code> is associative, sort of.

{{note|I like to pronounce this operator “fish”.}}

However, the presentation of the above laws, especially the third, is marred by the asymmetry of <code>(>>=)</code>. It’s hard to look at the laws and see what they’re really saying. I prefer a much more elegant version of the laws, which is formulated in terms of <code>(>=>)</code> {{noteref}}. Recall that <code>(>=>)</code> “composes” two functions of type <code>a -> m b</code> and <code>b -> m c</code>. You can think of something of type <code>a -> m b</code> (roughly) as a function from <code>a</code> to <code>b</code> which may also have some sort of effect in the context corresponding to <code>m</code>. <code>(>=>)</code> lets us compose these “effectful functions”, and we would like to know what properties <code>(>=>)</code> has. The monad laws reformulated in terms of <code>(>=>)</code> are:

<haskell>
return >=> g = g
g >=> return = g
(g >=> h) >=> k = g >=> (h >=> k)
</haskell>

{{note|As fans of category theory will note, these laws say precisely that functions of type <code>a -> m b</code> are the arrows of a category with <code>(>{{=}}>)</code> as composition! Indeed, this is known as the ''Kleisli category'' of the monad <code>m</code>. It will come up again when we discuss <code>Arrow</code>s.}}

Ah, much better! The laws simply state that <code>return</code> is the identity of <code>(>=>)</code>, and that <code>(>=>)</code> is associative {{noteref}}.
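
The laws can also be spot-checked on particular inputs. Here is a sketch using two made-up <code>Maybe</code>-valued functions, <code>halve</code> and <code>decr</code>. Checking a handful of inputs is evidence that an instance behaves, not a proof that it does.

<haskell>
import Control.Monad ((>=>))

-- Hypothetical effectful function: halve an even number, fail on odd ones.
halve :: Int -> Maybe Int
halve n
  | even n    = Just (n `div` 2)
  | otherwise = Nothing

-- Hypothetical effectful function: decrement a positive number, fail otherwise.
decr :: Int -> Maybe Int
decr n
  | n > 0     = Just (n - 1)
  | otherwise = Nothing

-- The three Kleisli-style laws, checked pointwise at a single input.
leftIdentity, rightIdentity, associativity :: Int -> Bool
leftIdentity  x = (return >=> halve) x == halve x
rightIdentity x = (halve >=> return) x == halve x
associativity x = ((halve >=> decr) >=> halve) x == (halve >=> (decr >=> halve)) x

main :: IO ()
main = print (and [ law x | law <- [leftIdentity, rightIdentity, associativity], x <- [-4 .. 20] ])
-- prints True
</haskell>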

There is also a formulation of the monad laws in terms of <code>fmap</code>, <code>return</code>, and <code>join</code>; for a discussion of this formulation, see the Haskell [http://en.wikibooks.org/wiki/Haskell/Category_theory wikibook page on category theory].
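
In that formulation, <code>m >>= k</code> corresponds to <code>join (fmap k m)</code>, and the laws read <code>join . return = id</code>, <code>join . fmap return = id</code> and <code>join . join = join . fmap join</code>. A minimal sketch relating the two presentations, with the <code>join</code> laws spot-checked on lists; <code>bindViaJoin</code> and <code>joinViaBind</code> are names made up here.

<haskell>
import Control.Monad (join)

-- (>>=) expressed with fmap and join.
bindViaJoin :: Monad m => m a -> (a -> m b) -> m b
bindViaJoin m k = join (fmap k m)

-- join expressed with (>>=).
joinViaBind :: Monad m => m (m a) -> m a
joinViaBind mm = mm >>= id

main :: IO ()
main = do
  print (joinViaBind [[1, 2], [3 :: Int]])             -- [1,2,3]
  print (join (Just (Just 'x')))                       -- Just 'x'
  print (bindViaJoin [1, 2, 3 :: Int] (\x -> [x, x]))  -- [1,1,2,2,3,3]
  -- The join laws, spot-checked on lists:
  let xss = [[[1, 2], [3]], [[4 :: Int]]]
  print (join (join xss) == join (fmap join xss))      -- True
  print (join (return [1, 2 :: Int]) == [1, 2])        -- True
  print (join (fmap return [1, 2 :: Int]) == [1, 2])   -- True
</haskell>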

{{Exercises|
# Given the definition <code>g >{{=}}> h {{=}} \x -> g x >>{{=}} h</code>, prove the equivalence of the above laws and the usual monad laws.
}}

==<code>do</code> notation==

<haskell>
do { x <- a
   ; b
   ; y <- c
   ; d
   }
</haskell>
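
As a sanity check on what such a block means, here is a standalone sketch in the <code>Maybe</code> monad that compares a <code>do</code> block of this shape with its hand-desugared chain of <code>(>>=)</code> and <code>(>>)</code>. The values chosen for <code>a</code>, <code>c</code> and <code>d</code> are made up, <code>d</code> is given access to <code>x</code> and <code>y</code> so the bindings are actually used, and <code>b</code> is passed in as a parameter so we can see what happens when it fails.

<haskell>
-- Made-up stand-ins for the computations a, c and d.
a, c :: Maybe Int
a = Just 3
c = Just 4

d :: Int -> Int -> Maybe Int
d x y = Just (x + y)

-- The do block, with b supplied as an argument.
withDo :: Maybe () -> Maybe Int
withDo b = do { x <- a
              ; b
              ; y <- c
              ; d x y
              }

-- The same computation written with (>>=) and (>>) directly.
withBinds :: Maybe () -> Maybe Int
withBinds b = a >>= \x -> b >> (c >>= \y -> d x y)

main :: IO ()
main = do
  print (withDo (Just ()), withBinds (Just ()))  -- (Just 7,Just 7)
  print (withDo Nothing, withBinds Nothing)      -- (Nothing,Nothing)
</haskell>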

</haskell>

but what happens if <code>foo</code> is an empty list? Well, remember that ugly <code>fail</code> function in the <code>Monad</code> type class declaration? That’s what happens. (Since GHC 8.8, <code>fail</code> has moved out of <code>Monad</code> into the separate <code>MonadFail</code> class, but the idea is the same.) See [http://www.haskell.org/onlinereport/exps.html#sect3.14 section 3.14 of the Haskell Report] for the full details. See also the discussion of <code>MonadPlus</code> and <code>MonadZero</code> in the [[#Other monoidal classes: Alternative, MonadPlus, ArrowPlus|section on other monoidal classes]].
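
Here is a small sketch of the two most common outcomes of a failed pattern match in a <code>do</code> block (the functions <code>headM</code> and <code>heads</code> are hypothetical): in <code>Maybe</code>, <code>fail</code> produces <code>Nothing</code>, while in the list monad it produces <code>[]</code>, so elements that do not match the pattern are simply skipped.

<haskell>
-- If the pattern (x:_) does not match, the desugared code calls fail,
-- which is Nothing for Maybe.
headM :: Maybe [Int] -> Maybe Int
headM foo = do
  (x:_) <- foo
  return x

-- In the list monad fail gives [], so empty inner lists are skipped.
heads :: [[Int]] -> [Int]
heads xss = do
  (x:_) <- xss
  return x

main :: IO ()
main = do
  print (headM (Just [1, 2, 3]))   -- Just 1
  print (headM (Just []))          -- Nothing
  print (heads [[1, 2], [], [3]])  -- [1,3]
</haskell>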

A final note on intuition: <code>do</code> notation plays very strongly to the “computational context” point of view rather than the “container” point of view, since the binding notation <code>x <- m</code> is suggestive of “extracting” a single <code>x</code> from <code>m</code> and doing something with it. But <code>m</code> may represent some sort of a container, such as a list or a tree; the meaning of <code>x <- m</code> is entirely dependent on the implementation of <code>(>>=)</code>. For example, if <code>m</code> is a list, <code>x <- m</code> actually means that <code>x</code> will take on each value from the list in turn.
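
For instance, in the list monad each bound variable ranges over every element of its list, so the following block (a made-up example) produces every combination, exactly like the corresponding list comprehension:

<haskell>
-- x takes on each value of [1, 2, 3] in turn;
-- for each x, y takes on each character of "ab".
pairs :: [(Int, Char)]
pairs = do
  x <- [1, 2, 3]
  y <- "ab"
  return (x, y)

main :: IO ()
main = do
  print pairs  -- [(1,'a'),(1,'b'),(2,'a'),(2,'b'),(3,'a'),(3,'b')]
  print (pairs == [ (x, y) | x <- [1, 2, 3], y <- "ab" ])  -- True
</haskell>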

Sometimes, the full power of <code>Monad</code> is not needed to desugar <code>do</code>-notation. For example,

<haskell>
do x <- foo1
   y <- foo2
   z <- foo3
   return (g x y z)
</haskell>

would normally be desugared to <code>foo1 >>= \x -> foo2 >>= \y -> foo3 >>= \z -> return (g x y z)</code>, but this is equivalent to <code>g <$> foo1 <*> foo2 <*> foo3</code>. With the <code>ApplicativeDo</code> extension enabled (as of GHC 8.0), GHC tries hard to desugar <code>do</code>-blocks using <code>Applicative</code> operations wherever possible. This can sometimes lead to efficiency gains, even for types which also have <code>Monad</code> instances: the subcomputations combined with <code>(<*>)</code> cannot depend on each other’s results, so a suitable instance may run them in parallel, whereas in a monadic bind later computations may depend on the results of earlier ones. For example, consider

<haskell>
g :: Int -> Int -> M Int

-- These could be expensive
bar, baz :: M Int

foo :: M Int
foo = do
  x <- bar
  y <- baz
  g x y
</haskell>

<code>foo</code> definitely depends on the <code>Monad</code> instance of <code>M</code>, since the effects generated by the whole computation may depend (via <code>g</code>) on the <code>Int</code> outputs of <code>bar</code> and <code>baz</code>. Nonetheless, with <code>ApplicativeDo</code> enabled, <code>foo</code> can be desugared as

<haskell>
join (g <$> bar <*> baz)
</haskell>

which may allow <code>bar</code> and <code>baz</code> to be computed in parallel, since they at least do not depend on each other.

The <code>ApplicativeDo</code> extension is described in [https://ghc.haskell.org/trac/ghc/wiki/ApplicativeDo this wiki page], and in more detail in [http://doi.org/10.1145/2976002.2976007 this Haskell Symposium paper].

==MonadFix==

The <code>MonadFix</code> class describes monads which support the special fixpoint operation <code>mfix :: (a -> m a) -> m a</code>, which allows the output of monadic computations to be defined via recursion. This is supported in GHC and Hugs by a special “recursive do” notation, <code>mdo</code> (in GHC, enabled by the <code>RecursiveDo</code> extension). For more information, see Levent Erkök’s thesis, [http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.15.1543&rep=rep1&type=pdf Value Recursion in Monadic Computations].
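
Here is a tiny sketch of value recursion with <code>mfix</code> and <code>mdo</code> in the <code>Maybe</code> monad, assuming GHC with the <code>RecursiveDo</code> extension. The function passed to <code>mfix</code> receives, lazily, the very result it is defining. The names <code>ones</code> and <code>ones'</code> are made up for the example.

<haskell>
{-# LANGUAGE RecursiveDo #-}
import Control.Monad.Fix (mfix)

-- mfix feeds the (lazily evaluated) result back into the function,
-- producing an infinite list of ones inside Just.
ones :: Maybe [Int]
ones = mfix (\xs -> Just (1 : xs))

-- The same definition using mdo, which GHC desugars into a call to mfix.
ones' :: Maybe [Int]
ones' = mdo
  xs <- Just (1 : xs)
  return xs

main :: IO ()
main = do
  print (fmap (take 5) ones)   -- Just [1,1,1,1,1]
  print (fmap (take 5) ones')  -- Just [1,1,1,1,1]
</haskell>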

==Further reading==

Philip Wadler was the first to propose using monads to structure functional programs. [http://homepages.inf.ed.ac.uk/wadler/topics/monads.html His paper] is still a readable introduction to the subject.

Much of the monad transformer library [http://hackage.haskell.org/package/mtl mtl], including the <code>Reader</code>, <code>Writer</code>, <code>State</code>, and other monads, as well as the monad transformer framework itself, was inspired by Mark Jones’s classic paper [http://web.cecs.pdx.edu/~mpj/pubs/springschool.html Functional Programming with Overloading and Higher-Order Polymorphism]. It’s still very much worth a read—and highly readable—after almost fifteen years.

{{note|1=
[[All About Monads]],
[http://www.haskell.org/haskellwiki/Monads_as_Containers Monads as containers],
[http://en.wikibooks.org/w/index.php?title=Haskell/Understanding_monads Understanding monads],
[[The Monadic Way]],
[http://blog.sigfpe.com/2006/08/you-could-have-invented-monads-and.html You Could Have Invented Monads! (And Maybe You Already Have.)],
[http://kawagner.blogspot.com/2007/02/understanding-monads-for-real.html Understanding Monads. For real.],
[http://www.randomhacks.net/articles/2007/03/12/monads-in-15-minutes Monads in 15 minutes: Backtracking and Maybe],