Difference between revisions of "User:Michiexile/MATH198/Lecture 5"

Michiexile (talk | contribs)

Line 234:

Dually, we get the ''coequalizer'' as the colimit of the equalizer diagram.

+ A coequalizer
+ [[Image:CoequalizerCone.png]]
+ has to fulfill that <math>i_Bf = i_A = i_Bg</math>. Thus, writing <math>q=i_B</math>, we get an object (actually, an epimorphism out of <math>B</math>) with a map such that <math>f</math> and <math>g</math> both hit things that <math>q</math> identifies.
+
+ So, assuming we work with sets, we find that what a coequalizer of two functions is, is a projection onto a quotient set, such that <math>i_A(x) = i_A(y)</math> exactly if <math>f(x) = f(y)</math>.

=====Pushout and pullback squares=====

Line 244 (new revision: Line 250):

# Prove that in a CCC, the composition <math>\lambda \circ ev</math> is <math>\lambda\circ ev = 1_{[A\to B]}: [A\to B] \to [A\to B]</math>.
# Prove that an equalizer is a monomorphism.
+ # Prove that a coequalizer is an epimorphism.
# What is the limit of a diagram of the shape of the category 2?
# * Implement a typed lambda calculus as an EDSL in Haskell.
Revision as of 22:00, 21 October 2009

IMPORTANT NOTE: THESE NOTES ARE STILL UNDER DEVELOPMENT. PLEASE WAIT UNTIL AFTER THE LECTURE WITH HANDING ANYTHING IN, OR TREATING THE NOTES AS READY TO READ.

Cartesian Closed Categories and typed lambda-calculus

A category is said to have pairwise products if for any objects <math>A, B</math>, there is a product object <math>A\times B</math>.

A category is said to have pairwise coproducts if for any objects <math>A, B</math>, there is a coproduct object <math>A+B</math>.

Recall when we talked about internal homs in Lecture 2. We can now define what we mean, formally, by the concept:

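Definition An object <math>[A\to B]</math> in a category <math>C</math> is an internal hom object or an exponential object (also written <math>B^A</math>) if it comes equipped with an arrow <math>ev: [A\to B]\times A\to B</math>, called the evaluation arrow, such that for any other arrow <math>f: X\times A\to B</math>, there is a unique arrow <math>\lambda f: X\to [A\to B]</math> such that the composite

<math>X\times A \xrightarrow{\lambda f\times 1_A} [A\to B]\times A \xrightarrow{ev} B</math>

is <math>f</math>.

The idea here is that with something in an exponential object, and something in the source of the arrows we imagine live inside the exponential, we can produce the evaluation of the arrow at the source to produce something in the target. Using global elements, this reasoning comes through in a more natural manner: given <math>f: 1\to [A\to B]</math> and <math>x: 1\to A</math> we can produce the global element <math>ev\circ\langle f, x\rangle: 1\to B</math>. Furthermore, we can always produce something in the exponential whenever we have something that looks as if it should be there.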
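As an illustration only, the universal property can be sketched in Haskell, with pairs standing in for products and function types for exponentials. The names ev, lambda, timesId, sample and composite are chosen for this sketch and are not part of the lecture.

```haskell
-- Evaluation arrow ev : [A -> B] x A -> B, with Haskell pairs as products.
ev :: (a -> b, a) -> b
ev (f, v) = f v

-- Transpose: turn f : X x A -> B into (lambda f) : X -> [A -> B].
lambda :: ((x, a) -> b) -> (x -> (a -> b))
lambda f = \w -> \v -> f (w, v)

-- The product map h x 1_A : X x A -> Y x A for an arrow h : X -> Y.
timesId :: (x -> y) -> (x, a) -> (y, a)
timesId h (w, v) = (h w, v)

-- A sample arrow X x A -> B with X = A = B = Int (hypothetical example).
sample :: (Int, Int) -> Int
sample (w, v) = 10 * w + v

-- The composite ev . (lambda f x 1_A), which the definition requires to equal f.
composite :: (Int, Int) -> Int
composite = ev . timesId (lambda sample)
```

Loading this into GHCi and comparing composite with sample on a few pairs gives a pointwise check of the defining equation; it does not, of course, prove uniqueness of the transpose.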

And with this we can define

Definition A category is a Cartesian Closed Category or a CCC if:

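- <math>C</math> has a terminal object <math>1</math>.

- Each pair of objects <math>A, B</math> has a product <math>A\times B</math> and projections <math>p_1: A\times B\to A</math>, <math>p_2: A\times B\to B</math>.

- For every pair of objects <math>A, B</math>, there is an exponential object <math>[A\to B]</math> with an evaluation map <math>ev: [A\to B]\times A\to B</math>.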

Currying

Note that the exponential as described here is exactly what we need in order to discuss the Haskell concept of multi-parameter functions. If we consider the type of a binary function in Haskell:

binFunction :: a -> a -> a

This function really lives in the Haskell type a -> (a -> a), and thus is an element in the repeated exponential object <math>[a\to[a\to a]]</math>. Evaluating once gives us a single-parameter function, the first parameter consumed by the first evaluation, and we can evaluate a second time, feeding in the second parameter to get an end result from the function.

On the other hand, we can feed in both values at once, and get

binFunction' :: (a,a) -> a

which lives in the exponential object <math>[a\times a\to a]</math>.

These are genuinely different objects, but they seem to do the same thing: consume two distinct values to produce a third value. The resolution of the difference lies, again, in a recognition from Set theory: there is an isomorphism

<math>Hom(S\times T, V) \cong Hom(S, Hom(T, V))</math>

which we can use as inspiration for an isomorphism valid in Cartesian Closed Categories.
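In Haskell, the two directions of this isomorphism are the Prelude functions curry and uncurry. The following sketch specializes the type a to Int and picks subtraction as the body purely for illustration; roundTrip is a name invented here.

```haskell
-- Curried form: an element of [a -> [a -> a]], here with a = Int.
binFunction :: Int -> Int -> Int
binFunction x y = x - y

-- Uncurried form: an element of [a x a -> a], obtained with Prelude's uncurry.
binFunction' :: (Int, Int) -> Int
binFunction' = uncurry binFunction

-- Currying the uncurried form should give back the original function.
roundTrip :: Int -> Int -> Int
roundTrip = curry binFunction'
```

Checking that roundTrip and binFunction agree on sample arguments illustrates one half of the isomorphism; the other half is the analogous check for uncurry . curry.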

Typed lambda-calculus

The lambda-calculus, and later the typed lambda-calculus both act as foundational bases for computer science, and computer programming in particular. The idea in both is that everything is a function, and we can reduce the act of programming to function application; which in turn can be analyzed using expression rewriting rules that encapsulate the act of computation in a sequence of formal rewrites.

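Definition A typed lambda-calculus is a formal theory with types, terms, variables and equations. Each term <math>a</math> has a type <math>A</math> associated to it, and we write <math>a: A</math> or <math>a\in A</math>. The system is subject to a sequence of rules:

- There is a type <math>1</math>. Hence, the empty lambda calculus is excluded.

- If <math>A, B</math> are types, then so are <math>A\times B</math> and <math>[A\to B]</math>. These are, initially, just additional symbols, not imbued with the associations we usually give the symbols used.

- There is a term <math>*: 1</math>. Hence, the lambda calculus without any terms is excluded.

- For each type <math>A</math>, there is an infinite (countable) supply of terms <math>x_A^i: A</math>, the variables of type <math>A</math>.

- If <math>a: A, b: B</math> are terms, then there is a term <math>(a,b): A\times B</math>.

- If <math>c: A\times B</math> then there are terms <math>proj_1(c): A, proj_2(c): B</math>.

- If <math>a: A</math> and <math>f: [A\to B]</math>, then there is a term <math>f a: B</math>.

- If <math>x: A</math> is a variable and <math>\phi(x): B</math> is a term, then there is a <math>\lambda_{x\in A}\phi(x): [A\to B]</math>. Note that here, <math>\phi(x)</math> is a meta-expression, meaning we have SOME lambda-calculus expression that may include the variable <math>x</math>.

- There is a relation <math>a =_X a'</math> for each set <math>X</math> of variables containing all the variables that occur freely in either <math>a</math> or <math>a'</math>. This relation is reflexive, symmetric and transitive. Recall that a variable is free in a term if it is not in the scope of a <math>\lambda</math>-expression naming that variable.

- If <math>a: 1</math> then <math>a =_{\{\}} *</math>. In other words, up to lambda-calculus equality, there is only one value of type <math>1</math>.

- If <math>X\subseteq Y</math>, then <math>a =_X a'</math> implies <math>a =_Y a'</math>. Binding more variables gives less freedom, not more, and thus cannot suddenly make equal expressions differ.

- <math>a =_X a'</math> implies <math>f a =_X f a'</math>.

- <math>f =_X f'</math> implies <math>f a =_X f' a</math>. So equality plays nice with function application.

- <math>\phi(x) =_{X\cup\{x\}} \phi'(x)</math> implies <math>\lambda_x\phi(x) =_X \lambda_x\phi'(x)</math>. Equality behaves well with respect to binding variables.

- <math>proj_1(a,b) =_X a</math>, <math>proj_2(a,b) =_X b</math>, <math>c =_X (proj_1(c), proj_2(c))</math> for all <math>a, b, c</math>.

- <math>\lambda_x\phi(x) a =_X \phi(a)</math> if <math>a</math> is substitutable for <math>x</math> in <math>\phi(x)</math> and <math>\phi(a)</math> is what we get by substituting each occurrence of <math>x</math> by <math>a</math> in <math>\phi(x)</math>. A term is substitutable for another if by performing the substitution, no occurrence of any variable in the term becomes bound.

- <math>\lambda_{x\in A}(f x) =_X f</math>, provided <math>x\notin X</math>.

- <math>\lambda_{x\in A}\phi(x) =_X \lambda_{x'\in A}\phi(x')</math> if <math>x'</math> is substitutable for <math>x</math> in <math>\phi(x)</math> and each variable is not free in the other expression.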
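The type and term formation rules above (not the equations) can be sketched as plain Haskell data types. This is a minimal illustration under assumptions of this sketch: variables are named by strings and carry their type, and the constructor names and typeOf are invented here. It is not the EDSL the homework asks for, since it neither uses Haskell's own type checker nor implements the equality relation.

```haskell
-- Types: the unit type 1, products A x B and exponentials [A -> B].
data Type = Unit | Prod Type Type | Arrow Type Type
  deriving (Eq, Show)

-- Terms, one constructor per term formation rule.
data Term
  = Star                 -- the term * : 1
  | Var String Type      -- a variable, tagged with its type
  | Pair Term Term       -- (a, b)
  | Proj1 Term           -- proj_1(c)
  | Proj2 Term           -- proj_2(c)
  | App Term Term        -- f a
  | Lam String Type Term -- lambda_{x in A} phi(x)
  deriving (Eq, Show)

-- Compute the type of a term, or Nothing if a formation rule is violated.
typeOf :: Term -> Maybe Type
typeOf Star = Just Unit
typeOf (Var _ t) = Just t
typeOf (Pair a b) = Prod <$> typeOf a <*> typeOf b
typeOf (Proj1 c) = case typeOf c of
  Just (Prod a _) -> Just a
  _ -> Nothing
typeOf (Proj2 c) = case typeOf c of
  Just (Prod _ b) -> Just b
  _ -> Nothing
typeOf (App f a) = case (typeOf f, typeOf a) of
  (Just (Arrow s t), Just s') | s == s' -> Just t
  _ -> Nothing
typeOf (Lam _ a body) = Arrow a <$> typeOf body

-- Example: the identity function on the unit type.
identityOnUnit :: Term
identityOnUnit = Lam "x" Unit (Var "x" Unit)
```

For instance, typeOf identityOnUnit yields the arrow type from Unit to Unit, while an ill-formed application such as App Star Star is rejected with Nothing.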

Note that <math>=_X</math> is just a symbol. The axioms above give it properties that work a lot like equality, but two lambda calculus-equal terms are not equal unless they are identical. However, <math>a =_X b</math> tells us that in any model of this lambda calculus - where terms, types, etc. are replaced with actual things (mathematical objects, say, or a programming language semantics embedding typed lambda calculus) - the things given by translating <math>a</math> and <math>b</math> into the model should end up being equal.

Any actual realization of typed lambda calculus is bound to have more rules and equalities than the ones listed here.

With these axioms in front of us, however, we can see how lambda calculus and Cartesian Closed Categories fit together: we can go back and forth between the two concepts in a natural manner:

Lambda to CCC

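Given a typed lambda calculus <math>L</math>, we can define a CCC <math>C(L)</math>. Its objects are the types of <math>L</math>. An arrow from <math>A</math> to <math>B</math> is an equivalence class (under <math>=_{\{x\}}</math>) of terms of type <math>B</math>, free in a single variable <math>x: A</math>.

We need the equivalence classes because for any variable <math>x: A</math>, we want <math>\lambda_x x</math> to be the global element of <math>[A\to A]</math> corresponding to the identity arrow. Hence, that variable must itself correspond to an identity arrow.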

And then the rules for the various constructions enumerated in the axioms correspond closely to what we need to prove the resulting category to be cartesian closed.

CCC to Lambda

To go in the other direction, starting out with a Cartesian Closed Category and finding a typed lambda calculus corresponding to it, we construct its internal language.

Given a CCC <math>C</math>, we can assume that we have chosen, somehow, one actual product for each finite set of factors. Thus, both all products and all projections are well defined entities, with no remaining choice to determine them.

The types of the internal language <math>L(C)</math> are just the objects of <math>C</math>. The existence of products, exponentials and terminal object covers the first two rules. We can assume the existence of variables for each type, and the remaining axioms correspond to definition and behaviour of the terms available.

Using the properties of a CCC, it is at this point possible to prove a resulting equivalence of categories <math>C(L(C)) \simeq C</math>, and similarly, with suitable definitions for what it means for formal languages to be equivalent, one can also prove for a typed lambda-calculus <math>L</math> that <math>L(C(L)) \simeq L</math>.

More on this subject can be found in:

- Lambek & Scott: Aspects of higher order categorical logic and Introduction to higher order categorical logic

More importantly, the advantage of stating the <math>\lambda</math>-calculus in terms of a CCC instead of in terms of terms and rewriting rules is that you can escape worrying about variable clashes, alpha reductions and composability - the categorical translation ignores, at least superficially, the variables, replaces terms with morphisms that have equality built in, and provides associative composition for free.

At this point, I'd recommend reading more on Wikipedia [1] and [2], as well as in Lambek & Scott: Introduction to Higher Order Categorical Logic. The book by Lambek & Scott goes into great depth on these issues, but may be less than friendly to a novice.

Limits and colimits

One design pattern, as it were, that we have seen occur over and over in the definitions we've seen so far is for there to be some object, such that for every other object around, certain morphisms have unique existence.

We saw it in terminal and initial objects, where there's a unique map from or to every other object. And in products/coproducts, where a well-behaved map capturing any pair of maps has unique existence. And finally, above, in the CCC characterization of the internal hom, we had a similar uniqueness requirement for the lambda map.

One thing we can notice is that the isomorphism theorems for all these cases look very similar to each other: in each isomorphism proof, we produce the uniquely existing morphisms, and prove that their uniqueness and their other properties force the maps to really be isomorphisms.

Now, category theory has a philosophy slightly similar to design patterns - if we see something happening over and over, we'll want to generalize it. And there are generalizations available for these!

Diagrams, cones and limits

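Definition A diagram <math>D</math> of the shape of an index category <math>J</math> (often finite or countable), in a category <math>C</math> is just a functor <math>D: J\to C</math>. Objects in <math>J</math> will be denoted by <math>i, j, k, \dots</math> and their images in <math>C</math> by <math>D_i, D_j, D_k, \dots</math>.

This underlines that when we talk about diagrams, we tend to think of them less as just functors, and more as their images - the important part of a diagram <math>D</math> is the objects and their layout in <math>C</math>, and not the process of going to <math>C</math> from <math>J</math>.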

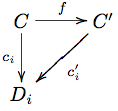

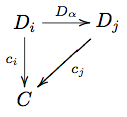

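Definition A cone over a diagram <math>D</math> in a category <math>C</math> is some object <math>X</math> equipped with a family <math>x_i: X\to D_i</math> of arrows, one for each object <math>i</math> in <math>J</math>, such that for each arrow <math>g: i\to j</math> in <math>J</math>, the following diagram

commutes, or in equations, <math>D(g)x_i = x_j</math>.

A morphism <math>f: (X, x_i)\to (Y, y_i)</math> of cones is an arrow <math>f: X\to Y</math> such that each triangle

commutes, or in equations, such that <math>x_i = y_i f</math>.

This defines a category of cones, that we shall denote by <math>Cone(D)</math>. And we define, hereby:

Definition The limit of a diagram <math>D</math> in a category <math>C</math> is a terminal object in <math>Cone(D)</math>. We often denote a limit by

<math>p_i: \lim_{\leftarrow} D\to D_i</math>

so that the map from the limit object <math>\lim_{\leftarrow} D</math> to one of the diagram objects <math>D_i</math> is denoted by <math>p_i</math>.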

The limit being terminal in the category of cones nails down once and for all the uniqueness of any map into it, and the isomorphism of any two terminal objects carries over to a proof once and for all for the limit case.

Specifically, since the morphisms of cones are morphisms in <math>C</math>, and composition is carried straight over, proving a map is an isomorphism in the cone category implies it is one in the target category as well.

Definition A category has all (finite) limits if all diagrams (of finite shape) have limit objects defined for them.

Limits we've already seen

The terminal object of a category is the limit object of an empty diagram. Indeed, it is an object, with no specified maps to any other objects, such that every other object that also maps to the same empty set of objects - which is to say all other objects - has a uniquely determined map to the limit object.

The product of some set of objects is the limit object of the diagram containing all these objects and no arrows; a diagram of the shape of a discrete category. The condition here becomes the requirement of maps to all factors such that any other cone factors through these maps.
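For two factors, the factorization of a cone through the product can be sketched in Haskell, treating the pair type as the product. The names factor, lenLeg and revLeg are hypothetical and chosen only for this example.

```haskell
-- Given a cone (f, g) with apex x over the discrete diagram {a, b},
-- the map into the product that both legs factor through.
factor :: (x -> a) -> (x -> b) -> x -> (a, b)
factor f g v = (f v, g v)

-- Two sample legs out of String (hypothetical example cone).
lenLeg :: String -> Int
lenLeg = length

revLeg :: String -> String
revLeg = reverse
```

The cone condition then reads fst . factor lenLeg revLeg = lenLeg and snd . factor lenLeg revLeg = revLeg, which can be checked pointwise on sample strings.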

To express the exponential as a limit, we need to go to a different category than the one we started in. Take the category with objects given by morphisms <math>X\times A\to B</math> for fixed objects <math>A, B</math>, and morphisms given by morphisms <math>X\to Y</math> commuting with the 'objects' they run between and fixing <math>A</math>. The exponential is a terminal object in this category.

Adding further arrows to diagrams amounts to adding further conditions on the products, as the maps from the product to the diagram objects need to factor through any arrows present in the diagram.

These added relations, however, are exactly what trips things up in Haskell. The idealized Haskell category does not have even all finite limits. At the core of the issue here is the lack of dependent types: there is no way for the type system to guarantee equations, and hence only the trivial limits - the products - can be guaranteed by the Haskell type checker.

In order to get that kind of guarantee, the type checker would need an implementation of dependent types, something that can be simulated in several ways, but is not (yet) an actual part of Haskell. Other languages, however, cover this - most notably Epigram, Agda and Cayenne - the last of which is even more strongly influenced by constructive type theory and category theory than Haskell is.

The kind of equations that show up in a limit, however, could be thought of as invariants for the type - and thus something that can be tested for. The resulting equations can be plugged into a testing framework - such as QuickCheck to verify that the invariants hold under the functions applied.
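As a rough sketch of this idea, take the lists equalized by id and sort, that is, the sorted lists, and test that an operation keeps us inside that equalizer. All names below are invented for this example, and the fixed list of samples is a hand-rolled stand-in for a testing framework; with QuickCheck installed, quickCheck prop_addPreserves would run the same property on random inputs.

```haskell
import Data.List (insert, sort)

-- The equation carving out the "type": lists on which id and sort agree.
invariant :: [Int] -> Bool
invariant xs = sort xs == xs

-- An operation that is meant to stay inside the equalizer.
addElement :: Int -> [Int] -> [Int]
addElement = insert

-- Property: if the invariant holds before, it holds after.
prop_addPreserves :: Int -> [Int] -> Bool
prop_addPreserves x xs = not (invariant xs) || invariant (addElement x xs)

-- Check the property on a few fixed samples (assumption: no QuickCheck here).
checkSamples :: Bool
checkSamples =
  and [prop_addPreserves x xs | x <- [-1, 0, 5], xs <- [[], [0], [1, 3, 7], [3, 1]]]
```

The type checker sees only [Int] throughout; the equation is enforced by testing, not by typing, which is exactly the limitation described above.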

Colimits

The dual concept to a limit is defined using the dual to the cones:

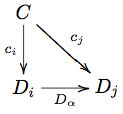

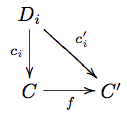

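Definition A cocone over a diagram <math>D: J\to C</math> is an object <math>X</math> with arrows <math>x_i: D_i\to X</math> such that for each arrow <math>g: i\to j</math> in <math>J</math>, the following diagram

commutes, or in equations, such that <math>x_j D(g) = x_i</math>.

A morphism <math>f: (X, x_i)\to (Y, y_i)</math> of cocones is an arrow <math>f: X\to Y</math> such that each triangle

commutes, or in equations, such that <math>y_i = f x_i</math>.

Just as with the category of cones, this yields a category of cocones, that we denote by <math>Cocone(D)</math>, and with this we define:

Definition The colimit of a diagram <math>D: J\to C</math> is an initial object in <math>Cocone(D)</math>.

We denote the colimit by

<math>i_i: D_i\to \lim_{\rightarrow} D</math>

so that the map from one of the diagram objects <math>D_i</math> to the colimit object <math>\lim_{\rightarrow} D</math> is denoted by <math>i_i</math>.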

Again, the isomorphism results for coproducts and initial objects follow from that for the colimit, and the same proof ends up working for all colimits.

And again, we say that a category has (finite) colimits if every (finite) diagram admits a colimit.

Colimits we've already seen

The initial object is the colimit of the empty diagram.

The coproduct is the colimit of the discrete diagram.

For both of these, the argument is almost identical to the one in the limits section above.
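For the coproduct of two objects, the factorization of a cocone can be sketched in Haskell with Either as the coproduct and the Prelude function either as the induced map. The names copair, legA and legB are hypothetical and only serve this example.

```haskell
-- Given a cocone (f, g) with apex x under the discrete diagram {a, b},
-- the induced map out of the coproduct; this is Prelude's either.
copair :: (a -> x) -> (b -> x) -> Either a b -> x
copair = either

-- Two sample legs into String (hypothetical example cocone).
legA :: Int -> String
legA n = "int " ++ show n

legB :: Bool -> String
legB b = "bool " ++ show b
```

The cocone condition reads copair legA legB . Left = legA and copair legA legB . Right = legB, dual to the equations for the product.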

Useful limits and colimits

With the tools of limits and colimits at hand, we can start using these to introduce more category theoretical constructions - and some of these turn out to correspond to things we've seen in other areas.

Possibly among the most important are the equalizers and coequalizers (with kernel (nullspace) and images as special cases), and the pullbacks and pushouts (with which we can make explicit the idea of inverse images of functions).

One useful theorem to know about is:

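Theorem The following are equivalent for a category <math>C</math>:

- <math>C</math> has all finite limits.

- <math>C</math> has all finite products and all equalizers.

- <math>C</math> has all pullbacks and a terminal object.

Also, the following dual statements are equivalent:

- <math>C</math> has all finite colimits.

- <math>C</math> has all finite coproducts and all coequalizers.

- <math>C</math> has all pushouts and an initial object.

For the first two statements in each list, we can replace finite with any other cardinality in every place it occurs, and we will still get a valid equivalence. The third statements do not scale in this way: binary pullbacks (or pushouts) together with a terminal (or initial) object only give the finite case.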

Equalizer, coequalizer

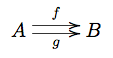

Consider the equalizer diagram:

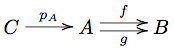

A limit over this diagram is an object <math>E</math> and arrows <math>p_A: E\to A</math>, <math>p_B: E\to B</math> to all diagram objects. The commutativity conditions for the arrows defined force for us <math>fp_A = p_B = gp_A</math>, and thus, keeping this enforced equation in mind, we can summarize the cone diagram as:

<math>E \xrightarrow{p_A} A \underset{g}{\overset{f}{\rightrightarrows}} B</math>

Now, the limit condition tells us that this is the least restrictive way we can map into <math>A</math> with some map <math>p</math> such that <math>fp = gp</math>, in that every other map into <math>A</math> satisfying this equation will factor through this one.

As usual, it is helpful to consider the situation in Set to make sense of any categorical definition: and the situation there is helped by the generalized element viewpoint: the limit object <math>E</math> is one representative of a subobject of <math>A</math> that for the case of Set contains all <math>x\in A</math> such that <math>f(x) = g(x)</math>.

Hence the word we use for this construction: the limit of the diagram above is the equalizer of <math>f, g</math>. It captures the idea of a maximal subset unable to distinguish two given functions, and it introduces a categorical way to define things by equations we require them to respect.
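For finite sets represented as Haskell lists, the equalizer in Set can be sketched as a filter. The function name equalizer and the two sample functions are assumptions of this sketch; the inclusion of the resulting list into the original one plays the role of the map into <math>A</math>.

```haskell
-- The subset of the given carrier on which f and g agree.
equalizer :: Eq b => (a -> b) -> (a -> b) -> [a] -> [a]
equalizer f g = filter (\v -> f v == g v)

-- Two sample functions on Int (hypothetical example).
square :: Int -> Int
square v = v * v

plusTwo :: Int -> Int
plusTwo v = v + 2

-- Elements of [-3 .. 3] that cannot tell square and plusTwo apart.
agreeing :: [Int]
agreeing = equalizer square plusTwo [-3 .. 3]
```

Here agreeing evaluates to [-1, 2], the two integer solutions of v * v == v + 2 in the chosen range.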

One important special case of the equalizer is the kernel: in a category with a null object, we have a distinguished, unique, member <math>0</math> of any homset given by the compositions of the unique arrows to and from the null object. We define the kernel <math>Ker(f)</math> of an arrow <math>f</math> to be the equalizer of <math>f, 0</math>. Keeping in mind the arrow-centric view on categories, we tend to denote the arrow from <math>Ker(f)</math> to the source of <math>f</math> by <math>ker(f)</math>.

In the category of vector spaces, and linear maps, the map <math>0</math> really is the constant map taking the value <math>0</math> everywhere. And the kernel of a linear map <math>f: U\to V</math> is the equalizer of <math>f, 0</math>. Thus it is some vector space <math>W</math> with a map <math>i: W\to U</math> such that <math>fi = 0i = 0</math>, and any other map that fulfills this condition factors through <math>W</math>. Certainly the vector space <math>\{u\in U: f(u) = 0\}</math> fulfills the requisite condition. Nothing larger will do, since then the map composition wouldn't be 0, and nothing smaller will do, since then the inclusion of this space could not factor through the smaller candidate.

Hence, <math>Ker(f) = \{u\in U: f(u) = 0\}</math>, just like we might expect.

Dually, we get the coequalizer as the colimit of the equalizer diagram.

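A coequalizer cocone (File:CoequalizerCone.png) has to fulfill that <math>i_Bf = i_A = i_Bg</math>. Thus, writing <math>q=i_B</math>, we get an object together with a map <math>q</math> out of <math>B</math> (actually, an epimorphism) such that <math>f</math> and <math>g</math> both hit things that <math>q</math> identifies.

So, assuming we work with sets, we find that what a coequalizer of two functions <math>f, g: A\to B</math> is, is a projection <math>q: B\to B/\sim</math> onto a quotient set, where <math>\sim</math> is the smallest equivalence relation on <math>B</math> such that <math>f(x)\sim g(x)</math> for every <math>x\in A</math>.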
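For finite sets, this quotient can be computed by starting from singleton blocks and merging the blocks of f a and g a for each a. The sketch below assumes that sets are duplicate-free lists and that f and g land inside the given carrier; the names merge, coequalizerClasses, project and the example maps are invented here.

```haskell
import Data.List (partition)

-- Merge the blocks containing u and v into a single block.
merge :: Eq b => [[b]] -> (b, b) -> [[b]]
merge blocks (u, v) =
  let (touched, rest) = partition (\blk -> u `elem` blk || v `elem` blk) blocks
   in concat touched : rest

-- Blocks of the smallest equivalence relation on bs with f a ~ g a for all a.
coequalizerClasses :: Eq b => (a -> b) -> (a -> b) -> [a] -> [b] -> [[b]]
coequalizerClasses f g as bs = foldl merge [[b] | b <- bs] [(f a, g a) | a <- as]

-- The projection q, sending an element to its block (empty if not in B).
project :: Eq b => [[b]] -> b -> [b]
project blocks b = concat (take 1 (filter (elem b) blocks))

-- Example: A = {0..3}, B = {0..5}, f a = a, g a = a + 2.
exampleF, exampleG :: Int -> Int
exampleF a = a
exampleG a = a + 2

exampleClasses :: [[Int]]
exampleClasses = coequalizerClasses exampleF exampleG [0 .. 3] [0 .. 5]
```

In the example, the even and the odd numbers of B end up in two separate blocks, and project exampleClasses composed with exampleF agrees with its composite with exampleG, which is the equation <math>qf = qg</math>.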

Pushout and pullback squares

- Computer science applications

Homework

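- Prove that currying/uncurrying are isomorphisms in a CCC. Hint: the map <math>f\mapsto\lambda f</math> is a map <math>Hom(X\times A, B)\to Hom(X, [A\to B])</math>.

- Prove that in a CCC, the composition <math>\lambda \circ ev</math> is <math>\lambda\circ ev = 1_{[A\to B]}: [A\to B] \to [A\to B]</math>.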

- Prove that an equalizer is a monomorphism.

- Prove that a coequalizer is an epimorphism.

- What is the limit of a diagram of the shape of the category 2?

- * Implement a typed lambda calculus as an EDSL in Haskell.