User:Michiexile/MATH198/Lecture 8

Algebras over monads

We recall from the last lecture the definition of an Eilenberg-Moore algebra over a monad :

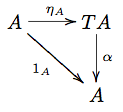

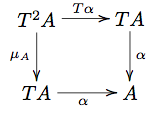

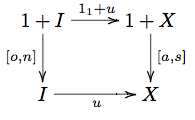

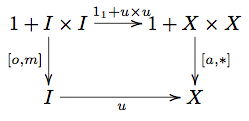

Definition An algebra over a monad in a category (a -algebra) is a morphism , such that the diagrams below both commute:

While a monad corresponds to the imposition of some structure on the objects in a category, an algebra over that monad corresponds to some evaluation of that structure.

Example: monoids

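Let $T$ be the Kleene star monad, $TA = A^*$, the one we get from the adjunction of free and forgetful functors between Monoids and Sets. Then a $T$-algebra on a set $A$ is equivalent to a monoid structure on $A$.

Indeed, if we have a monoid structure on $A$, given by $m\colon A^2\to A$ and $u\colon 1\to A$, we can construct a $T$-algebra by

$$\alpha([\,]) = u, \qquad \alpha([a_1, a_2, \ldots, a_n]) = m(a_1, \alpha([a_2, \ldots, a_n])).$$

This gives us, indeed, a $T$-algebra structure on $A$. Associativity and unity follow from the corresponding properties in the monoid.

On the other hand, if we have a $T$-algebra structure $\alpha\colon A^*\to A$, we can construct a monoid structure by setting

$$u = \alpha([\,]), \qquad m(a, b) = \alpha([a, b]).$$

Associativity of $m$ follows from the associativity of $\alpha$, and unitality of $u$ follows from the unitality of $\alpha$. For instance, the unit law gives $\alpha([c]) = c$, and the associativity law then gives $m(m(a, b), c) = \alpha([\alpha([a, b]), \alpha([c])]) = \alpha([a, b, c])$; the same computation shows $m(a, m(b, c)) = \alpha([a, b, c])$.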
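A minimal Haskell sketch of the two constructions, assuming we model the Kleene star $A^*$ by Haskell lists `[a]`, the monad multiplication by `concat` and the functor action by `map`. The function names (`algFromMonoid`, `monoidFromAlg`, `unitLaw`, `assocLaw`) are illustrative only; the laws are checked on sample inputs, which is a sanity check rather than a proof.

```haskell
-- From a monoid (u, m) on a to an algebra alpha :: [a] -> a,
-- following the recursive definition alpha [] = u, alpha (x:xs) = m x (alpha xs).
algFromMonoid :: a -> (a -> a -> a) -> ([a] -> a)
algFromMonoid u _ []       = u
algFromMonoid u m (x : xs) = m x (algFromMonoid u m xs)

-- From an algebra back to a monoid: the unit is alpha [] and
-- the product of a and b is alpha [a, b].
monoidFromAlg :: ([a] -> a) -> (a, a -> a -> a)
monoidFromAlg alpha = (alpha [], \a b -> alpha [a, b])

-- Unit law alpha . eta = id, tested at a single point x.
unitLaw :: Eq a => ([a] -> a) -> a -> Bool
unitLaw alpha x = alpha [x] == x

-- Associativity law alpha . mu = alpha . T alpha, tested at a list of lists:
-- mu is concat and T alpha is map alpha.
assocLaw :: Eq a => ([a] -> a) -> [[a]] -> Bool
assocLaw alpha xss = alpha (concat xss) == alpha (map alpha xss)
```

For example, `algFromMonoid 0 (+)` is the algebra on integers that sums a list, and `monoidFromAlg` applied to it recovers `0` as unit and addition as product.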

Example: Vector spaces

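We have free and forgetful functors between sets and vector spaces over a field $k$,

$$F\colon \mathrm{Sets}\to\mathrm{Vect}_k, \qquad U\colon \mathrm{Vect}_k\to\mathrm{Sets},$$

forming an adjoint pair $F\dashv U$, where the free functor takes a set $S$ and returns the vector space with basis $S$, while the forgetful functor takes a vector space and returns the set of all its elements.

The composition of these yields a monad $T = UF$ in $\mathrm{Sets}$, taking a set $S$ to the set of all formal linear combinations of elements in $S$. The monad multiplication takes formal linear combinations of formal linear combinations and multiplies them out:

$$\mu_S\Big(\sum_i a_i\Big(\sum_j b_{ij}\, s_j\Big)\Big) = \sum_{i,j} a_i b_{ij}\, s_j.$$

A $T$-algebra is a map $\alpha\colon TA\to A$ that acts like a vector space in the sense that

$$\alpha\Big(\sum_{i,j} a_i b_{ij}\, v_j\Big) = \alpha\Big(\sum_i a_i\, \alpha\Big(\sum_j b_{ij}\, v_j\Big)\Big).$$

We can define $v + w = \alpha(v + w)$ and $c\cdot v = \alpha(cv)$, where on the right-hand sides $v + w$ and $cv$ denote formal linear combinations in $TA$. The operations thus defined are associative, distributive, commutative, and everything else we could wish for in order to define a vector space, precisely because the operations inside $TA$ are, and $\alpha$ is associative.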
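A small worked instance of the monad multiplication $\mu$, assuming $v, w$ are two elements of $S$ and the coefficients are real numbers: a formal combination of formal combinations is multiplied out term by term.

$$\mu_S\big(3(2v + 5w) - 5(3v + 2w)\big) = 6v + 15w - 15v - 10w = -9v + 5w.$$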

The moral behind these examples is that using monads and monad algebras, we have significant power in defining and studying algebraic structures with categorical and algebraic tools. This paradigm ties in closely with the theory of operads - which has its origins in topology, but has come to good use within certain branches of universal algebra.

A (non-symmetric) operad is a graded set equipped with composition operations that obey certain unity and associativity conditions. As it turns out, non-symmetric operads correspond to the summands in a monad with polynomial underlying functor, and from a non-symmetric operad we can construct a corresponding monad.

The qualifier non-symmetric appears in this text to avoid dealing with the slightly more general theory of symmetric operads, which allow us to permute the input arguments, thus including the symmetrizer of a symmetric monoidal category in the entire definition.

To read more about these correspondences, I recommend starting with the blog posts Monads in Mathematics: [1]

Algebras over endofunctors

Suppose we started out with an endofunctor that is not the underlying functor of a monad - or an endofunctor for which we don't want to settle on a monadic structure. We can still do a lot of the Eilenberg-Moore machinery on this endofunctor - but we don't get quite the power of algebraic specification that monads offer us. At the core, here, lies the lack of associativity for a generic endofunctor - and algebras over endofunctors, once defined, will correspond to non-associative correspondences to their monadic counterparts.

Definition For an endofunctor , we define a -algebra to be an arrow .

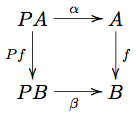

A homomorphism of -algebras is some arrow such that the diagram below commutes:

This homomorphism definition does not need much work to apply to the monadic case as well.

Example: Groups

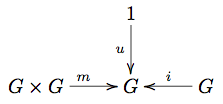

A group is a set $G$ with operations $u\colon 1\to G$, $m\colon G\times G\to G$ and $i\colon G\to G$, such that $u$ is a unit for $m$, $m$ is associative, and $i$ is an inverse.

Ignoring the properties for a moment, the theory of groups is captured by these three maps, or by a diagram

$$1\xrightarrow{u} G\xleftarrow{m} G\times G, \qquad G\xrightarrow{i} G.$$

We can summarize the diagram as

$$[u, m, i]\colon 1 + G\times G + G\to G$$

and thus recognize that groups are some equationally defined subcategory of the category of $P$-algebras for the polynomial functor $P(X) = 1 + X\times X + X$. The subcategory is full: if we have two algebras $\alpha\colon PG\to G$ and $\beta\colon PH\to H$ that both lie within the subcategory fulfilling all the additional axioms, then any $P$-algebra homomorphism $f\colon G\to H$ is compatible with the structure maps, and thus is a group homomorphism.
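A minimal Haskell sketch of the group signature as a $P$-algebra, assuming we encode $P(X) = 1 + X\times X + X$ as a data type `GroupF` with one constructor per summand. The names `GroupF`, `Algebra`, `intAlg`, `mod5Alg` and `isHomAt` are hypothetical, and the homomorphism condition $f\circ\alpha = \beta\circ Pf$ is only checked at sample inputs.

```haskell
{-# LANGUAGE DeriveFunctor #-}

-- The signature functor P(X) = 1 + X*X + X of the theory of groups
-- (unit, multiplication, inverse), with no equations imposed.
data GroupF x = Unit | Mul x x | Inv x
  deriving (Show, Functor)

-- A P-algebra is just an arrow P a -> a.
type Algebra f a = f a -> a

-- The integers under addition.
intAlg :: Algebra GroupF Integer
intAlg Unit      = 0
intAlg (Mul a b) = a + b
intAlg (Inv a)   = negate a

-- The integers modulo 5, represented by the values 0..4.
mod5Alg :: Algebra GroupF Integer
mod5Alg Unit      = 0
mod5Alg (Mul a b) = (a + b) `mod` 5
mod5Alg (Inv a)   = negate a `mod` 5

-- The homomorphism condition f . alpha == beta . fmap f, checked at one input x.
isHomAt :: (Functor f, Eq b) => Algebra f a -> Algebra f b -> (a -> b) -> f a -> Bool
isHomAt alpha beta f x = f (alpha x) == beta (fmap f x)
```

Reduction modulo 5 passes the check at inputs such as `Mul 7 9` and `Inv 3`, reflecting that it is a group homomorphism and hence a $P$-algebra homomorphism.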

We shall denote the category of $P$-algebras in a category $C$ by $\mathrm{Alg}_P(C)$, or just $\mathrm{Alg}_P$ if the category is implicitly understood.

This category is wider than the corresponding concept for a monad. We don't require the kind of associativity we would for a monad - we just lock down the underlying structure. This distinction is best understood with an example:

The free monoid monad has monoids for its algebras. On the other hand, we can pick out the underlying functor of that monad, forgetting about the unit and multiplication. An algebra over this functor is a slightly more general object: we no longer require the monad algebra laws $\alpha\circ T\alpha = \alpha\circ\mu_A$ and $\alpha\circ\eta_A = 1_A$, and thus the theory we get is that of a magma. We have concatenation, but we can't drop the brackets, and so we get something more reminiscent of a binary tree.

Initial $P$-algebras and recursion

Consider the polynomial functor $P(X) = 1 + X$ on the category of sets. Its algebras form a category, by the definitions above, and an algebra needs to pick out one special element $0$ and one endomorphism $T$ for a given set.

What would an initial object in this category of $P$-algebras look like? It would be an object $I$ equipped with maps $1\xrightarrow{o} I\xrightarrow{n} I$. For any other pair of maps $a\colon 1\to X$, $s\colon X\to X$, we'd have a unique arrow $u\colon I\to X$ such that the corresponding diagram commutes, or in equations such that

$$u(o) = a, \qquad u(n(x)) = s(u(x)) \text{ for all } x\in I.$$

Now, unwrapping the definitions in place, we notice that we will have elements $o, n(o), n(n(o)), \ldots$ in $I$. Initiality forces these to be everything: it prevents us from having any elements not in this minimally forced list, and it prevents any two of them from being identified.

We can rename the elements to form something more recognizable, by equating an element $n^k(o)$ in $I$ with the number $k$ of applications of $n$ to $o$. This yields elements $0, 1, 2, \ldots$, with one function that picks out the $0$, and another that gives us the successor.

This should be recognizable as exactly the natural numbers, with just enough structure on them to make the principle of mathematical induction work: suppose we can prove some statement $\varphi(0)$, and we can extend a proof of $\varphi(n)$ to a proof of $\varphi(n+1)$. Then induction tells us that the statement $\varphi(n)$ holds for all $n$.

More importantly, recursive definitions of functions from the natural numbers can be performed here by choosing an appropriate $P$-algebra to map to: the recursively defined function is the unique map $u$ out of the initial algebra.

This correspondence between the initial $P$-algebra and the natural numbers is the reason such an initial object, in a category with coproducts and a terminal object, is called a natural numbers object.
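A minimal Haskell sketch of recursion as the unique map out of the initial $(1+X)$-algebra, assuming we represent the initial algebra by a data type `Nat` with `Zero` playing the role of $o$ and `Succ` of $n$. The names `foldNat`, `natToInteger`, `plus` and `integerToNat` are our own, hypothetical ones; the two clauses of `foldNat` are exactly the equations $u(o) = a$ and $u(n(x)) = s(u(x))$.

```haskell
-- The initial algebra of P(X) = 1 + X.
data Nat = Zero | Succ Nat

-- The unique algebra map u from Nat to any other algebra (x, a, s).
foldNat :: x -> (x -> x) -> Nat -> x
foldNat a _ Zero     = a                    -- u(o)    = a
foldNat a s (Succ n) = s (foldNat a s n)    -- u(n(x)) = s(u(x))

-- Mapping into the algebra (Integer, 0, (+ 1)) recovers the usual number.
natToInteger :: Nat -> Integer
natToInteger = foldNat 0 (+ 1)

-- Addition m + n by recursion on n: map into the algebra (Nat, m, Succ).
plus :: Nat -> Nat -> Nat
plus m = foldNat m Succ

-- Building a Nat from a non-negative Integer (a helper, not itself a fold;
-- it does not terminate on negative input).
integerToNat :: Integer -> Nat
integerToNat 0 = Zero
integerToNat k = Succ (integerToNat (k - 1))
```

Choosing a different target algebra gives a different recursive function, while `foldNat` itself never changes.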

For another example, we consider the functor .

Pop Quiz Can you think of a structure with this as underlying defining functor?

An initial -algebra would be some diagram

such that for any other such diagram

we have a unique arrow such that

commutes.

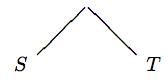

Unwrapping the definition, working over Sets again, we find we are forced to have some element , the image of . Any two elements in the set give rise to some , which we can view as being the binary tree

The same way that we could construct induction as an algebra map from a natural numbers object, we can use this object to construct a tree-shaped induction; and similarily, we can develop what amounts to the theory of structural induction using these more general approaches to induction.
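A minimal Haskell sketch of tree-shaped recursion, assuming the initial algebra of $P(X) = 1 + X\times X$ is represented by a data type `Tree` with `Leaf` as $o$ and `Node` as $m$. The names `foldTree`, `height` and `mirror` are hypothetical; `foldTree` is the unique algebra map satisfying $u(o) = a$ and $u(m(x, y)) = u(x)\star u(y)$.

```haskell
-- The initial algebra of P(X) = 1 + X*X.
data Tree = Leaf | Node Tree Tree
  deriving (Eq, Show)

-- The unique algebra map into another algebra (x, a, op).
foldTree :: x -> (x -> x -> x) -> Tree -> x
foldTree a _  Leaf       = a
foldTree a op (Node l r) = op (foldTree a op l) (foldTree a op r)

-- Height of a tree: map into the algebra (Integer, 0, \l r -> 1 + max l r).
height :: Tree -> Integer
height = foldTree 0 (\l r -> 1 + max l r)

-- Mirror image: map into the algebra on Tree itself, with the
-- arguments of Node swapped.
mirror :: Tree -> Tree
mirror = foldTree Leaf (\l r -> Node r l)
```

For instance, `mirror (Node Leaf (Node Leaf Leaf))` is `Node (Node Leaf Leaf) Leaf`, and both trees have height 2.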

Example of structural induction

Using the structure of $P$-algebras we shall prove the following statement:

Proposition The number of leaves in a binary tree is one more than the number of internal nodes.

Proof We write down the actual Haskell data type for the binary tree initial algebra.

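```haskell
data Tree = Leaf | Node Tree Tree

-- Number of leaves of a tree.
nLeaves :: Tree -> Integer
nLeaves Leaf       = 1
nLeaves (Node s t) = nLeaves s + nLeaves t

-- Number of internal nodes of a tree.
nNodes :: Tree -> Integer
nNodes Leaf       = 0
nNodes (Node s t) = 1 + nNodes s + nNodes t
```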

Now, as a base case, it is clear that for the no-nodes tree `Leaf`:

```
nLeaves Leaf = 1 = 1 + nNodes Leaf
```

For the structural induction step, we consider some binary tree, where we assume the statement to be known for each of its two subtrees. Hence, we have

```
tree = Node s t
nLeaves s = 1 + nNodes s
nLeaves t = 1 + nNodes t
```

and we may compute

```
nLeaves tree = nLeaves s + nLeaves t
             = 1 + nNodes s + 1 + nNodes t
             = 2 + nNodes s + nNodes t
             = 1 + (1 + nNodes s + nNodes t)
             = 1 + nNodes tree
```

where the last step uses `nNodes tree = 1 + nNodes s + nNodes t`.

Now, since the statement is proven for each of the cases in the structural description of the data, it follows from the principle of structural induction that the proof is finished.
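Structural induction proves the proposition for every tree. As a complementary sanity check (not a proof), one can enumerate all trees up to a given height and test the identity on each of them. The helper names `treesUpTo` and `propHolds` are hypothetical.

```haskell
data Tree = Leaf | Node Tree Tree

nLeaves :: Tree -> Integer
nLeaves Leaf       = 1
nLeaves (Node s t) = nLeaves s + nLeaves t

nNodes :: Tree -> Integer
nNodes Leaf       = 0
nNodes (Node s t) = 1 + nNodes s + nNodes t

-- All binary trees of height at most h.
treesUpTo :: Int -> [Tree]
treesUpTo 0 = [Leaf]
treesUpTo h = Leaf : [Node s t | s <- smaller, t <- smaller]
  where smaller = treesUpTo (h - 1)

-- The proposition nLeaves t == 1 + nNodes t, tested on every tree of height at most h.
propHolds :: Int -> Bool
propHolds h = all (\t -> nLeaves t == 1 + nNodes t) (treesUpTo h)
```

The number of trees grows quickly (26 trees of height at most 3, 677 of height at most 4), which is one reason the inductive proof is preferable to exhaustive testing.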

In order to really nail down what we are doing here, we need to define what we mean by predicates in a strict manner. There is a way to do this using fibrations, but this reaches far outside the scope of this course. For the really interested reader, I'll refer to [2].

Another way to do this is to introduce a topos, and work it all out in terms of its internal logic, but again, this reaches outside the scope of this course.

Lambek's lemma

When we write a recursive data type definition in Haskell, what we are really doing, to some extent, is defining the data type as the initial algebra of the corresponding functor. This intuitive equivalence is vindicated by the following lemma.

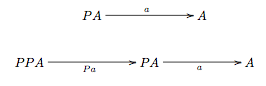

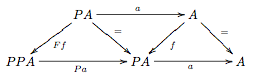

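Lemma (Lambek) If $P$ has an initial algebra $\alpha\colon PI\to I$, then $\alpha$ is an isomorphism; in particular $PI\cong I$.

Proof Let $\alpha\colon PI\to I$ be an initial $P$-algebra. We can apply $P$ again, and get a chain

$$P^2 I\xrightarrow{P\alpha} PI\xrightarrow{\alpha} I.$$

We can fill out the diagram

$$\begin{array}{ccc}
PI & \xrightarrow{P\lambda} & P^2 I\\
{\scriptstyle\alpha}\downarrow & & \downarrow{\scriptstyle P\alpha}\\
I & \xrightarrow{\lambda} & PI
\end{array}$$

to form the diagram

$$\begin{array}{ccccc}
PI & \xrightarrow{P\lambda} & P^2 I & \xrightarrow{P\alpha} & PI\\
{\scriptstyle\alpha}\downarrow & & \downarrow{\scriptstyle P\alpha} & & \downarrow{\scriptstyle\alpha}\\
I & \xrightarrow{\lambda} & PI & \xrightarrow{\alpha} & I
\end{array}$$

where $\lambda\colon I\to PI$ is induced by initiality, since $P\alpha\colon P^2 I\to PI$ is also a $P$-algebra.

The diagram above commutes, so $\alpha\circ\lambda$ is a $P$-algebra homomorphism from $\alpha$ to itself, and by initiality $\alpha\circ\lambda = 1_I$. Using the left-hand square, $\lambda\circ\alpha = P\alpha\circ P\lambda = P(\alpha\circ\lambda) = P(1_I) = 1_{PI}$. Thus $\lambda$ is an inverse to $\alpha$. QED.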

Thus, by Lambek's lemma, we know that if $P_A(X) = 1 + A\times X$, then for that $P_A$ the initial algebra $L$, should it exist, will fulfill $L\cong 1 + A\times L$, which in turn is exactly what we write when defining this type in Haskell:

```haskell
data List a = Nil | Cons a (List a)
```
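The isomorphism of Lambek's lemma can be made explicit in Haskell with a fixed-point type. The sketch below follows the common `Fix`/`cata` encoding, defined locally rather than imported from a library; the names `Fix`, `In`, `out`, `cata`, `ListF` and `lambda` are our own assumptions. Here `In` plays the role of $\alpha\colon PI\to I$, and `lambda` is built exactly as in the proof, as the map induced by the algebra $P\alpha$ (`fmap In`).

```haskell
{-# LANGUAGE DeriveFunctor #-}

-- Fixed point of a functor f: In plays the role of alpha : P I -> I.
newtype Fix f = In { out :: f (Fix f) }

-- The unique algebra map from Fix f to any algebra alg : f x -> x.
cata :: Functor f => (f x -> x) -> Fix f -> x
cata alg = alg . fmap (cata alg) . out

-- P_A(X) = 1 + A*X as a functor in X.
data ListF a x = NilF | ConsF a x
  deriving Functor

type List a = Fix (ListF a)

nil :: List a
nil = In NilF

cons :: a -> List a -> List a
cons a xs = In (ConsF a xs)

-- The inverse of In, induced by initiality from the algebra fmap In : P(P I) -> P I,
-- as in the proof of Lambek's lemma. It agrees with out on every value.
lambda :: Functor f => Fix f -> f (Fix f)
lambda = cata (fmap In)
```

In Haskell the inverse is also available directly as the field accessor `out`; defining `lambda` via `cata` mirrors the categorical argument instead.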

Recursive definitions with the unique maps from the initial algebra

Consider the following $P_A$-algebra structure $l\colon 1 + A\times\mathbb{N}\to\mathbb{N}$ on the natural numbers:

$$l(*) = 0, \qquad l(a, n) = 1 + n.$$

We get a unique map $f$ from the initial algebra for $P_A$ (lists of elements of type $A$) to $\mathbb{N}$ from this definition. This map will fulfill:

$$f(\mathrm{Nil}) = l(*) = 0, \qquad f(\mathrm{Cons}\ a\ xs) = l(a, f(xs)) = 1 + f(xs),$$

which starts taking on the shape of the usual definition of the length of a list:

$$\mathrm{length}(\mathrm{Nil}) = 0, \qquad \mathrm{length}(\mathrm{Cons}\ a\ xs) = 1 + \mathrm{length}(xs).$$

And thus, the machinery of endofunctor algebras gives us a strong method for doing recursive definitions in a theoretically sound manner.
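A minimal Haskell sketch of the length example, assuming the `List` type from the Lambek section. The fold `foldList` (a hypothetical name) is the unique map out of the initial $P_A$-algebra: it takes the value of the algebra on the $*$ summand and its action on the $A\times X$ summand. Plugging in the algebra $l$ gives `lengthList`.

```haskell
data List a = Nil | Cons a (List a)

-- The unique map from the initial algebra of P_A(X) = 1 + A*X into the
-- algebra given by z (the image of *) and c (the action on pairs (a, x)).
foldList :: x -> (a -> x -> x) -> List a -> x
foldList z _ Nil         = z
foldList z c (Cons a xs) = c a (foldList z c xs)

-- The algebra l from the text: l(*) = 0 and l(a, n) = 1 + n.
lengthList :: List a -> Integer
lengthList = foldList 0 (\_ n -> 1 + n)

-- Convert a built-in Haskell list into our List type.
fromList :: [a] -> List a
fromList = foldr Cons Nil
```

On built-in lists, the Prelude function `foldr` plays the same role as `foldList`, which is why `fromList` can itself be written as a fold.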

Homework

Complete credit will be given for 8 of the 13 questions.

- Find a monad whose algebras are associative algebras: vector spaces with a binary, associative, unitary operation (multiplication) defined on them. Factorize the monad into a free/forgetful adjoint pair.

- Find an endofunctor of Sets whose initial object describes trees that are either binary or ternary at each point, carrying values from some $A$ in the leaves.

- Write an implementation of the monad of vector spaces in Haskell. If this is tricky, restrict the domain of the monad to, say, a 3-element set, and implement the specific example of a 3-dimensional vector space as a monad. Hint: [3] describes this approach.

- Find a $P_A$-algebra $X$ such that the unique map from the initial algebra to $X$ results in the function that will reverse a given list.

- Find a $P_A$-algebra structure on the object $1 + A$ that will pick out the first element of a list, if possible.

- Find a -algebra structure on the object that will pick out the sum of the leaf values for the binary tree in the initial object.

- Complete the proof of Lambek's lemma by proving the diagram commutes.

- * We define a coalgebra for an endofunctor $T$ to be some arrow $\gamma\colon A\to TA$. If $T$ is a comonad, i.e. equipped with a counit $\epsilon\colon T\to 1$ and a cocomposition $\Delta\colon T\to T^2$, then we define a coalgebra for the comonad to additionally fulfill $T\gamma\circ\gamma = \Delta_A\circ\gamma$ (compatibility) and $\epsilon_A\circ\gamma = 1_A$ (counitality).

- (2pt) Prove that if $T$ is an endofunctor with an initial algebra $\alpha\colon TI\to I$, then $I$ is also a coalgebra. Does $T$ necessarily have a final coalgebra?

- (2pt) Prove that if $F\dashv U$ is an adjoint pair, then $FU$ forms a comonad.

- (2pt) Describe a final coalgebra over the comonad formed from the free/forgetful adjunction between the categories of Monoids and Sets.

- (2pt) Describe a final coalgebra over the endofunctor .

- (2pt) Describe a final coalgebra over the endofunctor .

- (2pt) Prove that if $\gamma\colon Z\to TZ$ is a final coalgebra for an endofunctor $T$, then $\gamma$ is an isomorphism.